🐳The Deep Roots of DeepSeek: How It All Began

A translation of DeepSeek CEO Liang Wenfeng's first public interview, originally published in May 2023.

Hi, this is Tony! Welcome to this issue of Recode China AI, your go-to newsletter for the latest AI news and research in China.

The DeepSeek momentum shows no signs of slowing down. Since the release of its latest LLM DeepSeek-V3 and reasoning model DeepSeek-R1, the tech community has been abuzz with excitement. Here’s a quick roundup of DeepSeek’s latest developments:

The DeepSeek chatbot app skyrocketed to the top of the iOS free app charts in both the U.S. and China.

Japan’s semiconductor sector is facing a downturn as shares of major chip companies fell sharply on Monday following the emergence of DeepSeek’s models.

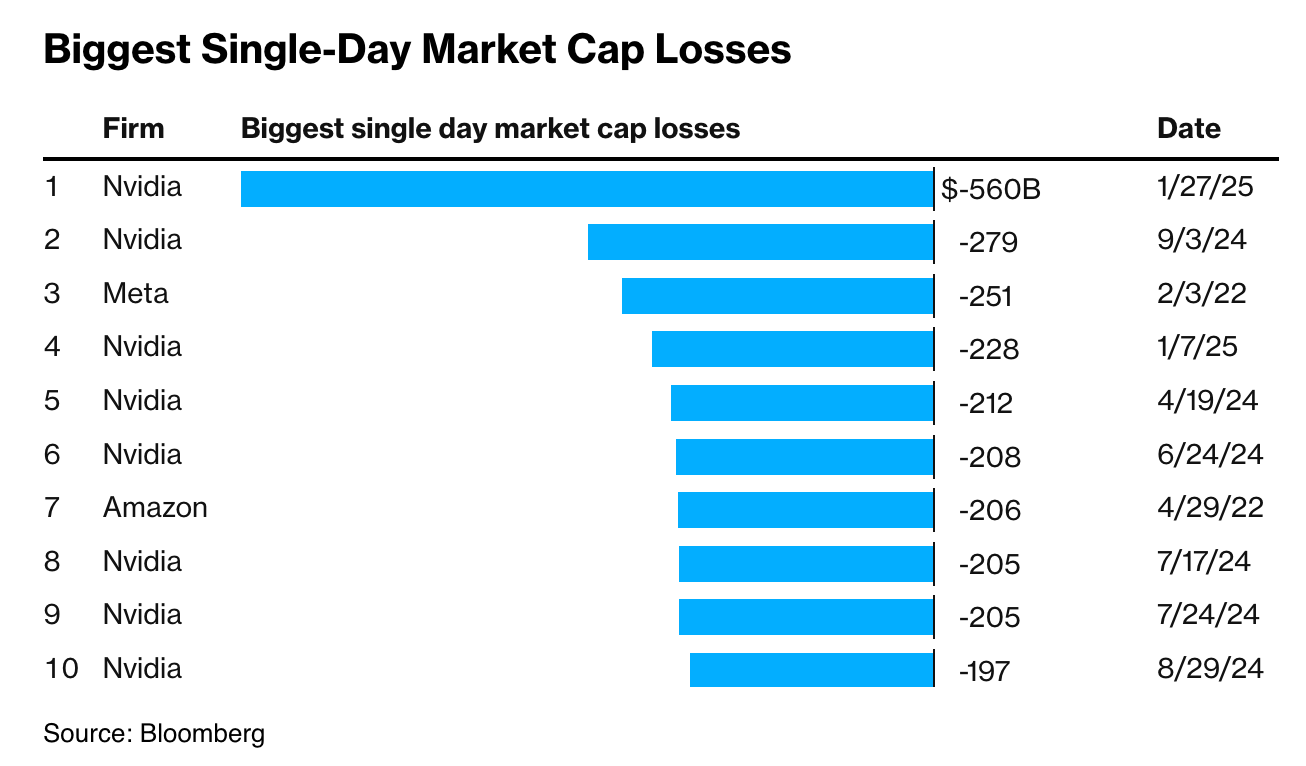

U.S. tech stocks also experienced a significant downturn on Monday due to investor concerns over competitive advancements in AI by DeepSeek. Nvidia stock dropped nearly 18%.

Scale AI CEO Alexandr Wang praised DeepSeek’s latest model as the top performer on “Humanity’s Last Exam,” a rigorous test featuring the hardest questions from math, physics, biology, and chemistry professors. Wang also claimed, without offering evidence, that DeepSeek has about 50,000 H100s.

Meta is concerned DeepSeek outperforms its yet-to-be-released Llama 4, The Information reported. Meta isn’t alone — other tech giants are also scrambling to understand how this Chinese startup has achieved such results.

DeepSeek-R1 also achieved impressive rankings on LLM evaluations, including #3 on LMSYS’ (Large Model Systems Organization) LLM evaluation arena for general performance, and #2 on the WebDev arena for web coding tasks.

a16z co-founder Marc Andreessen likened DeepSeek-R1’s impact to AI’s “Sputnik moment.” (But this time, the technology is being shared openly.)

OpenAI, ByteDance, Alibaba, Zhipu AI, and Moonshot AI are among the teams actively studying DeepSeek, Chinese media outlet TMTPost reported. OpenAI and ByteDance are even exploring potential research collaborations with the startup.

DeepSeek CEO Liang Wenfeng, also the founder of High-Flyer – a Chinese quantitative fund and DeepSeek’s primary backer – recently met with Chinese Premier Li Qiang, where he highlighted the challenges Chinese companies face due to U.S. restrictions on advanced chip exports, The Wall Street Journal reported.

AMD said on X that it has integrated the new DeepSeek-V3 model into its Instinct MI300X GPUs, optimized for peak performance with SGLang.

The low-profile AI lab has become the subject of intense analysis, with everyone holding their own lens to study its meteoric rise. Its CEO rarely speaks publicly, so every interview and statement is scrutinized.

China-focused podcast and media platform ChinaTalk has already translated one interview with Liang, conducted after DeepSeek-V2 was released in 2024 (kudos to Jordan!). In this post, I translate another from May 2023, shortly after DeepSeek’s founding. The piece was auto-translated by the DeepSeek chatbot, with minor revisions.

Nearly 20 months later, it’s fascinating to revisit Liang’s early views, which may hold the secret behind how DeepSeek, despite limited resources and compute access, has risen to stand shoulder-to-shoulder with the world’s leading AI companies. For those short on time, I also recommend Wired’s latest feature and MIT Tech Review’s coverage on DeepSeek.

ChinaTalk: Deepseek: The Quiet Giant Leading China’s AI Race

Wired: How Chinese AI Startup DeepSeek Made a Model that Rivals OpenAI

MIT Tech Review: How a top Chinese AI model overcame US sanctions


The following article is translated from 36Kr, written by Yu Lili, and edited by Liu Jing. You can find the original link here.

Madness of High-Flyer: The Stealthy Rise of an AI Giant in LLMs

In the swarm of LLM battles, High-Flyer stands out as the most unconventional player.

This is a game destined for the few. Many startups have begun to adjust their strategies or even consider withdrawing after major players entered the field, yet this quantitative fund is forging ahead alone.

In May, High-Flyer named its new independent organization dedicated to LLMs "DeepSeek," emphasizing its focus on achieving truly human-level AI. Their goal is not just to replicate ChatGPT, but to explore and unravel more mysteries of Artificial General Intelligence (AGI).

Moreover, in a field considered highly dependent on scarce talent, High-Flyer is attempting to gather a group of obsessed individuals, wielding what they consider their greatest weapon: collective curiosity.

In the quantitative field, High-Flyer is a "top fund" that has reached a scale of hundreds of billions. However, its recent focus on the new wave of AI is quite dramatic.

The shortage of high-performance GPU chips among domestic cloud providers has become the most direct factor limiting the birth of China's generative AI. According to "Caijing Eleven People" (a Chinese media outlet), there are no more than five companies in China with over 10,000 GPUs. Besides several leading tech giants, this list includes a quantitative fund company named High-Flyer. It is generally believed that 10,000 NVIDIA A100 chips are the computational threshold for training LLMs independently.

In fact, this company, rarely viewed through the lens of AI, has long been a hidden AI giant: in 2019, High-Flyer Quant established an AI company, with its self-developed deep learning training platform "Firefly One" totaling nearly 200 million yuan in investment, equipped with 1,100 GPUs; two years later, "Firefly Two" increased its investment to 1 billion yuan, equipped with about 10,000 NVIDIA A100 graphics cards.

This means, in terms of computational power alone, High-Flyer had secured its ticket to develop something like ChatGPT earlier than many major tech companies.

However, LLMs heavily depend on computational power, algorithms, and data, requiring an initial investment of $50 million and tens of millions of dollars per training session, making it difficult for companies not worth billions to sustain. Despite these challenges, High-Flyer remains optimistic. Founder Liang Wenfeng told us, "The key is that we want to do this, and we can do this, making us one of the most suitable candidates."

This enigmatic optimism first stems from High-Flyer's unique growth trajectory.

Quantitative investment is an import from the United States, which means almost all founding teams of China's top quantitative funds have some experience with American or European hedge funds. High-Flyer is the exception: it is entirely homegrown, having grown through its own explorations.

In 2021, just six years after its founding, High-Flyer reached a scale of hundreds of billions and was dubbed one of the "Four Heavenly Kings of Quantitative Finance."

Growing as an outsider, High-Flyer has always been like a disruptor. Several industry insiders have told us that High-Flyer "has always used a novel approach to enter this industry, whether in R&D systems, products, or sales."

A founder of a leading quantitative fund believes that High-Flyer has never "followed the conventional path" but has instead done things "the way they wanted." Even when that meant being somewhat unorthodox or controversial, "they dared to speak out openly and then act according to their own ideas."

Regarding the secret to High-Flyer's growth, insiders attribute it to "selecting a group of inexperienced but high-potential individuals, and having an organizational structure and corporate culture that allows innovation to happen," which they believe is also the secret for LLM startups to compete with major tech companies.

The more crucial secret, perhaps, comes from High-Flyer's founder, Liang Wenfeng.

While studying AI at Zhejiang University (a top Chinese university known for its STEM programs), Liang was utterly convinced that "AI will definitely change the world," a belief that was not widely accepted in 2008.

After graduation, unlike his peers who joined major tech companies as programmers, he retreated to a cheap rental in Chengdu, enduring repeated failures in various scenarios, eventually breaking into the complex field of finance and founding High-Flyer.

An interesting detail is that in the early years, a similarly eccentric friend, working on "unreliable" aircraft in a Shenzhen urban village, tried to recruit him. This friend later founded a company worth hundreds of billions of dollars, named DJI.

Therefore, beyond the inevitable topics of money, talent, and computational power involved in LLMs, we also discussed with High-Flyer founder Liang what kind of organizational structure can foster innovation and how long human madness can last.

After more than a decade of entrepreneurship, this is the first public interview for this rarely seen "tech geek" type of founder.

Coincidentally, on April 11, when High-Flyer announced its venture into LLMs, it also quoted a piece of advice from French New Wave director François Truffaut to young directors: "Il faut avoir une folle ambition et une folle sincérité." (This translates to: "One must have a mad ambition and a mad sincerity.")

1. Conducting Research and Exploration

Doing the most important and challenging things

36Kr: Recently, High-Flyer announced its decision to venture into building LLMs. Why would a quantitative fund undertake such a task?

Liang Wenfeng: Our venture into LLMs isn't directly related to quantitative finance or finance in general. We've established a new company called DeepSeek specifically for this purpose.

Many of the core members at High-Flyer come from an AI background. We've experimented with various scenarios and eventually delved into the sufficiently complex field of finance. General AI might be one of the next big challenges, so for us, it's a matter of how to do it, not why.

36Kr: Are you planning to train an LLM yourselves, or focus on a specific vertical industry, like finance-related LLMs?

Liang Wenfeng: We aim to develop general AI, or AGI. Language models might be a necessary path towards AGI and already exhibit some of its characteristics, so we'll start there and later include visual models, etc.

36Kr: Many startups have abandoned the broad direction of only developing general LLMs due to major tech companies entering the field.

Liang Wenfeng: We won't prematurely design applications based on models; we'll focus on the LLMs themselves.

36Kr: Many believe that for startups, entering the field after major companies have established a consensus is no longer good timing.

Liang Wenfeng: Currently, it seems that neither major companies nor startups can quickly establish a dominant technological advantage. With OpenAI leading the way and everyone building on publicly available papers and code, by next year at the latest, both major companies and startups will have developed their own large language models.

Both major companies and startups have their opportunities. Existing vertical scenarios aren't in the hands of startups, which makes this phase less friendly for them. However, since these scenarios are ultimately fragmented and consist of small needs, they are more suited to flexible startup organizations. In the long run, the barriers to applying LLMs will lower, and startups will have opportunities at any point in the next 20 years.

Our goal is clear: not to focus on verticals and applications, but on research and exploration.

36Kr: Why do you define your mission as "conducting research and exploration"?

Liang Wenfeng: It's driven by curiosity. From a broader perspective, we want to test some hypotheses. For example, we understand that the essence of human intelligence might be language, and human thought might be a process of language. You think you're thinking, but you might just be weaving language in your mind. This suggests that human-like AI (AGI) could emerge from language models.

From a narrower perspective, GPT-4 still holds many mysteries. While we replicate, we also research to uncover these mysteries.

36Kr: But research means incurring greater costs.

Liang Wenfeng: Simply replicating can be done based on public papers or open-source code, requiring minimal training or just fine-tuning, which is low cost. Research involves various experiments and comparisons, requiring more computational power and higher personnel demands, thus higher costs.

36Kr: Where does the research funding come from?

Liang Wenfeng: High-Flyer, as one of our funders, has ample R&D budgets, and we also have an annual donation budget of several hundred million yuan, previously given to public welfare organizations. If needed, adjustments can be made.

36Kr: But without two to three hundred million dollars, you can't even get to the table for foundational LLMs. How do you sustain that level of continuous investment?

Liang Wenfeng: We're also in talks with various funders. Many VCs have reservations about funding research; they need exits and want to commercialize products quickly. With our priority on research, it's hard to secure funding from VCs. But we have computational power and an engineering team, which is half the battle.

36Kr: What business models have you considered and hypothesized?

Liang Wenfeng: We're currently thinking about publicly sharing most of our training results, which could integrate with commercialization. We hope more people can use LLMs even on a small app at low cost, rather than the technology being monopolized by a few.

36Kr: Some major companies will also offer services later. What differentiates you?

Liang Wenfeng: Major companies' models might be tied to their platforms or ecosystems, whereas we are completely free.

36Kr: Regardless, a commercial company pouring open-ended investment into research and exploration seems somewhat crazy.

Liang Wenfeng: If you must find a commercial reason, it might be elusive because it's not cost-effective.

From a commercial standpoint, basic research has a low return on investment. Early investors in OpenAI certainly didn't invest thinking about the returns but because they genuinely wanted to pursue this.

What we're certain of now is that since we want to do this and have the capability, at this point in time, we are among the most suitable candidates.

2. The Reserve of Ten Thousand GPUs and Its Cost

An exciting endeavor perhaps cannot be measured solely by money.

36Kr: GPUs have become a highly sought-after resource amidst the surge of ChatGPT-driven entrepreneurship. You had the foresight to reserve 10,000 GPUs as early as 2021. Why?

Liang Wenfeng: Actually, the progression from one GPU in the beginning, to 100 GPUs in 2015, 1,000 GPUs in 2019, and then to 10,000 GPUs happened gradually. Before reaching a few hundred GPUs, we hosted them in IDCs. As the scale grew larger, hosting could no longer meet our needs, so we began building our own data centers.

Many might think there's an undisclosed business logic behind this, but in reality, it's primarily driven by curiosity.

36Kr: What kind of curiosity?

Liang Wenfeng: Curiosity about the boundaries of AI capabilities. For many outsiders, the wave of ChatGPT has been a huge shock; but for insiders, the impact of AlexNet in 2012 already heralded a new era. AlexNet's error rate was significantly lower than other models at the time, reviving neural network research that had been dormant for decades. Although specific technological directions have continuously evolved, the combination of models, data, and computational power remains constant. Especially after OpenAI released GPT-3 in 2020, the direction was clear: a massive amount of computational power was needed. Yet, even in 2021 when we invested in building Firefly Two, most people still couldn't understand. (You can find more details about Firefly Two in this paper.)

36Kr: So since 2012, you've been focusing on the reserve of computational power?

Liang Wenfeng: For researchers, the thirst for computational power is insatiable. After conducting small-scale experiments, there's always a desire to conduct larger ones. Since then, we've consciously deployed as much computational power as possible.

36Kr: Many assume that building this computer cluster is for quantitative hedge fund businesses using machine learning for price predictions?

Liang Wenfeng: If solely for quantitative investment, very few GPUs would suffice. Beyond investment, we've conducted extensive research, wanting to understand what paradigms can fully describe the entire financial market, whether there are more concise expressions, where the boundaries of different paradigms lie, and if these paradigms have broader applicability, etc.

36Kr: But this process is also a money-burning endeavor.

Liang Wenfeng: An exciting endeavor perhaps cannot be measured solely by money. It's like buying a piano for the home; one can afford it, and there's a group eager to play music on it.

36Kr: GPUs typically depreciate at a rate of 20%.

Liang Wenfeng: We haven't calculated precisely, but it shouldn't be that much. NVIDIA's GPUs are hard currency; even older models from many years ago are still in use by many. When we decommissioned older GPUs, they were quite valuable second-hand, not losing too much.

36Kr: Building a computer cluster involves significant maintenance fees, labor costs, and even electricity bills.

Liang Wenfeng: Electricity and maintenance fees are actually quite low, accounting for only about 1% of the hardware cost annually. Labor costs are not low, but they are also an investment in the future, the company's greatest asset. The people we choose are relatively modest, curious, and have the opportunity to conduct research here.

36Kr: In 2021, High-Flyer was among the first in the Asia-Pacific region to acquire A100 GPUs. Why earlier than some cloud providers?

Liang Wenfeng: We had conducted pre-research, testing, and planning for new GPUs very early. As for some cloud providers, to my knowledge, their previous needs were scattered. It wasn't until 2022, with the demand for machine training in autonomous driving and the ability to pay, that some cloud providers built up their infrastructure. It's difficult for large companies to purely conduct research and training; it's more driven by business needs.

36Kr: How do you view the competitive landscape of LLMs?

Liang Wenfeng: Large companies certainly have advantages, but if they can't quickly apply them, they may not persist, as they need to see results more urgently.

Leading startups also have solid technology, but like the previous wave of AI startups, they face commercialization challenges.

36Kr: Some might think that a quantitative fund emphasizing its AI work is just blowing bubbles for other businesses.

Liang Wenfeng: But in fact, our quantitative fund has largely stopped external fundraising.

36Kr: How do you distinguish between AI believers and speculators?

Liang Wenfeng: Believers were here before and will remain here. They are more likely to buy GPUs in bulk or sign long-term agreements with cloud providers, rather than renting short-term.

3. How to Truly Foster Innovation

Innovation often arises spontaneously, not through deliberate arrangement, nor can it be taught.

36Kr: How is the recruitment progress for the DeepSeek team?

Liang Wenfeng: The initial team has been assembled. Due to a shortage of personnel in the early stages, some people will be temporarily seconded from High-Flyer. We started recruiting when ChatGPT 3.5 became popular at the end of last year, but we still need more people to join.

36Kr: Talent for LLM startups is also scarce. Some investors say that suitable candidates might only be found in AI labs of giants like OpenAI and Facebook AI Research. Will you look overseas for such talent?

Liang Wenfeng: If pursuing short-term goals, it's right to look for experienced people. But in the long run, experience is less important; foundational abilities, creativity, and passion are more crucial. From this perspective, there are many suitable candidates domestically.

36Kr: Why is experience less important?

Liang Wenfeng: It's not necessarily true that only those who have done something can do it. A principle at High-Flyer is to look at ability, not experience. Our core technical positions are mainly filled by fresh graduates or those who have graduated within one or two years.

36Kr: In innovative ventures, do you think experience is a hindrance?

Liang Wenfeng: When doing something, experienced people might instinctively tell you how it should be done, but those without experience will explore repeatedly, think seriously about how to do it, and then find a solution that fits the current reality.

36Kr: High-Flyer entered the industry as a complete outsider with no financial background and became a leader within a few years. Is this hiring principle one of the secrets?

Liang Wenfeng: Our core team, including myself, initially had no quantitative experience, which is quite unique. It's not the secret to success, but it's part of High-Flyer's culture. We don't intentionally avoid experienced people, but we focus more on ability.

Take the sales position as an example. Our two main salespeople were novices in this industry. One previously worked in foreign trade for German machinery, and the other wrote backend code for a securities firm. When they entered this industry, they had no experience, no resources, and no accumulation.

Now, we might be the only large private fund that primarily relies on direct sales. Direct sales mean not sharing fees with intermediaries, resulting in higher profit margins under the same scale and performance. Many have tried to imitate us but haven't succeeded.

36Kr: Why have many tried to imitate you but not succeeded?

Liang Wenfeng: Because that alone is not enough to foster innovation. It needs to match the company's culture and management.

In fact, in their first year, they achieved nothing, and only started to see some results in the second year. But our evaluation standards are different from most companies. We don't have KPIs or so-called tasks.

36Kr: Then what are your evaluation standards?

Liang Wenfeng: Unlike most companies that focus on the volume of client orders, our sales commissions are not pre-calculated. We encourage salespeople to develop their own networks, meet more people, and create greater influence.

We believe that an honest salesperson who gains clients' trust might not get them to place orders immediately, but can make them feel that he is a reliable person.

36Kr: After selecting the right people, how do you get them up to speed?

Liang Wenfeng: Assign them important tasks and do not interfere. Let them figure things out and perform on their own.

In fact, a company's DNA is hard to imitate. For example, when hiring inexperienced people, how to judge their potential and how to help them grow after they join are things that cannot be directly imitated.

36Kr: What do you think are the necessary conditions for building an innovative organization?

Liang Wenfeng: Our conclusion is that innovation requires as little intervention and management as possible, giving everyone the space to freely express themselves and the opportunity to make mistakes. Innovation often arises spontaneously, not through deliberate arrangement, nor can it be taught.

36Kr: This is a very unconventional management style. How do you ensure that someone is efficient and aligned with your direction under these circumstances?

Liang Wenfeng: Ensure that values are aligned during recruitment, and then use corporate culture to ensure alignment in pace. Of course, we don't have a written corporate culture because anything written down can hinder innovation. More often, it's about leading by example. How you make decisions when something happens becomes a guideline.

36Kr: Do you think that in this wave of competition for LLMs, the innovative organizational structure of startups could be a breakthrough point in competing with major companies?

Liang Wenfeng: According to textbook methodologies, what startups are doing now wouldn't survive.

But the market is changing. The real deciding force is often not some ready-made rules and conditions, but the ability to adapt and adjust to changes.

Many large companies' organizational structures can no longer respond and act quickly, and they easily become bound by past experiences and inertia. Under this new wave of AI, a batch of new companies will certainly emerge.

4. True Madness

Innovation is expensive and inefficient, sometimes accompanied by waste.

36Kr: What excites you the most about doing this?

Liang Wenfeng: Figuring out whether our conjectures are true. If they are, it's incredibly exciting.

36Kr: What are the essential criteria for recruiting for the LLM team?

Liang Wenfeng: Passion and solid foundational skills. Nothing else is as important.

36Kr: Are such people easy to find?

Liang Wenfeng: Their enthusiasm usually shows because they really want to do this, so these people are often looking for you at the same time.

36Kr: Developing LLMs might be an endless endeavor. Does the cost concern you?

Liang Wenfeng: Innovation is expensive and inefficient, sometimes accompanied by waste. That's why innovation only emerges after economic development reaches a certain level. In very poor conditions or in industries not driven by innovation, cost and efficiency are crucial. Look at OpenAI; it also burned a lot of money before achieving results.

36Kr: Do you feel like you're doing something crazy?

Liang Wenfeng: I don't know if it's crazy, but there are many things in this world that can't be explained by logic, just like many programmers who are also crazy contributors to open-source communities. They're exhausted from the day but still contribute code.

36Kr: There's a kind of spiritual reward in that.

Liang Wenfeng: It's like hiking 50 kilometers; your body is exhausted, but your spirit is fulfilled.

36Kr: Do you think curiosity-driven madness can last forever?

Liang Wenfeng: Not everyone can be crazy for a lifetime, but most people, in their younger years, can fully engage in something without any utilitarian purpose.