🎸Alibaba's o1-Style Model QwQ, AI Agent Operates Computers & Phones, and U.S. to Tighten Chip Restrictions on China

Weekly China AI News from November 25, 2024 to December 1, 2024

Hi, this is Tony! Welcome to this week’s issue of Recode China AI, a newsletter for China’s trending AI news and papers.

Three things to know

Alibaba’s QwQ outperforms OpenAI’s o1-preview on math benchmarks.

Zhipu AI releases an AI agent to operate a desktop computer like a human.

The U.S. is expected to announce new restrictions on semiconductor equipment and AI memory chip sales to China this week.

Alibaba Previews QwQ: An Open-Source Challenger to OpenAI’s o1

What’s New: In my previous issue, I discussed a model called Marco-o1 from Alibaba’s international division. I mentioned that Alibaba’s leading research team, Qwen, might develop its own o1-style large reasoning model.

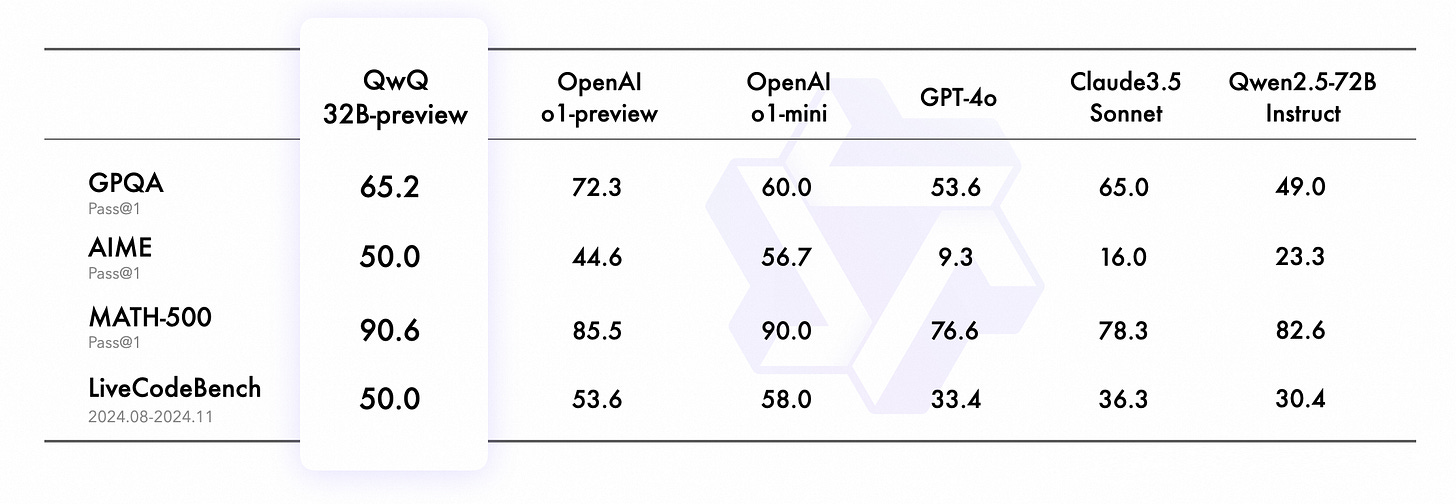

The very next day, Qwen introduced QwQ, short for Qwen with Questions and pronounced like “quill”. The release, QwQ-32B-Preview, is an experimental research model that outperforms OpenAI’s o1-preview on AIME and MATH, two benchmarks for evaluating AI on math problems, though it still trails o1-mini on AIME. You can try the demo here.

How It Works: Much like OpenAI’s o1 and DeepSeek-R1, QwQ excels in mathematics and programming through long, reflective thought processes.

According to its blog, the model can perform deep introspection: questioning its assumptions, engaging in self-dialogue, and examining every step of its reasoning. QwQ-32B-Preview supports a context length of up to 32,768 tokens.

As a preview release, QwQ-32B-Preview has known limitations: it can switch languages unexpectedly, underperforms on tasks that require common-sense reasoning, and occasionally gets stuck in logical loops.

Despite these limitations, the model has gained significant traction. Terence Tao, the renowned mathematician and AI enthusiast, called QwQ-32B-Preview significantly better than previous open-source models at solving math problems. It is also the #1 trending model on Hugging Face.

In my own test, I asked the model to reverse all the letters in “I love Qwen-QwQ”. QwQ got it right after a thought process of nearly 4,500 words. OpenAI’s o1 also answered correctly, taking just six seconds, while DeepSeek-R1 failed the task entirely. (If you can read Chinese, I found this test of QwQ on Zhihu, China’s Quora, quite convincing.)
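The reversal task makes a handy probe because the correct answer is trivial to compute outside the model. Here is a minimal Python check, assuming “reverse all the letters” means reversing the whole string; since the prompt could also be read as reversing the letters within each word, both readings are shown.

```python
prompt = "I love Qwen-QwQ"

# Reading 1: reverse the whole character sequence (spaces and the hyphen included).
reversed_prompt = prompt[::-1]

# Reading 2: reverse the letters inside each word but keep the word order.
per_word = " ".join(word[::-1] for word in prompt.split(" "))

print(reversed_prompt)  # QwQ-newQ evol I
print(per_word)         # I evol QwQ-newQ
```

A model that spends thousands of words on this is doing a lot of work for a one-line answer, which is worth keeping in mind when weighing speed against accuracy.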

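QwQ is released under the Apache 2.0 license, which permits free download, modification, and commercial use. That makes it more open than Meta’s Llama models, which ship under a custom license with additional usage restrictions.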

Future Directions: Qwen’s research team said it will keep exploring the reasoning abilities of LLMs by studying process reward models, LLM critique, complex multi-step reasoning, and reinforcement learning with real-world feedback.

Why It Matters: QwQ is a great model for Alibaba and the open-source community. Let’s take a moment to celebrate this progress!

As I mentioned last time, the releases of DeepSeek-R1 and now Alibaba’s QwQ show that the “inference compute” paradigm pioneered by OpenAI’s o1 is no longer exclusive to OpenAI. The gap between OpenAI and other AI labs is narrowing.

However, releasing an o1-style model and scoring high on benchmarks doesn’t automatically translate into real-world success. Users will favor models that answer complex questions both quickly and accurately, a combination QwQ is still working toward.

Given Alibaba’s progress with both pre-trained LLMs like Qwen and reasoning models like QwQ, will it keep investing in Chinese AI startups? The e-commerce giant has already poured at least one billion dollars into Chinese AI startups including Moonshot AI, Baichuan AI, Zhipu AI, MiniMax, and 01.AI.

Zhipu AI Unveils AI Agents that Can Use Computers and Phones

What’s New: Chinese AI startup Zhipu AI last week introduced GLM-PC, an AI agent designed to operate a desktop computer like a human. Demonstrated at the company’s Agent OpenDay, GLM-PC can, for example, schedule and attend virtual meetings and compile meeting notes. The feature is now open for beta testing.

How It Works: Similar to Anthropic’s computer-use feature for Claude but less advanced, GLM-PC can view a computer screen, click buttons, scroll, and type on a keyboard. It can operate software programs designed for human users, though CEO Zhang Peng said that its current capabilities are limited to basic behaviors.
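To make “view the screen, then click, scroll, or type” concrete, here is a minimal Python sketch of the observe-decide-act loop that computer-use agents are generally described as running. Everything in it is hypothetical: `MockScreen` stands in for a real desktop, a scripted policy stands in for the vision-language model that would pick actions, and none of the names reflect Zhipu AI’s actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple, Union


# Hypothetical action space mirroring the primitives described above.
@dataclass
class Click:
    x: int
    y: int


@dataclass
class Scroll:
    dy: int  # sign convention is arbitrary in this sketch


@dataclass
class TypeText:
    text: str


@dataclass
class Done:
    """Signals that the policy considers the task finished."""


Action = Union[Click, Scroll, TypeText, Done]


@dataclass
class MockScreen:
    """Toy stand-in for a real desktop: it only records what the agent did."""
    scroll_offset: int = 0
    typed: str = ""
    clicks: List[Tuple[int, int]] = field(default_factory=list)

    def observe(self) -> dict:
        # A real agent would capture a screenshot here and feed it to a VLM.
        return {"scroll_offset": self.scroll_offset, "typed": self.typed}

    def apply(self, action: Action) -> None:
        if isinstance(action, Click):
            self.clicks.append((action.x, action.y))
        elif isinstance(action, Scroll):
            self.scroll_offset += action.dy
        elif isinstance(action, TypeText):
            self.typed += action.text


def run_agent(screen: MockScreen, policy: Callable[[dict], Action], max_steps: int = 20) -> int:
    """Observe -> decide -> act until the policy returns Done or the step cap is hit.

    Returns the number of actions actually executed. The default cap of 20 is arbitrary.
    """
    for step in range(max_steps):
        action = policy(screen.observe())
        if isinstance(action, Done):
            return step
        screen.apply(action)
    return max_steps


# Usage: a scripted "policy" standing in for the model's decisions.
script = iter([Click(120, 40), TypeText("weekly sync"), Scroll(-300), Done()])
screen = MockScreen()
steps = run_agent(screen, lambda obs: next(script))
print(steps, screen.typed, screen.scroll_offset, screen.clicks)  # 3 weekly sync -300 [(120, 40)]
```

The step cap is a deliberate design choice: without it, a confused policy could keep clicking forever.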

GLM-PC also features a unique invisible-screen mode that allows it to handle background tasks without disrupting the user’s active work.

GLM-PC is built on Zhipu AI’s multimodal CogAgent model, an 18-billion-parameter visual language model (VLM) that specializes in graphical user interface (GUI) understanding and navigation.

AutoGLM Upgrade: Zhipu AI also announced a major upgrade to AutoGLM, its agent for web and mobile app navigation. AutoGLM can now complete tasks involving more than 50 steps. In a live demo, CEO Zhang showcased its abilities by directing AutoGLM to send a red packet worth RMB 20,000 to attendees.

The web agent, AutoGLM-Web, can visit a video streaming site, play a specific episode, and post comments, completing the whole series of actions from a single prompt. APIs for AutoGLM will be available to enterprise clients in the coming weeks.
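Why is “more than 50 steps” a notable number? A rough back-of-the-envelope model helps: if each action succeeds independently with the same probability p, an n-step task succeeds with probability p^n. The sketch below uses illustrative per-step rates, not measured AutoGLM numbers, and the independence assumption is a simplification (real agents can sometimes notice and recover from a bad click).

```python
def chain_success(p_step: float, n_steps: int) -> float:
    """Probability that all n steps succeed, assuming independent steps with equal success rate."""
    return p_step ** n_steps


def required_step_rate(target: float, n_steps: int) -> float:
    """Per-step success rate needed to reach an overall target over n independent steps."""
    return target ** (1.0 / n_steps)


# Illustrative per-step reliabilities (assumptions, not benchmark results).
for p in (0.95, 0.99):
    print(f"per-step {p:.2f} -> 50-step success {chain_success(p, 50):.3f}")

print(f"per-step rate needed for 90% over 50 steps: {required_step_rate(0.9, 50):.4f}")
```

Under this toy model, a 95%-reliable step yields under 8% success over 50 steps, while 99% yields roughly 60%, which is why long-horizon tasks demand very reliable individual actions.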

Why It Matters: AI agents like Claude’s computer-use feature and GLM-PC could transform tasks that have relied on human operators, such as software development.

However, this level of control also raises concerns about data access and privacy. In an interview with Chinese media, CEO Zhang proposed a framework to address them: for instance, sensitive tasks could be processed locally, while more complex operations are handled in the cloud.
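Zhang’s local-versus-cloud idea amounts to a routing policy. Here is a minimal Python sketch of one way it could work, assuming each task arrives with a sensitivity flag and a complexity score; both fields, the threshold of 7, and all names are hypothetical and not Zhipu AI’s actual design.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    LOCAL = "local"
    CLOUD = "cloud"


@dataclass
class Task:
    description: str
    touches_sensitive_data: bool  # hypothetical flag, e.g. passwords or payment screens
    complexity: int               # hypothetical 1-10 score from some upstream estimator


def route(task: Task, cloud_threshold: int = 7) -> Target:
    """Decide where a task runs under a privacy-first hybrid policy (illustrative only)."""
    # Privacy first: anything sensitive stays on-device, however hard it is.
    if task.touches_sensitive_data:
        return Target.LOCAL
    # Non-sensitive tasks go to the larger cloud model only when they are hard enough.
    if task.complexity >= cloud_threshold:
        return Target.CLOUD
    return Target.LOCAL


for t in (
    Task("enter a banking password", True, 9),
    Task("summarize a two-hour meeting recording", False, 8),
    Task("open the calendar app", False, 2),
):
    print(f"{t.description!r} -> {route(t).value}")
```

Checking sensitivity before complexity means privacy always wins over capability: a hard task that touches a banking password still stays on-device.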

Weekly News Roundup

The Biden administration is preparing to announce new restrictions on semiconductor equipment and AI memory chip sales to China. These measures, expected to be unveiled as early as this week, aim to further limit China’s technological advancement but are less severe than initially proposed. (Bloomberg)

ChangXin Memory Technologies, which develops AI memory chips, is notably excluded from the Entity List.

Some Huawei suppliers and two SMIC factories will be sanctioned.

Export restrictions will include high-bandwidth memory (HBM) used in GPUs and AI chips. The U.S. government could end up adding about 200 Chinese companies to the Department of Commerce’s Entity List. (Wired)

Baidu’s Apollo robotaxi service has been granted a permit to test autonomous vehicles in Hong Kong. The Transport Department of Hong Kong issued the license last Friday. Baidu Apollo is allowed to conduct trials with 10 autonomous vehicles in North Lantau. The license is valid for five years, from December 9, 2024, to December 8, 2029. (Reuters)

Pony.ai completed its initial public offering (IPO) on November 27, listing on the Nasdaq Global Select Market under the ticker symbol PONY. The company raised about $413.4 million, combining the IPO proceeds with concurrent private placements. (Bloomberg)

ByteDance has filed a lawsuit against a former intern, seeking RMB 8 million ($1.1 million) in damages for allegedly sabotaging an AI training project. The case has been accepted by the Haidian District People's Court in Beijing. (SCMP)

Trending Research

Deep Learning Scaling is Predictable, Empirically

This is not recent research but an older paper published in 2017 by Baidu Research. It has recently gained attention as one of the first studies on scaling laws in neural networks. Researchers investigated the relationships between training data size, model size, and generalization error to understand scaling trends in deep learning. They found that generalization error follows predictable power-law scaling with training set size across various machine learning domains, including machine translation, language modeling, image classification, and speech recognition. Improved models shift the learning curve downward but do not alter the power-law exponent, which determines the learning curve’s steepness. Model size grows sublinearly with data size, meaning the number of parameters needed to fit the data grows more slowly than the dataset itself.
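The core finding can be written, in our own notation, as ε(m) ≈ α·m^β, where m is the training set size, ε is the generalization error, and β < 0 is the power-law exponent. Taking logs gives log ε = log α + β·log m, a straight line on a log-log plot, so the exponent can be estimated with ordinary least squares. The sketch below fits two synthetic curves (made-up numbers, not the paper’s measurements) to illustrate the claim that a better model lowers α without changing β.

```python
import math


def fit_power_law(sizes, errors):
    """Least-squares fit of err ~= alpha * m**beta, done as a straight line in log-log space."""
    xs = [math.log(m) for m in sizes]
    ys = [math.log(e) for e in errors]
    n = len(xs)
    x_mean, y_mean = sum(xs) / n, sum(ys) / n
    # Slope of the log-log line is the power-law exponent beta.
    beta = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    # Intercept of the log-log line is log(alpha).
    alpha = math.exp(y_mean - beta * x_mean)
    return alpha, beta


# Synthetic curves (illustrative only): same exponent, different prefactor.
sizes = [10 ** k for k in range(4, 9)]        # 1e4 to 1e8 training examples
baseline = [5.0 * m ** -0.3 for m in sizes]   # hypothetical baseline model
improved = [3.0 * m ** -0.3 for m in sizes]   # hypothetical better model: curve shifted down

for name, errs in (("baseline", baseline), ("improved", improved)):
    alpha, beta = fit_power_law(sizes, errs)
    print(f"{name}: alpha={alpha:.2f}, beta={beta:.3f}")
```

Both fits recover the same slope; only the intercept moves, which is exactly what “shifting the learning curve downward without altering the exponent” looks like on a log-log plot. Real measurements are noisy and flatten out at very small and very large data sizes, so a fit like this is only meaningful over the power-law region.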