📽️ Zhipu AI’s ‘Her’ Chatbot, Alibaba’s Multimodal Model Release, and miHoYo Founder’s New AI Venture

Weekly China AI News from August 26, 2024 to September 1, 2024

Hi, this is Tony! Welcome to this week’s issue of Recode China AI, a newsletter for China’s trending AI news and papers.

Three things to know

Zhipu AI introduced a new video call feature to its chatbot that allows users to engage in real-time video conversations with AI.

Alibaba’s new multimodal model Qwen2-VL can chat through a camera, play card games, and control mobile phones by acting as an agent.

Cai Haoyu (Hugh Tsai), co-founder of Genshin Impact’s creator miHoYo, has reportedly launched a new AI venture called Anuttacon.

Zhipu AI Introduces Real-Time Video Call Feature for Chatbot

What’s New: Chinese AI startup Zhipu AI introduced a new video call feature to its chatbot, ChatGLM, allowing users to engage in real-time video conversations with AI directly from their phones. The feature, announced last week at KDD 2024, is set to launch on August 30 for select users, with a broader rollout planned following initial feedback and iterations.

Why It Matters: The new video chat feature comes amid growing interest in multimodal AI applications, where AI can interact seamlessly across text, audio, and video. With this rollout, Zhipu AI moves ahead of U.S. rivals OpenAI and Google, whose comparable video capabilities in GPT-4o and Project Astra remain at the demo stage.

How It Works: Chinese media outlets recently tested the video call feature. Below is a short summary of its capabilities and shortcomings:

The chatbot accurately identified everyday objects, such as a cup with a subtle Starbucks logo and cartoon characters on a glass, and more complex items like an Xbox controller.

When the camera panned around an office, the AI correctly deduced that the user was in a brainstorming meeting and recognized video editing tasks on a computer screen.

The AI uses conversational fillers like “hmm” and “oh” that mimic human speech, giving it a more lifelike presence than existing AI models.

The chatbot can provide step-by-step cooking instructions, such as for sweet and sour pork, by recognizing the dish in the video feed. It can also assist with troubleshooting, like identifying a reversed battery in a calculator.

During tests, the AI guided users through solving simple math problems.

However, the feature is not without flaws. The AI sometimes misidentified similar-looking characters, such as confusing “8” and “b,” or occasionally generated nonsensical responses.

Model Updates: Alongside the video call feature, Zhipu AI also unveiled significant upgrades to its core AI models:

GLM-4-Plus: The latest version of Zhipu’s proprietary GLM series, this LLM offers better language understanding, instruction following, and long-text processing. It is trained with AI-generated synthetic data to boost performance and uses Proximal Policy Optimization (PPO) to improve reasoning in areas like math, coding, and algorithms. Benchmarks indicate that GLM-4-Plus is on par with OpenAI’s GPT-4o and Llama 3.1 405B on language tasks.

CogView-3-Plus: Zhipu AI’s latest text-to-image model rivals leading models like Midjourney V6 and FLUX, with improved image generation and editing capabilities.

GLM-4V-Plus: This vision understanding model excels at both image and video comprehension, including time-aware video analysis.

CogVideoX: The newly open-sourced video generation model features 5 billion parameters.
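The GLM-4-Plus entry mentions PPO. Zhipu has not described its training setup in detail, so the sketch below shows only the clipped surrogate objective of standard PPO, in plain Python, to make the idea concrete. The function name and the default clip value of 0.2 (a common choice) are assumptions, not Zhipu’s settings.

```python
import math


def ppo_clipped_objective(new_logprobs, old_logprobs, advantages, clip_eps=0.2):
    """Average clipped surrogate objective of standard PPO (to be maximized).

    Each list holds one entry per sampled action: its log-probability under the
    updated policy, under the policy that generated the sample, and its advantage.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        # Probability ratio pi_new(a|s) / pi_old(a|s), computed from log-probs
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - eps, 1 + eps] so one update cannot move too far
        clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Take the pessimistic (smaller) of the unclipped and clipped terms
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)
```

When the new and old policies agree, every ratio is 1 and the objective is just the mean advantage; once a ratio leaves the clip range, further gains in that direction stop being rewarded.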

Alibaba’s New Multimodal Model Analyzes 20-Minute Videos with Ease

What’s New: Alibaba has released Qwen2-VL, the latest model in its vision-language series. The model can chat through a camera, play card games, and control mobile phones by acting as an agent.

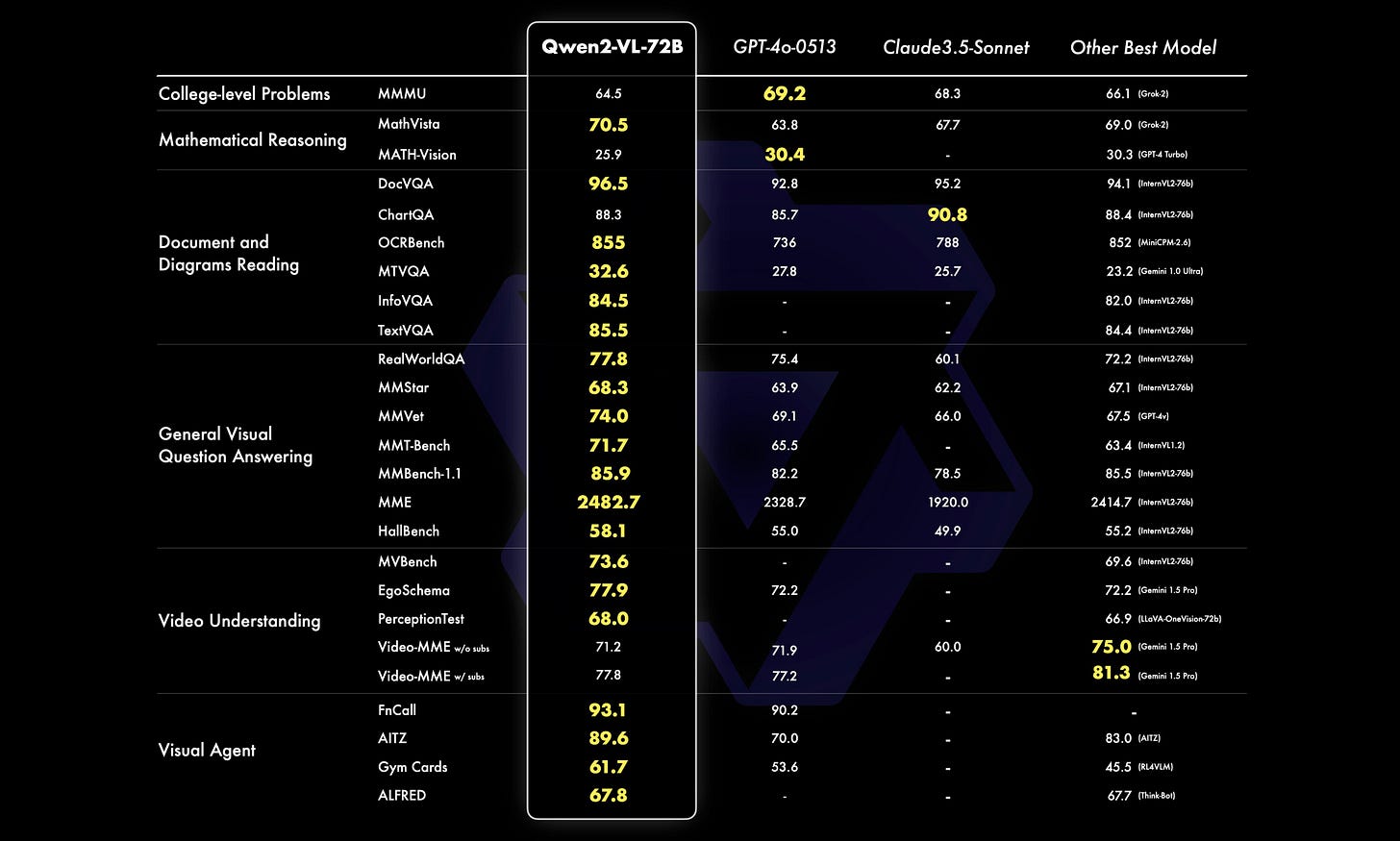

The flagship 72-billion-parameter version of Qwen2-VL achieves state-of-the-art (SOTA) visual understanding across 20 benchmarks, yet it still trails GPT-4o and Claude 3.5 Sonnet on the widely recognized MMMU (Massive Multi-discipline Multimodal Understanding) benchmark.

The model is available in three versions: the open-source 2B and 7B models, and the more advanced 72B model, accessible via API.

How It Works: Qwen2-VL stands out with several key upgrades:

Thanks to an architectural improvement called Naive Dynamic Resolution, Qwen2-VL can handle images of any resolution. Instead of resizing every image to a fixed size, it maps each image to a variable number of visual tokens, so it can adapt to images of different sizes and clarity.

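Another key architectural innovation is Multimodal Rotary Position Embedding (M-ROPE), which splits positional embeddings into temporal, height, and width components so that the model can capture the positions of 1D text, 2D images, and 3D video within a single sequence.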

Qwen2-VL excels in specific benchmarks like MathVista (for mathematical reasoning), DocVQA (for understanding documents), and RealWorldQA (for answering real-world questions using visual information).

The model can analyze videos longer than 20 minutes, then provide detailed summaries and answer questions about them.

Qwen2-VL can also act as a control agent, operating devices like mobile phones and robots using visual cues and text commands.

The model can recognize and understand text in images across multiple languages, including European languages, Japanese, Korean, and Arabic, making it accessible to a global audience.
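Naive Dynamic Resolution and M-ROPE fit together: the first decides how many visual tokens an image becomes, the second decides where those tokens sit. The Python sketch below illustrates both, assuming (as the Qwen2-VL technical report describes) a vision encoder with 14-pixel patches whose adjacent 2×2 patch tokens are merged into one, and M-ROPE ids that are identical across all three components for text. The helper names are hypothetical, and the real preprocessing also enforces minimum and maximum pixel budgets and handles video frames, which this sketch omits.

```python
from typing import List, Tuple

PATCH = 14  # assumed vision-encoder patch size in pixels
MERGE = 2   # assumed: each 2x2 block of patch tokens is merged into one token
UNIT = PATCH * MERGE  # 28 pixels per visual token along each side

Position = Tuple[int, int, int]  # (temporal, height, width) ids for M-ROPE


def token_grid(height: int, width: int) -> Tuple[int, int]:
    """Dynamic resolution: token grid for an image of any size (no fixed resize)."""
    # Snap each side to the nearest multiple of UNIT, keeping at least one token
    rows = max(1, round(height / UNIT))
    cols = max(1, round(width / UNIT))
    return rows, cols


def text_positions(start: int, num_tokens: int) -> List[Position]:
    # Text: all three components share one index, which reduces to ordinary 1D RoPE
    return [(start + i,) * 3 for i in range(num_tokens)]


def image_positions(start: int, rows: int, cols: int) -> List[Position]:
    # Image: the temporal id is fixed; height and width ids follow the token's grid cell
    return [(start, start + r, start + c) for r in range(rows) for c in range(cols)]


def next_start(positions: List[Position]) -> int:
    # The next segment begins one past the largest id used so far
    return max(max(p) for p in positions) + 1


# Example: 3 text tokens, a 56x56 image (a 2x2 token grid), then 2 more text tokens
seq = text_positions(0, 3)
rows, cols = token_grid(56, 56)
seq += image_positions(next_start(seq), rows, cols)
seq += text_positions(next_start(seq), 2)
```

Under these assumptions a 224×224 image becomes an 8×8 grid of 64 tokens while a 1080×1920 frame becomes 39×69 = 2,691 tokens. In the example sequence, the image’s four tokens share temporal id 3 and the following text resumes at id 5. For video, the temporal id would additionally advance from frame to frame.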

miHoYo Founder Launches New AI Startup Anuttacon

What’s New: Cai Haoyu (Hugh Tsai), co-founder of miHoYo, the creator of the blockbuster game Genshin Impact, has reportedly launched a new AI venture called Anuttacon, after stepping down as chairman in September 2023.

Anuttacon’s goal is “to create new, innovative, intelligent and deeply engaging virtual world experiences and AGI products harnessing the full potential of AI technology.”

Connections with miHoYo: According to Chinese media, Anuttacon’s registration address matches that of HoYoverse, miHoYo’s Singapore headquarters. In addition, Ray Wang, a former executive at miHoYo and Chinese video site Bilibili, now serves as Anuttacon’s President of User Ecology, according to LinkedIn.

Anuttacon is still in stealth mode, but it has already attracted talent such as Tong Xin, a leading computer graphics researcher from Microsoft, who has joined as a Partner Research Manager, and Wu Xiaojian, a former Meta researcher on the Llama 3 team.

In a recent LinkedIn post, Cai said that AI-generated content has already transformed game development. He argued that only the top 0.0001% of elite talent will still be needed, while most developers, from average to professional, should consider switching careers.

Why It Matters: Cai’s pivot from miHoYo to AI highlights the growing convergence of AI and gaming. Cai believes AI will polarize the gaming industry into exceptional high-end games from top teams on one side and games generated by individuals on the other. This could herald a new age in which AI not only enhances but also democratizes game development. However, it also raises concerns about the future employment of game developers.

Weekly News Roundup

Chinese AI startup MiniMax has introduced video-01, a text-to-video AI model, to compete with OpenAI’s Sora and with rival domestic AI firms in the burgeoning AI market. (SCMP)

ByteDance is reportedly setting up an LLM Research Institute and recruiting top AI talent, according to one Chinese media outlet. However, people familiar with the matter told Chinese media outlet Yicai that although ByteDance has a long-term LLM project, it has not decided to build an independent institute. Separately, Huang Wenhao, a technical co-founder of 01.AI, has joined ByteDance.

EnFlame, a Tencent-backed Chinese AI chip start-up, has initiated the pre-IPO tutoring process with an investment bank, aiming to list publicly amidst China's push for semiconductor self-sufficiency. (SCMP)

On August 29, the AI Forum of the China Internet Civilization Conference in Chengdu unveiled the “Generative AI Industry Self-Regulation Initiative.” According to the press release, the initiative aims to provide guidelines for the development of generative AI, ensuring data security and privacy, and fostering a positive content ecosystem.

China’s perspective on AI safety has evolved rapidly, with increasing technical and policy focus on mitigating potential catastrophic risks associated with advanced AI systems. (Carnegie Endowment)

TIME reveals a stark global divide in the ownership and location of powerful AI chips, with the U.S. and China dominating the market, leaving many countries with limited to no access to these critical resources. (TIME)

Trending Research

Researchers from the University of Hong Kong and Microsoft propose AgentGen, a novel framework designed to enhance the planning abilities of LLM-based agents through automated environment and task generation. It utilizes an inspiration corpus for environmental diversity and introduces a bidirectional evolution method, Bi-Evol, to create tasks with a smooth difficulty gradient. On AgentBoard, AgentGen-tuned LLMs, such as Llama-3 8B, outperform models like GPT-3.5 and even GPT-4 in certain scenarios.

Researchers from Chinese AI startup Baichuan open-source a data processing pipeline and present BaichuanSEED, an LLM that serves as a competitive baseline in the field. The work emphasizes the importance of extensive data collection coupled with effective deduplication for improving model performance, and the model aims to demonstrate the potential of these methods to improve language understanding and generation.

CogVLM2: Visual Language Models for Image and Video Understanding

Researchers from Chinese AI startup Zhipu AI present the CogVLM2 family, which includes CogVLM2, CogVLM2-Video, and GLM-4V. These models are engineered to enhance vision-language fusion, support higher-resolution inputs, and cater to a broader range of modalities and applications. The CogVLM2 model for image understanding builds on a visual expert architecture and refined training recipes, and can handle input resolutions up to 1344x1344 pixels. The CogVLM2-Video model, designed for video understanding, incorporates multi-frame input with timestamps and introduces a method for automatically constructing temporal grounding data.
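The BaichuanSEED entry stresses deduplication. Baichuan’s exact pipeline is not reproduced here. As an illustration of one widely used technique, the plain-Python sketch below filters near-duplicate documents with MinHash signatures over character 5-grams. The shingle size, the 64 hash functions, and the 0.8 similarity threshold are arbitrary assumptions. The pairwise comparison is also quadratic, whereas large pipelines typically add locality-sensitive hashing so that not every pair is compared.

```python
import hashlib
from typing import List, Set


def shingles(text: str, n: int = 5) -> Set[str]:
    # Normalize case and whitespace, then take overlapping character n-grams
    text = " ".join(text.lower().split())
    if len(text) <= n:
        return {text}
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def minhash_signature(text: str, num_hashes: int = 64) -> List[int]:
    # One salted BLAKE2b hash per signature slot; keep the minimum over all shingles
    grams = shingles(text)
    signature = []
    for seed in range(num_hashes):
        salt = seed.to_bytes(4, "little")
        signature.append(min(
            int.from_bytes(
                hashlib.blake2b(g.encode(), digest_size=8, salt=salt).digest(), "little"
            )
            for g in grams
        ))
    return signature


def estimated_jaccard(a: List[int], b: List[int]) -> float:
    # The fraction of matching slots estimates the Jaccard similarity of the shingle sets
    return sum(x == y for x, y in zip(a, b)) / len(a)


def deduplicate(docs: List[str], threshold: float = 0.8) -> List[str]:
    # Keep a document only if it is not too similar to any document already kept
    kept: List[str] = []
    kept_sigs: List[List[int]] = []
    for doc in docs:
        sig = minhash_signature(doc)
        if all(estimated_jaccard(sig, s) < threshold for s in kept_sigs):
            kept.append(doc)
            kept_sigs.append(sig)
    return kept
```

For example, a corpus containing the same sentence twice (once with different capitalization and spacing) plus one unrelated sentence would come back with two documents, since the normalized duplicates produce identical signatures.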