🤳🏻Will RetNet Replace Transformer? ChatGLM Creator Now Worth $500M; Alibaba-Backed Startup Launches Lensa-Like AI Avatar App

Weekly China AI News from July 17 to July 23

Dear readers, this week I'll discuss:

An innovative neural network architecture called Retentive Networks that could replace Transformers as the backbone of large language models.

Zhipu AI, the Chinese startup behind the ChatGLM chatbot, reportedly received an investment from Meituan.

An AI avatar app that can generate stylized portraits from your selfies quickly gained popularity in China.

Plus, researchers proposed a virtual "software company" staffed by AI agents to streamline software development.

Successor to Transformers? New AI Architecture Enables Efficient Large Language Models

What’s new: GPT-4 is brilliantly smart, but it only allows users to ask 25 questions within three hours. This limitation is due to the significant cost of inference. Transformers, the de facto backbone architecture of large language models (LLMs), still suffer from high inference costs in terms of memory, latency, and throughput, because each decoding step has O(n) complexity in the sequence length n. This makes deploying LLMs challenging.

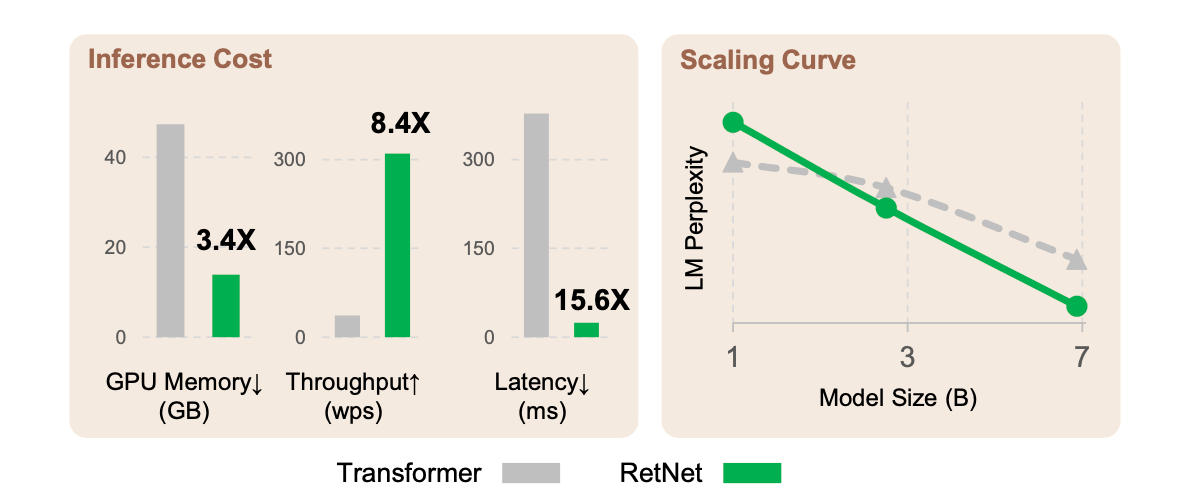

A group of researchers from Microsoft Research Asia and Tsinghua University proposed a new architecture called Retentive Network (RetNet) to improve the training efficiency and reduce the inference cost of LLMs. For a 7B model at a sequence length of 8k, RetNet decodes with 8.4x higher throughput, 70% less memory, and lower latency than Transformers.

How it works: RetNet introduces a multi-scale retention mechanism to replace multi-head attention. The mechanism can be computed in three equivalent ways: a parallel form for efficient training, a recurrent form for O(1) inference cost per step, and a chunkwise recurrent form for efficient long-sequence modeling.
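To make the three forms concrete, here is a minimal pure-Python sketch of a single retention head. It is a simplification under stated assumptions, not the authors' implementation: it uses one fixed decay `gamma`, and it leaves out the position-dependent rotation of queries and keys, the per-head decay rates that make retention "multi-scale", normalization, and gating. The function names are illustrative only.

```python
import random
from typing import List

Matrix = List[List[float]]


def retention_parallel(q: Matrix, k: Matrix, v: Matrix, gamma: float) -> Matrix:
    """Parallel form: o_i = sum over j <= i of gamma^(i-j) * (q_i . k_j) * v_j."""
    out = []
    for i in range(len(q)):
        row = [0.0] * len(v[0])
        for j in range(i + 1):  # causal mask: only positions j <= i contribute
            score = sum(a * b for a, b in zip(q[i], k[j])) * gamma ** (i - j)
            for c in range(len(row)):
                row[c] += score * v[j][c]
        out.append(row)
    return out


def retention_recurrent(q: Matrix, k: Matrix, v: Matrix, gamma: float) -> Matrix:
    """Recurrent form: a fixed-size state replaces the growing history."""
    d_k, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    out = []
    for q_t, k_t, v_t in zip(q, k, v):
        # S_t = gamma * S_{t-1} + k_t^T v_t
        state = [[gamma * state[a][b] + k_t[a] * v_t[b] for b in range(d_v)]
                 for a in range(d_k)]
        # o_t = q_t S_t; the cost per step does not depend on t
        out.append([sum(q_t[a] * state[a][b] for a in range(d_k))
                    for b in range(d_v)])
    return out


def retention_chunkwise(q: Matrix, k: Matrix, v: Matrix, gamma: float,
                        chunk: int) -> Matrix:
    """Chunkwise form: parallel inside a chunk, recurrent state across chunks."""
    d_k, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d_k)]
    out = []
    for start in range(0, len(q), chunk):
        qc = q[start:start + chunk]
        kc = k[start:start + chunk]
        vc = v[start:start + chunk]
        inner = retention_parallel(qc, kc, vc, gamma)  # within-chunk part
        for i, q_t in enumerate(qc):
            decay = gamma ** (i + 1)  # distance back to the end of the previous chunk
            cross = [decay * sum(q_t[a] * state[a][b] for a in range(d_k))
                     for b in range(d_v)]
            out.append([x + y for x, y in zip(inner[i], cross)])
        n = len(qc)
        # Carry the state forward to the end of this chunk.
        state = [[gamma ** n * state[a][b]
                  + sum(gamma ** (n - 1 - j) * kc[j][a] * vc[j][b] for j in range(n))
                  for b in range(d_v)] for a in range(d_k)]
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    q, k, v = ([[rng.uniform(-1, 1) for _ in range(d)] for _ in range(6)]
               for d in (4, 4, 3))
    p = retention_parallel(q, k, v, 0.9)
    r = retention_recurrent(q, k, v, 0.9)
    c = retention_chunkwise(q, k, v, 0.9, chunk=4)
    diff = max(abs(x - y) for a, b in ((p, r), (p, c))
               for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    print("max difference between forms:", diff)
```

All three functions compute the same outputs up to floating-point rounding. The recurrent form only keeps a `d_k` by `d_v` state, which is why per-step inference cost stays constant as the sequence grows, whereas attention has to revisit every cached key and value.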

Experiments on language modeling tasks show RetNet achieves performance comparable to Transformers. In addition to higher inference throughput, it also uses 25-50% less memory and runs 7x faster than vanilla Transformers during training.

Why it matters: The authors said RetNet breaks the “impossible triangle” of achieving good performance, low inference cost, and parallel training simultaneously. The results demonstrate RetNet could be a strong successor to Transformers for LLMs, allowing larger, more capable models to be deployed efficiently.

Read the paper “Retentive Network: A Successor to Transformer for Large Language Models” for more.

Zhipu AI Raises Hundreds of Millions in B Round Led by Meituan

What's new: Chinese AI startup Zhipu AI completed a B round of funding worth hundreds of millions of RMB at a valuation of $500 million a few months ago, 36kr reported. The sole investor was Meituan Strategic Investment.

Who’s Zhipu AI: Zhipu AI was founded in 2019 out of Tsinghua University’s Knowledge Engineering Group (KEG). The startup has developed LLMs such as GLM-130B and open-sourced ChatGLM-6B, which can run inference on a single consumer-grade GPU. Its technology has reportedly shown promising results in optimizing Meituan’s ad algorithms.

In addition to LLMs, Zhipu AI has also created text-to-coding platform CodeGeeX and text-to-image platform CogView.

Why it matters: Despite the growth of China’s generative AI industry, Zhipu AI’s funding is one of the rare rounds that surpass $100 million.

This investment complements Meituan’s recent acquisition of Lightyear, another AI startup founded by Meituan Co-founder Huiwen Wang, underscoring the company’s growing ambitions in the AI sector. However, it remains uncertain how Meituan plans to manage these two competing startups under its umbrella.

AI Avatar App Miaoya Camera Turns Selfies into Stunning Portraits

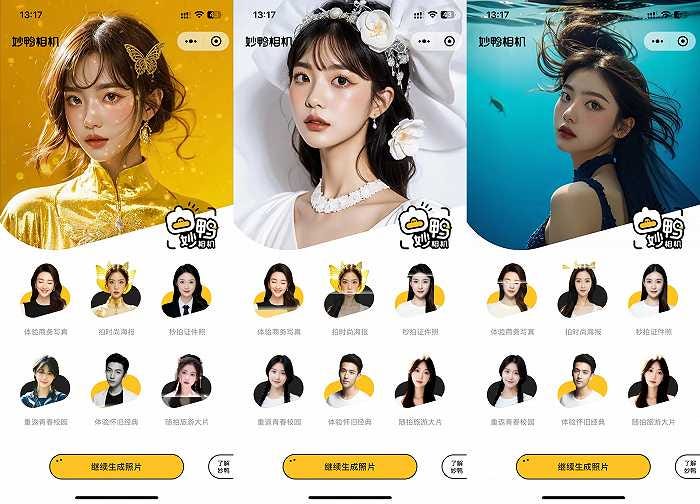

What's new: A new AI avatar app called Miaoya Camera (妙鸭相机) has gone viral on China’s Internet, with its servers crashing soon after launch due to high demand.

How it works: Miaoya Camera users need to upload 20 photos containing their face and pay 9.9 RMB to generate a personalized digital avatar using AI. The digital avatar will automatically be used to create different types of photos, including business headshots and portraits.

Miaoya Camera was developed by a startup backed by Alibaba Digital Media & Entertainment Group.

Why it matters: Miaoya Camera’s popularity shows the huge demand for AI-generated content among Chinese users. The app also carries potential privacy and policy risks, though the startup claims users' photos are used only to create the avatars and are then automatically deleted.

Weekly News Roundup

💯 Leading global market intelligence firm IDC released a technology assessment report on China-based LLMs, in which Baidu’s ERNIE secured the top spot in overall score. The report evaluates LLMs on three aspects: Product, Industry, and Service.

⛏ Huawei and Shandong Energy Group held a press conference to announce the first commercial use of Huawei’s Pangu large model in mining scenarios.

✈️ Ctrip released the first tourism-specific large language model, “Ctrip Wendao”, trained on 2 billion pieces of high-quality unstructured tourism data.

✍🏻 Tencent-backed digital publisher Yuewen Group released the first LLM in China’s online literature industry, “Yuewen Miaobi”, and an application product “Writer Assistant Miaobi Edition” based on this model.

🚛 JD.com officially launched its Yanxi LLM, along with two other models fine-tuned for logistics and health.

🫙 Huawei released two new AI storage products, OceanStor A310 and FusionCube A3000, supporting up to 400GB/s bandwidth.

🚙 Autonomous driving supplier HoloMatic Technology has completed the final round of its Series C financing, raising hundreds of millions of RMB. The round was co-led by the Science City (Guangzhou) Investment Group and GAC Capital.

Trending Research

Communicative Agents for Software Development

A new AI system called ChatDev is dramatically accelerating software development using natural language conversations with LLMs. By dividing the process into stages like design and testing, ChatDev allows AI agents to collaborate through chat to quickly complete projects. In experiments, it developed software in under 7 minutes for less than $1, while identifying bugs and maintaining efficiency. (Affiliations: Department of Computer Science and Technology at Tsinghua University, School of Computer Science at Beijing University of Posts and Telecommunications, Department of Computer Science at Brown University)

Researchers have developed a novel technique to estimate normals and global orientations for raw point clouds, which are widely used in geometry processing. Existing methods struggle with sparse, noisy data and complex shapes. The new approach defines requirements for acceptable winding numbers using a smooth objective function. It examines Voronoi vertices to balance winding number occurrences and align normals with outside poles. Experiments demonstrate improved performance on sparse, noisy clouds and intricate geometry, compared to previous techniques. (Affiliations: Shandong University, The University of Hong Kong, The University of Texas at Dallas, Qingdao University of Science and Technology, Texas A&M University)