What is China's GPT-3?

China's research orgs and tech giants are vying to build the best Chinese pre-trained language models.

Generative Pre-trained Transformer 3 (GPT-3) is a generative language model released last year by OpenAI, the San Francisco-based research powerhouse. The model has 175 billion parameters and was trained on a corpus filtered from roughly 45 TB of raw text, drawn mostly from Common Crawl, with additional web text, books, and Wikipedia. Introduced in the paper Language Models are Few-Shot Learners, which later won a Best Paper Award at NeurIPS 2020, GPT-3 achieved impressive performance on many NLP tasks, often from just a few examples in the prompt.

OpenAI later offered limited access to the GPT-3 API to researchers and enterprise developers, who used it to analyze customer feedback, generate stories for role-playing games, and even write code.

Meanwhile, Chinese tech companies and research institutes quickly jumped on the bandwagon to build their own versions of GPT-3 for Chinese NLP tasks. Because deep learning is relatively language-agnostic compared with conventional NLP techniques, multiple Chinese GPT-3-style models appeared in less than a year, each claiming state-of-the-art performance on Chinese NLP tasks.

BAAI’s Wu Dao 1.0

In late March, the state-sponsored Beijing Academy of Artificial Intelligence (BAAI) introduced Wu Dao (悟道), billed as the “first large-scale Chinese intelligent model system.” According to the lab, more than 100 scientists from Tsinghua University, Peking University, and other elite universities contributed to the project.

Wu Dao 1.0 comprises four models tailored for language understanding, multimodal pre-training, language generation, and biomolecular structure prediction.

Wen Yuan (文源) is a large-scale generative Chinese pre-trained language model with 2.6 billion parameters, trained on 100 GB of Chinese data. It reportedly handles NLP tasks such as conversation, essay generation, cloze tests, and language understanding. In essence it is not so different from GPT-3, just smaller and tailored for Chinese. The paper is on arXiv and the code is on GitHub.

Wen Lan (文澜) is a large-scale multimodal pre-training model, similar to OpenAI’s CLIP, that aims to understand inputs across images, text, and video. The model has 1 billion parameters and was trained on 50 million image-text pairs collected from open sources.
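The post describes Wen Lan only as CLIP-like, so what follows is a sketch of the CLIP-style objective, not Wen Lan's actual training code: within a batch, matching image and text embeddings are pulled together and mismatched pairs pushed apart with a symmetric cross-entropy (InfoNCE) loss. The embeddings are assumed to come from separate image and text encoders, and the temperature of 0.07 is an illustrative value. It is written in pure Python so it runs without NumPy.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length (zero vectors are not handled)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric InfoNCE loss over a batch of paired embeddings.

    Assumption: row i of image_embs describes the same item as row i of text_embs.
    """
    imgs = [l2_normalize(v) for v in image_embs]
    txts = [l2_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = cosine similarity(image i, text j) / temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]

    def cross_entropy(rows):
        # Mean of -log softmax(row)[i]; the matching pair sits on the diagonal.
        total = 0.0
        for i, row in enumerate(rows):
            m = max(row)  # subtract the max for numerical stability
            log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
            total += log_sum_exp - row[i]
        return total / len(rows)

    cols = [list(col) for col in zip(*logits)]  # text -> image direction
    return (cross_entropy(logits) + cross_entropy(cols)) / 2


aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

Each row of the similarity matrix has one positive and n - 1 negatives, so larger batches give the model more mismatched pairs to learn from.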

Wen Hui (文汇) is a pre-trained language model that focuses on cognitive and reasoning challenges. With 11.3 billion parameters, it reportedly achieves near-human performance on poetry generation in a Turing test. Its other major contributions include P-Tuning, a fine-tuning method that learns continuous prompt vectors and lets an autoregressive model outperform an autoencoder model on language understanding tasks, and an Inverse Prompting algorithm that improves generation quality. Unfortunately, I couldn’t find a paper with more technical details.
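P-Tuning's core idea is that, instead of hand-writing a discrete text prompt, you learn continuous prompt vectors by gradient descent. The sketch below is a deliberately tiny toy, not Wen Hui's code: a frozen linear "model" (`weights`) scores the mean of the sequence embeddings, and only the prompt vectors prepended to the input are trained. In the original method the prompt vectors are produced by a small prompt-encoder network and fed into a large language model; the function and parameter names here are hypothetical.

```python
import random


def p_tuning_toy(token_embs, weights, target, num_prompts=2, lr=0.5, steps=200, seed=0):
    """Train only continuous prompt vectors; weights and token_embs stay frozen.

    Hypothetical toy model: score = weights . mean(rows of [prompts; token_embs]),
    loss = (score - target) ** 2.
    """
    rng = random.Random(seed)
    dim = len(weights)
    # The only trainable parameters: num_prompts vectors of size dim.
    prompts = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_prompts)]
    seq_len = num_prompts + len(token_embs)

    def forward():
        rows = prompts + token_embs  # prompt vectors are prepended to the input
        pooled = [sum(r[d] for r in rows) / seq_len for d in range(dim)]
        return sum(w * p for w, p in zip(weights, pooled))

    losses = []
    for _ in range(steps):
        err = forward() - target
        losses.append(err * err)
        # d loss / d prompts[k][d] = 2 * err * weights[d] / seq_len for every prompt row
        for prompt in prompts:
            for d in range(dim):
                prompt[d] -= lr * 2 * err * weights[d] / seq_len
    return prompts, losses


token_embs = [[0.2, 0.1, -0.3], [0.0, 0.5, 0.4], [-0.1, 0.3, 0.2]]  # frozen inputs
weights = [1.0, -0.5, 0.25]                                         # frozen "model"
prompts, losses = p_tuning_toy(token_embs, weights, target=1.0)
print(f"loss {losses[0]:.3f} -> {losses[-1]:.2e}")
```

The loss falls even though the model weights never change, which is the property that makes prompt learning cheap: only a handful of vectors are stored per task.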

Wen Su (文溯) is a large-scale pre-trained model for biomolecular structure prediction. Built on BERT, it is trained on large volumes of biological data, including the 100 GB UniParc database. The lab hasn’t released test results.

Alibaba’s PLUG

In mid-April, Alibaba’s Damo Academy announced PLUG (Pre-training for Language Understanding and Generation), a 27-billion-parameter model that can generate many kinds of text, including poems and novels, and also handles question answering, translation, and even simple arithmetic. Damo Academy called it the world’s largest open-source Chinese text pre-training language model. Trained on more than 1 TB of data, PLUG briefly topped the classification leaderboard of the Chinese Language Understanding Evaluation (CLUE) benchmark.

PLUG builds on two of Damo Academy’s earlier models: StructBERT for NLU and PALM for NLG. StructBERT incorporates word- and sentence-level language structure into pre-training for deeper language understanding, while PALM jointly pre-trains an autoencoding and an autoregressive language model on a large unlabeled corpus.
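StructBERT's word-level objective, as I understand it from the StructBERT paper, corrupts the input by shuffling a short span of tokens (I believe spans of three in the paper's setup) and trains the model to restore the original order at those positions. Below is a minimal sketch of the data-preparation step only, with a hypothetical function name; the model, the loss, the sentence-level objective, and PALM's objectives are not shown.

```python
import random


def make_word_structural_example(tokens, k=3, rng=None):
    """Shuffle one random span of k tokens.

    Returns (corrupted_tokens, span_start, original_span); the original span is
    the reconstruction target for those positions.
    """
    if rng is None:
        rng = random.Random()
    if len(tokens) < k:
        raise ValueError("sequence shorter than span length")
    start = rng.randrange(len(tokens) - k + 1)
    span = tokens[start:start + k]
    shuffled = span[:]
    # Keep shuffling until the order actually changes (only possible if tokens differ).
    while shuffled == span and len(set(span)) > 1:
        rng.shuffle(shuffled)
    corrupted = tokens[:start] + shuffled + tokens[start + k:]
    return corrupted, start, span


tokens = ["我", "们", "今", "天", "去", "北", "京"]
corrupted, start, span = make_word_structural_example(tokens, rng=random.Random(1))
print(corrupted, start, span)
```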

The research team first trained a StructBERT encoder with 24 layers and a hidden size of 8,192 on 300B tokens. They then used this encoder to initialize the generation model, connected it to a decoder with 6 layers and a hidden size of 8,192, and trained the combined model on 100B tokens. The masked LM task was applied during the first 90% of this stage to build NLU capability and dropped for the final 10%. Training took 35 days on 128 A100 GPUs.
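PLUG's quoted size can be roughly sanity-checked from the layer counts above. The sketch assumes the standard Transformer layout (four attention projections, a feed-forward block 4× the hidden size, plus cross-attention in decoder layers) and ignores biases, layer norms, and embeddings, none of which the post specifies. It also encodes the 90/10 masked-LM schedule; the equal-weight sum in the hypothetical `plug_loss` is an illustrative choice, not Damo Academy's published recipe.

```python
def transformer_params(num_layers, hidden, ffn_mult=4, cross_attention=False):
    """Approximate weight count of a Transformer stack.

    Ignores biases, layer norms, and embeddings; ffn_mult=4 is an assumption.
    """
    attn = 4 * hidden * hidden               # Q, K, V and output projections
    if cross_attention:
        attn += 4 * hidden * hidden          # decoder attends to encoder outputs
    ffn = 2 * hidden * (ffn_mult * hidden)   # up- and down-projection
    return num_layers * (attn + ffn)


encoder = transformer_params(24, 8192)
decoder = transformer_params(6, 8192, cross_attention=True)
print(f"{(encoder + decoder) / 1e9:.1f}B")  # 25.8B


def use_mlm(step, total_steps, mlm_fraction=0.9):
    """True while the masked-LM task is still part of the objective."""
    return step < mlm_fraction * total_steps


def plug_loss(step, total_steps, lm_loss, mlm_loss):
    """Hypothetical combination: generation loss always, MLM loss only early on."""
    return lm_loss + (mlm_loss if use_mlm(step, total_steps) else 0.0)
```

The estimate lands at about 25.8B; the remaining ~1B presumably comes from terms this estimate ignores or from a feed-forward width other than 4× hidden, and the post doesn't say which.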

Here is the demo site: https://nlp.aliyun.com/portal#/BigText_chinese (you need an Aliyun account first). The only catch is that I couldn’t find a paper with more technical details, or the open-source code.

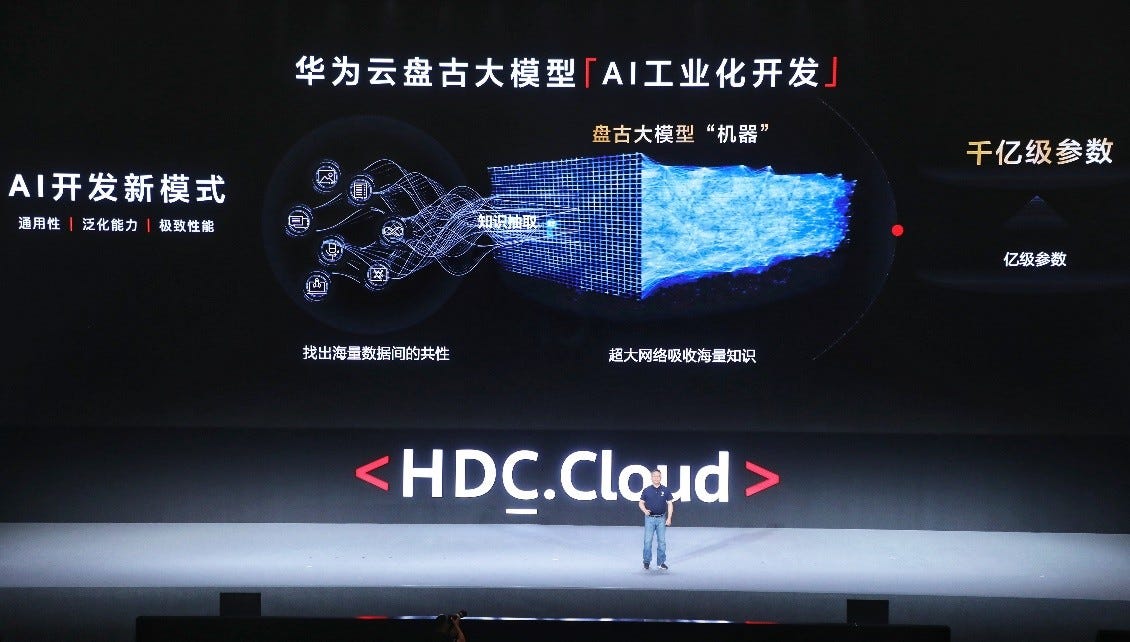

Huawei’s Pangu

At the annual Huawei Developer Conference in late April, Huawei Cloud dropped a bombshell: PanGu (盘古) NLP, billed as the world’s largest Chinese language understanding and generation model. As a tech-insider joke goes, Chinese companies like to name their technologies after characters and places from Chinese mythology (Pangu is the first living being in the universe, and Baidu’s AI chip Kunlun is named after a mythical mountain believed to be a Taoist paradise).

In a joint effort with Recurrent AI, a startup providing AI-enabled corporate services to improve sales efficiency, Huawei Cloud researchers trained PanGu NLP on 40 TB of Chinese text. The model achieved state-of-the-art results on the Chinese Language Understanding Evaluation (CLUE) benchmark, topping the overall, classification, and reading comprehension leaderboards.

According to Huawei’s Chief Architect of MindSpore, the PanGu NLP release includes a Transformer encoder-decoder model for language understanding and a Transformer decoder model for language generation. The latter, named PanGu-α, has up to 200 billion parameters, even more than GPT-3. PanGu-α was trained with MindSpore on a cluster of 2,048 Ascend 910 AI processors. Huawei researchers also filtered 1.1 TB of high-quality Chinese data out of 80 TB of raw data to pre-train the model.

Huawei researchers made no major changes to the model architecture beyond adding a query layer on top of the stacked Transformer layers. The main challenge was building the distributed training system. According to the paper, it combines five dimensions of parallelism: data, operator-level, pipeline, optimizer, and rematerialization. You can read the paper for more details.
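To make the five dimensions more concrete: three of them (data, operator-level, pipeline) decide how the processors are laid out, so their sizes must multiply to the device count; optimizer parallelism shards optimizer states across data-parallel replicas instead of duplicating them; rematerialization recomputes activations during the backward pass rather than storing them (not modelled here). The sketch uses a hypothetical 16 × 8 × 16 split of the 2,048 processors and assumes Adam-style optimizer states of 8 bytes per parameter. It is not Huawei's actual configuration or MindSpore's API.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ParallelConfig:
    data: int      # data-parallel replicas, each sees a different slice of the batch
    op: int        # operator-level parallelism: one layer's matrices split across devices
    pipeline: int  # pipeline stages: consecutive layers placed on different devices

    @property
    def world_size(self) -> int:
        return self.data * self.op * self.pipeline


def rank_to_coords(rank: int, cfg: ParallelConfig) -> Tuple[int, int, int]:
    """Map a global device rank to (pipeline_stage, data_replica, op_shard).

    The ordering (op varies fastest, pipeline slowest) is an arbitrary choice.
    """
    if not 0 <= rank < cfg.world_size:
        raise ValueError("rank out of range")
    op_shard = rank % cfg.op
    data_replica = (rank // cfg.op) % cfg.data
    stage = rank // (cfg.op * cfg.data)
    return stage, data_replica, op_shard


def optimizer_state_bytes(num_params: float, cfg: ParallelConfig,
                          shard_optimizer: bool, bytes_per_param: int = 8) -> float:
    """Optimizer-state memory per device (weights, gradients, activations excluded).

    bytes_per_param=8 assumes two fp32 Adam moments per parameter.
    """
    per_device = num_params / (cfg.op * cfg.pipeline)  # model split by op and pipeline
    if shard_optimizer:
        per_device /= cfg.data  # replicas split the states instead of each holding a copy
    return per_device * bytes_per_param


cfg = ParallelConfig(data=16, op=8, pipeline=16)  # hypothetical split
full = optimizer_state_bytes(200e9, cfg, shard_optimizer=False)
sharded = optimizer_state_bytes(200e9, cfg, shard_optimizer=True)
print(cfg.world_size, rank_to_coords(2047, cfg))
print(f"{full / 1e9:.2f} GB unsharded vs {sharded / 1e9:.2f} GB sharded")
```

Under these assumptions optimizer sharding cuts per-device optimizer memory by the data-parallel degree, which is why it matters at the 200B scale.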

Building a Chinese GPT-3 is strong evidence of an organization’s distributed training capability, software-hardware co-design, and cloud computing power. Just as GPT-3 is a major selling point for Microsoft Azure, a Chinese GPT-3 is great PR for companies looking to attract and retain cloud customers.

![Open AI's GPT-3: The Artificial Intelligence Creating all the Buzz | [x]cube LABS](https://substackcdn.com/image/fetch/$s_!zkkO!,w_1456,c_limit,f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fbucketeer-e05bbc84-baa3-437e-9518-adb32be77984.s3.amazonaws.com%2Fpublic%2Fimages%2F14fdeea5-8817-435e-8d07-83f8ba90ed9d_820x350.jpeg)