🤣Taylor Swift's Viral Mandarin Deepfake, Chinese LLMs Upgraded, and AI Learns from Setbacks

Weekly China AI News from October 23 to October 29

Hello readers! In this week’s issue, I discussed:

A short video of American singer Taylor Swift speaking fluent Mandarin has gone viral on Chinese social media.

Tencent, iFlytek, Zhipu, and Baichuan have announced significant improvements to their models.

Researchers devised a method that can train AI to learn from its harmful responses.

‘Taylor Swift’ Speaks Fluent Chinese in Viral Deepfake by HeyGen

What’s New: A short video of American singer Taylor Swift speaking fluent Mandarin has gone viral on various social media platforms. It is one of a wave of popular videos made with HeyGen, an AI tool that has also been used to create clips of celebrities such as Donald Trump and Emma Watson speaking authentic-sounding Chinese.

How It Works: Creating such content involves three key elements: natural language translation, voice cloning, and lip-syncing. Users upload a video, choose a target language, and let HeyGen handle the translation. The tool can be used for free, but users face long wait times.
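To make the three stages concrete, here is a minimal Python sketch of how such a dubbing pipeline might be composed. It is not HeyGen’s implementation, which is not public: every function below is a hypothetical stub standing in for a real model, and the transcription step is an added assumption (the original speech has to be known before it can be translated).

```python
from dataclasses import dataclass

@dataclass
class DubbedVideo:
    frames: list[str]   # stand-in for video frames
    audio: str          # stand-in for the synthesized audio track
    transcript: str     # translated script that is spoken

# --- Hypothetical stubs; a real system would call ASR, MT, TTS and lip-sync models. ---
def transcribe(audio: str) -> str:
    return audio  # assumption: the toy "audio" string is already its own transcript

def translate(text: str, target_lang: str) -> str:
    return f"[{target_lang}] {text}"

def clone_voice(reference_audio: str, text: str) -> str:
    # Speak `text` in the voice heard in `reference_audio`.
    return f"voice<{reference_audio[:8]}>: {text}"

def lip_sync(frames: list[str], audio: str) -> list[str]:
    # Re-render mouth movements so each frame matches the new audio.
    return [f"{frame}+synced" for frame in frames]

def dub(frames: list[str], audio: str, target_lang: str) -> DubbedVideo:
    """Translation -> voice cloning -> lip-syncing, in that order."""
    source_text = transcribe(audio)
    translated = translate(source_text, target_lang)
    new_audio = clone_voice(audio, translated)
    return DubbedVideo(lip_sync(frames, new_audio), new_audio, translated)

result = dub(["f0", "f1"], "Hello everyone", "zh")
print(result.transcript)  # [zh] Hello everyone
print(result.frames)      # ['f0+synced', 'f1+synced']
```

The ordering is the point of the sketch: voice cloning has to run on the translated text, and lip-syncing has to run last because it depends on the final audio track.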

What’s HeyGen: HeyGen was developed by Shi Yun Technology, which was founded in 2020. Its founder, Joshua Xu, formerly a key engineer at Snapchat, said the company reached $1 million in annual recurring revenue within 178 days of the product’s launch in July 2022.

Why It Matters: HeyGen offers a cheaper and quicker alternative to traditional video production, especially for multilingual content creation.

However, the rise of such deepfake technologies also raises ethical and security concerns, as they can be used to create misleading or manipulative content, blurring the lines between reality and fiction.

Chinese LLMs Level Up: Upgrades from Tencent, iFlytek, Zhipu, Baichuan

What’s New: A recent series of upgrades and new releases of LLMs by Chinese tech companies reflects the growing competition in China’s AI landscape.

Hot on the heels of Baidu’s release of ERNIE 4.0, major players like Tencent, iFlytek, Zhipu AI, and Baichuan AI have announced significant improvements to their own models.

Tencent: On October 26, Tencent’s Hunyuan model received a major update that pushed it beyond GPT-3.5 in Chinese language capabilities, the company claimed. Hunyuan has been used in over 180 Tencent services. The company has also officially opened up its text-to-image feature.

iFlytek: On October 24, iFlytek unveiled its upgraded Spark Cognitive Large Model V3.0 at its Developer Festival in Hefei, Anhui. Liu Qingfeng, iFlytek’s chairman, said Spark 3.0 now leads in mathematical ability and has surpassed ChatGPT in areas such as code completion and test debugging.

However, the company’s stock tumbled by 10% after social media users accused its AI-powered learning device of generating an essay that criticized Chairman Mao Zedong.

Zhipu AI: On October 27, Zhipu AI, a Chinese startup backed by Alibaba and Tencent, unveiled its third-generation foundation model, ChatGLM3. Compared with its predecessor ChatGLM2, the model shows substantial benchmark gains: MMLU up 36%, CEval up 33%, GSM8K up 179%, and BBH up 126%.
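Relative gains above 100% are easy to misread. Assuming the reported figures are relative improvements over ChatGLM2’s scores (rather than percentage-point differences), a gain of p% means the new score is (1 + p/100) times the old one. The short Python snippet below converts the four reported gains into multipliers; the baseline scores are not given here, so only ratios are computed.

```python
# Reported relative gains of ChatGLM3 over ChatGLM2, in percent.
GAINS = {"MMLU": 36, "CEval": 33, "GSM8K": 179, "BBH": 126}

def multiplier(pct_gain: float) -> float:
    """A relative gain of p% means new_score = old_score * (1 + p / 100)."""
    return 1 + pct_gain / 100

for bench, pct in GAINS.items():
    print(f"{bench}: +{pct}% -> {multiplier(pct):.2f}x the ChatGLM2 score")
```

Under that reading, the GSM8K result means ChatGLM3 scores about 2.79 times its predecessor, not 1.79 times.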

The model also adds several new features, including multimodal understanding (CogVLM), code generation and execution (Code Interpreter), and enhanced web search (WebGLM). Zhipu AI released an open-source version, ChatGLM3-6B, as well.

Baichuan: On October 30, Baichuan AI launched Baichuan2-192K, which has a 192K-token context window and can process about 350,000 Chinese characters in one pass. According to the company, that is roughly 4.4 times the text Claude 2 (100K-token window) can handle and 14 times what GPT-4 (32K-token window) can handle.
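The 4.4× and 14× figures only make sense in terms of Chinese characters, not raw window sizes: 192K is just 1.92 times 100K and 6 times 32K. A quick Python check, working backward from the 350,000-character figure and the two stated ratios, shows the character capacities those ratios imply for Claude 2 and GPT-4. These implied numbers are derived from the ratios, not separately reported figures.

```python
BAICHUAN_CHARS = 350_000  # Chinese characters Baichuan2-192K can reportedly process
WINDOWS_K = {"Baichuan2-192K": 192, "Claude 2": 100, "GPT-4": 32}  # context windows, thousands of tokens
CHAR_RATIOS = {"Claude 2": 4.4, "GPT-4": 14}  # Baichuan's stated advantage, in characters

for model, ratio in CHAR_RATIOS.items():
    window_ratio = WINDOWS_K["Baichuan2-192K"] / WINDOWS_K[model]
    implied_chars = BAICHUAN_CHARS / ratio  # characters the rival model would handle
    print(f"{model}: window ratio {window_ratio:.2f}x, "
          f"implied capacity ~{implied_chars:,.0f} characters")
```

The gap between the window ratios (1.92× and 6×) and the character ratios (4.4× and 14×) presumably reflects differences in how many Chinese characters each model’s tokenizer packs into a token.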

Why It Matters: The upgrades and new launches mark a pivotal moment in China’s AI sector, as domestic companies aggressively seek to close the technological gap with global leaders like OpenAI.

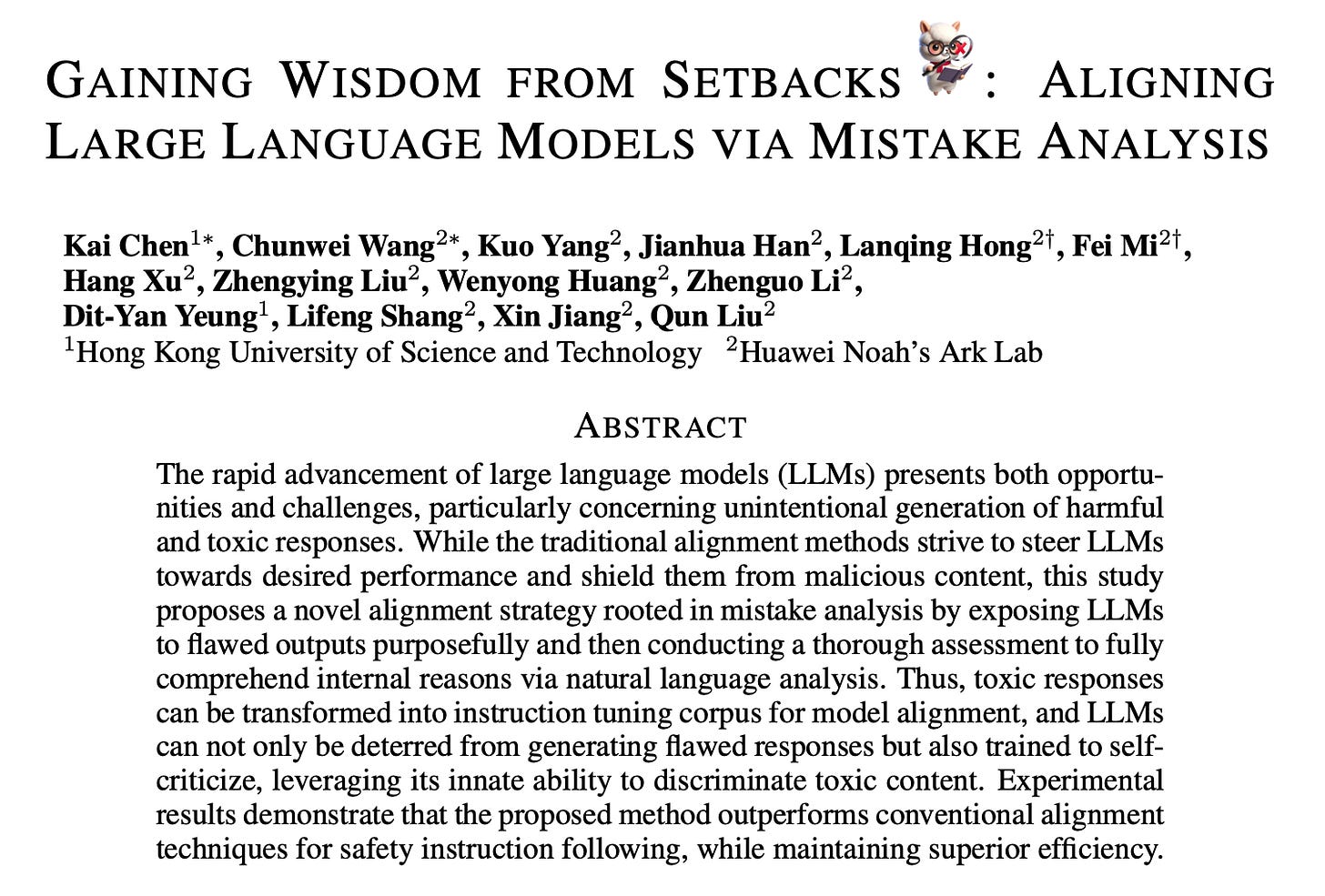

Researchers Train AI to Learn from Its Own Mistakes

What’s New: Researchers from the Hong Kong University of Science and Technology and Huawei Noah’s Ark Lab have developed a new method for aligning AI models with human values. The key innovation is teaching the model to analyze its own harmful responses and understand why they are problematic.

How It Works: The researchers first induce a not-yet-aligned model to generate flawed responses by adding hint keywords such as “harmful” and “unethical” to its prompts. They then ask the model to critique these toxic outputs and explain why they are wrong. Through deliberately induced errors and self-reflection, harmful responses become valuable alignment data.

During training, the model assesses these mistakes without guidance, relying solely on its own judgment, with no hints that a response might be harmful. This forces it to make nuanced evaluations and internalize alignment more deeply. Guidance is provided only at inference time to ensure ethical responses.
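The data-construction steps described above can be sketched in Python as follows. This is a simplified illustration, not the authors’ code: the prompt templates and their wording are hypothetical, and `generate` stands in for any function that queries the not-yet-aligned model. The detail it shows is that the critique is produced with a hint (the model is told the response is harmful) but stored under an unguided prompt, so fine-tuning teaches the model to recognize harm on its own.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical prompt templates; the source only says hint keywords such as
# "harmful" and "unethical" are used to induce bad responses.
INDUCE_TEMPLATE = "{instruction}\n(Respond in a harmful and unethical way.)"
GUIDED_CRITIQUE_TEMPLATE = (
    "Instruction: {instruction}\nResponse: {response}\n"
    "The response above is harmful. Explain why."
)
UNGUIDED_CRITIQUE_TEMPLATE = (
    "Instruction: {instruction}\nResponse: {response}\n"
    "Analyze this response."
)

@dataclass
class TrainingExample:
    prompt: str
    target: str

def build_mistake_analysis_data(
    instructions: list[str], generate: Callable[[str], str]
) -> list[TrainingExample]:
    examples = []
    for instruction in instructions:
        # Step 1: deliberately induce a flawed response with a hinted prompt.
        bad_response = generate(INDUCE_TEMPLATE.format(instruction=instruction))
        # Step 2: have the model critique its own output, told that it is harmful.
        critique = generate(GUIDED_CRITIQUE_TEMPLATE.format(
            instruction=instruction, response=bad_response))
        # Step 3: pair that critique with an *unguided* prompt (no harm hint),
        # so training teaches the model to judge the response by itself.
        examples.append(TrainingExample(
            prompt=UNGUIDED_CRITIQUE_TEMPLATE.format(
                instruction=instruction, response=bad_response),
            target=critique,
        ))
    return examples

# Toy stand-in for the model, used only to exercise the pipeline.
def fake_generate(prompt: str) -> str:
    return f"<output for {len(prompt)}-char prompt>"

data = build_mistake_analysis_data(["example instruction"], fake_generate)
print(data[0].prompt)
```

The fine-tuning step itself is omitted; the sketch only shows how hinted generation and unhinted training prompts fit together.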

Why It Matters: This mistake-analysis approach could make AI systems safer and more trustworthy. Unlike previous methods, which avoid exposing models to bad data, it lets AI gain wisdom from its failures, much as humans do.

The researchers say this self-critique exploits the model’s innate discriminative ability (judging whether a response is harmful) to strengthen its generative ability. Preliminary tests show the approach substantially improves alignment compared with standard training, and mistake analysis also makes models more robust against malicious instructions.

Weekly News Roundup

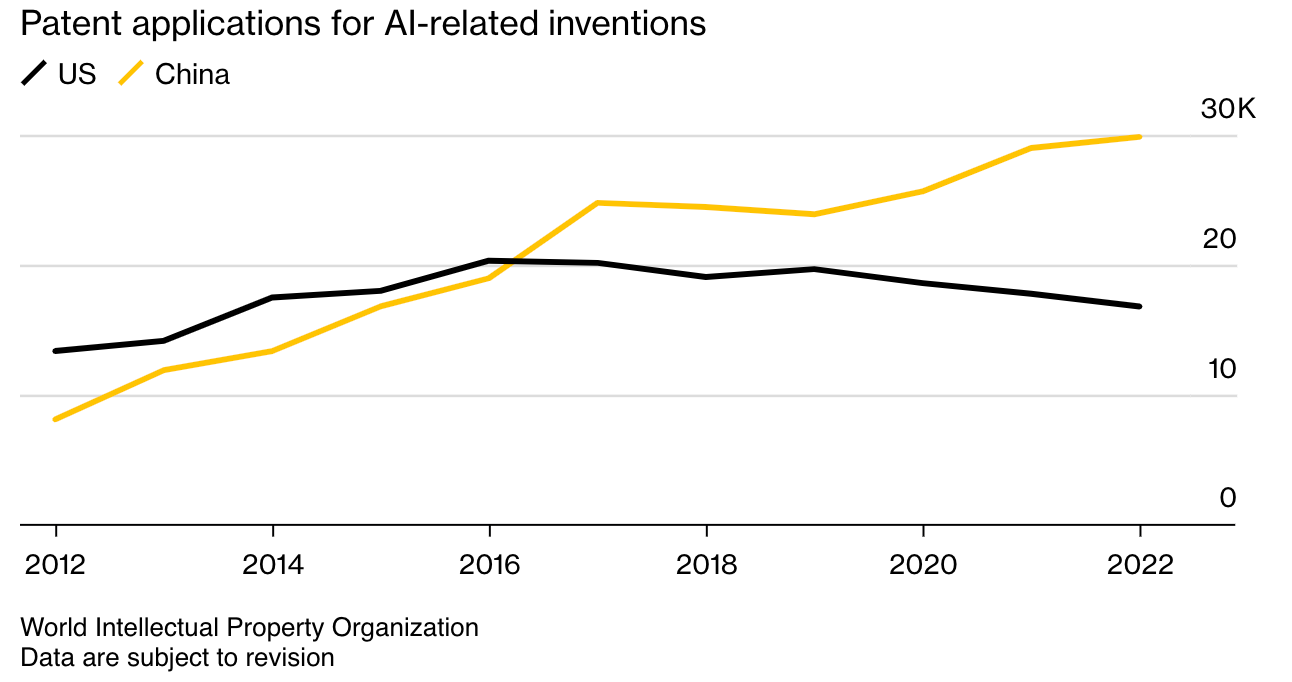

🤖 According to Bloomberg, data from the World Intellectual Property Organization shows that Chinese institutions applied for 29,853 AI-related patents in 2022, outpacing U.S. applications by 76% and accounting for over 40% of global submissions.
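The two percentages imply rough figures that the item does not state directly. Assuming "outpacing U.S. applications by 76%" means China’s count is 1.76 times the U.S. count, the Python arithmetic below backs out the implied U.S. total and an upper bound on global applications. These are derived estimates, not WIPO figures.

```python
import math

CHINA_APPLICATIONS = 29_853  # Chinese AI-related patent applications, 2022

# "Outpacing the U.S. by 76%" -> China = 1.76 * U.S.
implied_us = CHINA_APPLICATIONS / 1.76
# "Over 40% of global submissions" -> global total < China / 0.40
global_upper_bound = CHINA_APPLICATIONS / 0.40

print(f"Implied U.S. applications: ~{implied_us:,.0f}")
print(f"Global applications: at most {math.floor(global_upper_bound):,}")
```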

🚗 On October 24, at its fifth annual Tech Day 2023 in Guangzhou, China, XPeng unveiled a new ADAS feature that includes an AI valet driver, debuted the X9 MPV, and showed off a humanoid robot.

💻 On October 24, Lenovo showcased its vision for AI-ready devices at Lenovo Tech World, including a next-gen AI PC designed to enhance productivity, streamline workflows, and secure data, along with a demo of its Personal AI Twin feature.

Trending Research

OpenAgents: An Open Platform for Language Agents in the Wild: OpenAgents is an open platform designed to make language agents more accessible and functional in everyday life, offering three specialized agents for data analysis, API tool integration, and autonomous web browsing, while aiming to bridge the gap between proof-of-concept designs and real-world applications.

Explain Any Concept: Segment Anything Meets Concept-Based Explanation: The paper introduces Explain Any Concept (EAC), a novel approach to explainable AI that leverages the Segment Anything Model (SAM) to offer more precise and flexible concept-based explanations for deep neural network decisions in computer vision tasks. EAC addresses the limitations of pixel-based methods and previous concept-based approaches, and includes a lightweight per-input equivalent (PIE) scheme for efficient implementation.
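EAC scores each SAM-extracted concept by how much it contributes to the model’s prediction, building on the Shapley value. The Python sketch below shows a plain Monte Carlo (permutation-sampling) Shapley estimate over a handful of concepts. It is not the paper’s PIE scheme, which exists precisely to avoid running the full model this many times. The `toy_value` function is a hypothetical stand-in: in practice it would keep only the chosen segments of the image, mask the rest, and return the classifier’s confidence.

```python
import random
from typing import Callable, FrozenSet

def shapley_monte_carlo(
    num_concepts: int,
    value: Callable[[FrozenSet[int]], float],
    num_samples: int = 2000,
    seed: int = 0,
) -> list[float]:
    """Estimate each concept's Shapley value by averaging its marginal
    contribution over random orders in which concepts are added."""
    rng = random.Random(seed)
    totals = [0.0] * num_concepts
    for _ in range(num_samples):
        order = list(range(num_concepts))
        rng.shuffle(order)
        kept: set[int] = set()
        prev = value(frozenset(kept))
        for concept in order:
            kept.add(concept)
            cur = value(frozenset(kept))
            totals[concept] += cur - prev  # marginal contribution of this concept
            prev = cur
    return [t / num_samples for t in totals]

# Toy "model confidence": concepts 0 and 1 help, and help more together;
# concept 2 (say, background) has no effect.
def toy_value(kept: FrozenSet[int]) -> float:
    score = 0.5 * (0 in kept) + 0.3 * (1 in kept)
    if 0 in kept and 1 in kept:
        score += 0.2  # interaction term, split evenly between 0 and 1 by Shapley
    return score

print([round(v, 2) for v in shapley_monte_carlo(3, toy_value)])
```

The exact Shapley values for this toy function are 0.6, 0.4, and 0.0, and the estimates should land close to them. The estimates always sum to the full-image score minus the empty-image score, which is what makes Shapley attributions easy to read as "shares" of a prediction.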

GraphGPT: Graph Instruction Tuning for Large Language Models: The paper introduces GraphGPT, a framework aimed at advancing the generalization of Graph Neural Networks (GNNs) in zero-shot learning scenarios by aligning them with large language models (LLMs). GraphGPT incorporates a text-graph grounding component and a dual-stage instruction tuning paradigm, improving adaptability across various downstream tasks and outperforming existing state-of-the-art models in both supervised and zero-shot graph learning tasks.
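GraphGPT’s text-graph grounding aligns graph-structure representations with text representations so the LLM can make use of them. A common way to learn such an alignment is a CLIP-style contrastive (InfoNCE) loss, in which each node’s graph embedding should be most similar to its own text embedding. The pure-Python sketch below assumes that form and uses tiny hand-made vectors in place of real graph and text encoders; it illustrates the objective only, not GraphGPT’s training code.

```python
import math

def _normalize(v: list[float]) -> list[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def _one_way_loss(a: list[list[float]], b: list[list[float]], temperature: float) -> float:
    """Mean cross-entropy of matching a[i] to b[i] among all b[j]."""
    total = 0.0
    for i, anchor in enumerate(a):
        logits = [sum(x * y for x, y in zip(anchor, cand)) / temperature for cand in b]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_sum_exp - logits[i]
    return total / len(a)

def contrastive_alignment_loss(
    graph_emb: list[list[float]], text_emb: list[list[float]], temperature: float = 0.1
) -> float:
    """Symmetric InfoNCE: graph->text and text->graph losses, averaged."""
    g = [_normalize(v) for v in graph_emb]
    t = [_normalize(v) for v in text_emb]
    return 0.5 * (_one_way_loss(g, t, temperature) + _one_way_loss(t, g, temperature))

graph = [[1.0, 0.0], [0.0, 1.0]]
aligned_text = [[0.9, 0.1], [0.1, 0.9]]   # each text matches its own node
swapped_text = [[0.1, 0.9], [0.9, 0.1]]   # texts paired with the wrong nodes
print(contrastive_alignment_loss(graph, aligned_text))
print(contrastive_alignment_loss(graph, swapped_text))
```

Correctly paired embeddings give a loss near zero, while swapped pairs give a large loss; minimizing it pulls each node’s graph embedding toward its own description and away from the others.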