Microsoft Research Asia Scales Transformers to 1,000 Layers; BYD EV Armed with Baidu Self-Driving Tech; Future NLP Challenges

Weekly China AI News on March 14

News of the Week

Top Chinese Professor Explains Two Major Challenges Facing NLP

Dr. Maosong Sun, a professor in the Department of Computer Science and Technology at Tsinghua University, is one of China's leading natural language processing scholars. He recently wrote an article laying out the two major challenges facing natural language processing. I made a quick translation of his main points, and you can find his full article here.

Challenge One: How far can the strategy of maximizing scale (data, model size, computing power) go? And a related question: what should we do next? I believe the answers may come from both exploitation and exploration. From an exploitation perspective:

The algorithms used to build and train foundation models are relatively coarse-grained. Some of the “mistakes” made by foundation models are expected to be partially solved by improving algorithms.

Research on new methods that complement foundation models, such as few-shot learning, prompt learning, and adapter-based learning, should be expanded.

Should we filter a large amount of noise in big data?

Leaderboards are undoubtedly critical for model development, but they are not the only gold standard. Real-world applications are the ultimate gold standard.

Companies that develop foundation models should open their models up to academia for testing.

Foundation models urgently need a killer app to convincingly demonstrate their capabilities.
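The adapter-based learning mentioned among these exploitation points can be illustrated with a small sketch. It assumes the common bottleneck-adapter design (down-project, nonlinearity, up-project, add back the input) inserted into an otherwise frozen model; Prof. Sun does not specify a design, and the class name, sizes, ReLU, and zero initialization below are illustrative choices rather than any particular system's implementation.

```python
import numpy as np


class BottleneckAdapter:
    """Illustrative bottleneck adapter: down-project, ReLU, up-project, residual add.

    Only these small matrices and biases would be trained; the foundation
    model's own weights stay frozen. Sizes and init are assumptions.
    """

    def __init__(self, d_model: int, bottleneck: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w_down = rng.normal(0.0, 0.02, size=(d_model, bottleneck))
        self.b_down = np.zeros(bottleneck)
        # Zero-initialized up-projection: the adapter starts as an identity map,
        # so inserting it does not change the frozen model's outputs at first.
        self.w_up = np.zeros((bottleneck, d_model))
        self.b_up = np.zeros(d_model)

    def __call__(self, h: np.ndarray) -> np.ndarray:
        z = np.maximum(h @ self.w_down + self.b_down, 0.0)  # ReLU in the bottleneck
        return h + z @ self.w_up + self.b_up  # residual connection

    def num_params(self) -> int:
        """Number of trainable parameters added by this adapter."""
        return sum(p.size for p in (self.w_down, self.b_down, self.w_up, self.b_up))


adapter = BottleneckAdapter(d_model=768, bottleneck=64)
h = np.ones((4, 768))  # a batch of 4 hidden states from a frozen layer
assert np.allclose(adapter(h), h)  # identity at initialization
print(adapter.num_params())  # 99136
```

Only about 99K parameters per adapter would be trained in this configuration, versus the millions in each frozen Transformer layer, which is the main appeal of this family of methods.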

From an exploration perspective, we should study problems like:

Why do large-scale pre-trained language models exhibit deep double descent (which seems to defy the golden rule of machine learning that “data complexity and model complexity should roughly match”)?

Why does the “foundation model” have few-shot or even zero-shot learning abilities? How are these abilities acquired? Are they emergent phenomena of complex giant systems?

Why does prompt learning work? Does it imply that several functional partitions emerge spontaneously within the foundation model, and that each learned prompt simply provides the key that activates one of them?

If so, what might the distribution of these functional partitions look like? And since the core training algorithm of the “foundation model” is extremely simple (a language model or cloze model), what does this imply?
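To make “extremely simple” concrete, here is a rough sketch of how training targets are built for the two objectives Prof. Sun names: a left-to-right language model and a cloze (masked) model. It assumes whitespace tokenization and a simplified masking scheme; real systems use subword tokenizers, and BERT-style masking also swaps some selected tokens for random or unchanged ones. The function names are hypothetical.

```python
import random

MASK = "[MASK]"


def causal_lm_pairs(tokens):
    """Language-model objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def cloze_example(tokens, mask_rate=0.15, seed=0):
    """Cloze (masked-LM) objective: hide some tokens, predict them from both sides.

    Simplified assumption: every selected position is replaced by [MASK].
    Returns the corrupted sequence and a {position: original_token} target map.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(mask_rate * len(tokens)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    corrupted = list(tokens)
    for p in positions:
        corrupted[p] = MASK
    targets = {p: tokens[p] for p in positions}
    return corrupted, targets


sentence = "the box was in the pen".split()
print(causal_lm_pairs(sentence)[2])  # (['the', 'box', 'was'], 'in')
corrupted, targets = cloze_example(sentence, mask_rate=0.3)
print(corrupted, targets)
```

Both objectives need nothing but raw text, which is what makes the capabilities listed above so puzzling.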

Challenge Two: Becoming wiser. The following passage poses a typical word sense disambiguation problem for machine translation: Little John was looking for his toy box. Finally, he found it. The box was in the pen. John was very happy.

“Pen” usually means “a writing implement with a point from which ink flows,” but it can also mean “an enclosure for confining livestock.” The second sense clearly fits the context better, yet Google Translate still returns “盒子在笔里” (“the box is inside the writing pen”).
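Why the second sense wins can be sketched as a toy rule. Reading “The box was in the pen” correctly hinges on one piece of world knowledge, namely that a toy box is far larger than a writing pen, which is exactly the kind of formal knowledge discussed below. The size table, sense labels, and containment rule here are hypothetical, hand-written assumptions for illustration, not drawn from Wikidata or any real knowledge base.

```python
# Hypothetical "world knowledge": rough typical sizes in centimetres.
# These numbers are illustrative assumptions, not from any real knowledge base.
TYPICAL_SIZE_CM = {
    "toy box": 50,
    "pen/writing_implement": 14,
    "pen/livestock_enclosure": 400,
}

# Hypothetical sense inventory for the ambiguous word.
SENSES = {
    "pen": ["pen/writing_implement", "pen/livestock_enclosure"],
}


def can_contain(container: str, item: str) -> bool:
    """Commonsense rule: X can be 'in' Y only if Y is larger than X."""
    return TYPICAL_SIZE_CM[container] > TYPICAL_SIZE_CM[item]


def disambiguate_in(item: str, ambiguous_word: str) -> list:
    """Return senses of `ambiguous_word` compatible with '<item> was in the <word>'."""
    return [s for s in SENSES[ambiguous_word] if can_contain(s, item)]


print(disambiguate_in("toy box", "pen"))  # ['pen/livestock_enclosure']
```

The rule itself is trivial; the hard part Prof. Sun points to is acquiring such knowledge systematically and at scale rather than writing it by hand.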

Two major hurdles hinder research on knowledge-rich, reasoning-capable AI that learns from small data. First, formal knowledge bases and world knowledge bases are still seriously lacking. Knowledge graphs like Wikidata may seem large, but on closer inspection the scope of knowledge they cover is still very limited. In fact, Wikidata has obvious structural deficiencies: it mostly contains static attribute knowledge about entities, with almost no formal description of the actions, behaviors, states, and logical relationships of events.

Second, the ability to systematically acquire formal knowledge such as “actions, behaviors, states, and event logic relationships” is still seriously lacking. Large-scale syntactic-semantic analysis of open text such as Wikipedia is the way to go. Unfortunately, this level of syntactic and semantic analysis is not yet achievable (although deep learning methods have brought considerable progress in recent years).

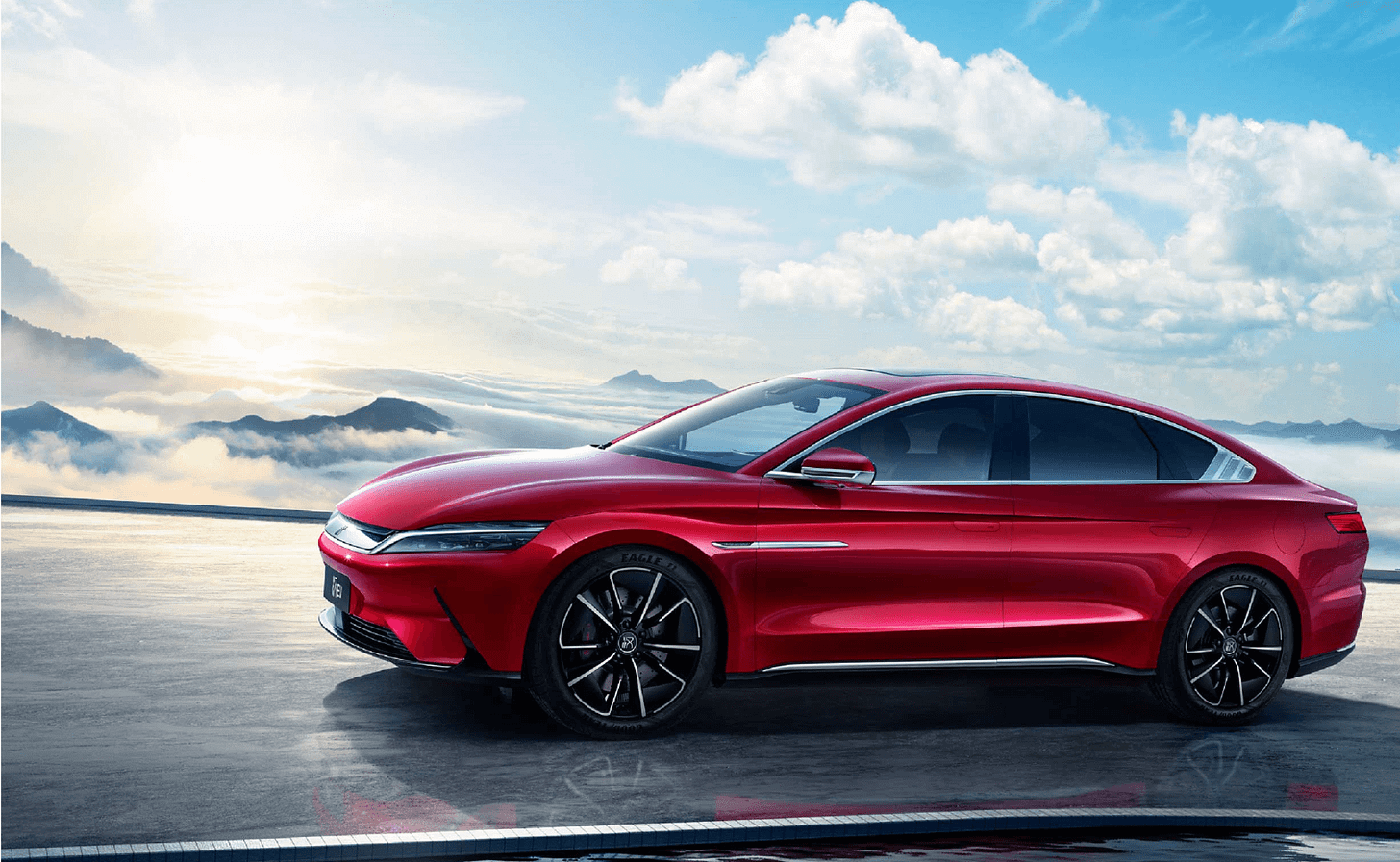

BYD Arms Electric Vehicle with Baidu Self-Driving Tech; Neta Chooses Huawei

BYD (Build Your Dreams), China’s leading new energy vehicle maker, has chosen Baidu, China’s search engine giant and AI specialist, to supply in-car self-driving technology for several of its popular models.

Baidu CEO Robin Li said on the company’s latest earnings call on March 1: “Recently, we received a nomination letter from BYD, one of China’s largest automotive and technology companies, intending to adopt ASD, in particular ANP and AVP, for multiple popular models.” BYD hasn’t made any official comment on the potential cooperation.

The BYD-Baidu alliance is seen as a strong challenger to Tesla and its popular advanced driver-assistance systems, Autopilot and Full Self-Driving. Last year, BYD sold a total of 603,783 new energy vehicles worldwide, including passenger cars, buses, forklifts, and trucks, while Tesla delivered 936,172 vehicles globally.

Baidu’s self-driving technology, named Apollo Self-Driving (ASD), is a lightweight, camera-based solution that can navigate urban roads and highways and park automatically. It is rooted in Baidu’s well-known Apollo robotaxi program.

Neta, a Chinese EV startup selling low-cost, Tesla-like cars, also unveiled its ADAS, named TA Pilot. The system is built on Huawei hardware, including two 96-line lidars. Hozon, the owner of the Neta brand, is eyeing a Hong Kong IPO.

Papers & Projects

Researchers from Microsoft Research Asia proposed a new technique that can scale Transformers up to 1,000 layers. On a multilingual benchmark with 7,482 translation directions, their 200-layer model with 3.2B parameters outperforms the 48-layer state-of-the-art model with 12B parameters by 5 BLEU points, which suggests a promising direction for scaling. You can read more about the paper on arXiv.
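The article does not name the technique; the paper is “DeepNet: Scaling Transformers to 1,000 Layers,” and its core idea, DeepNorm, up-weights the residual shortcut before layer normalization, computing LN(αx + G(x)) for each sublayer G, while scaling down the initialization of some sublayer weights by β. A minimal sketch follows, assuming the encoder-only/decoder-only constants as reported in the paper (worth double-checking against the arXiv version). The 200-layer translation model is encoder-decoder and uses different formulas, and the random linear map standing in for an attention or feed-forward sublayer is a placeholder.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Layer normalization over the last axis (no learned scale/shift, for brevity)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def deepnorm_constants(num_layers: int) -> tuple:
    """(alpha, beta) for an encoder-only or decoder-only stack, as reported in
    the DeepNet paper (assumption: encoder-decoder models use other formulas)."""
    alpha = (2 * num_layers) ** 0.25   # residual up-weighting
    beta = (8 * num_layers) ** -0.25   # initialization down-scaling
    return alpha, beta


def deepnorm_residual(x: np.ndarray, sublayer, alpha: float) -> np.ndarray:
    """Post-LN residual with the shortcut scaled by alpha: LN(alpha * x + G(x))."""
    return layer_norm(alpha * x + sublayer(x))


rng = np.random.default_rng(0)
d_model, num_layers = 16, 1000
alpha, beta = deepnorm_constants(num_layers)
# Placeholder sublayer G: one random linear map whose init is scaled by beta.
w = rng.normal(0.0, beta / np.sqrt(d_model), size=(d_model, d_model))
x = rng.normal(size=(2, d_model))
y = deepnorm_residual(x, lambda h: h @ w, alpha)
print(round(alpha, 2), round(beta, 3))  # 6.69 0.106
```

Intuitively, a large α keeps each layer's update small relative to the shortcut, which is what lets very deep post-LN stacks train stably.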

AlphaFold, an AI system developed by DeepMind that predicts a protein’s 3D structure from its amino acid sequence, is a scientific breakthrough that advances the role of AI in fundamental research. However, training and running inference with this Transformer-based model is time-consuming and expensive. A team of researchers from the National University of Singapore (NUS), HPC-AI Technology, Helixon, and Shanghai Jiao Tong University proposed FastFold, an efficient implementation of AlphaFold that reduces overall training time from 11 days to 67 hours, roughly a 4x speedup. The code is open-sourced on GitHub.

Rising Startups

Pony.ai (小马智行), a self-driving startup with operations in China and the U.S., has completed the first close of its Series D financing at a valuation of $8.5 billion. The proceeds will be used to expand hiring, increase investment in research and development, scale up global testing of robotaxis and robotrucks on an ever-growing fleet, enter into strategic partnerships, and accelerate progress toward mass production and large-scale commercial deployment.

Data Grand (达观数据), an AI-powered robotic process automation (RPA) startup, has raised RMB 580 million ($91.76 million) in its Series C round. Founded in 2015, the Shanghai-based startup offers efficient, convenient, and controllable office automation robots and solutions for enterprise business scenarios, built on AI technologies such as NLP and OCR.

AI Rudder (赛舵智能), a startup that develops AI-powered voice solutions to improve B2C communications, has raised $50 million in its Series B round. Founded in 2019, the Shanghai- and Singapore-based company provides voice AI assistants that take on high-volume, repetitive tasks.

Rest of the World

The U.S. Department of Transportation’s National Highway Traffic Safety Administration (NHTSA) issued a first-of-its-kind final rule that allows fully autonomous vehicles to be sold and operated without manual driving controls such as steering wheels or brake pedals. “For vehicles designed to be solely operated by an ADS, manually operated driving controls are logically unnecessary,” the agency said.

In a Nature paper, DeepMind introduced Ithaca, the first deep neural network that can restore the missing text of damaged ancient Greek inscriptions, identify their original location, and help establish when they were created. According to DeepMind, Ithaca achieves 62% accuracy in restoring damaged texts and 71% accuracy in identifying their original location, and can date texts to within 30 years of their ground-truth date ranges.

Reuters reported that Ukraine's defense ministry began using Clearview AI’s facial recognition technology on Saturday, according to the company's chief executive, after the U.S. startup offered to help uncover Russian assailants, combat misinformation, and identify the dead. Clearview AI has drawn controversy for scraping public images from social media to train the facial recognition software it provides for law enforcement investigations.