🦙Llama 3.1's Impact on China, Kuaishou's AI Video Generator Goes Global, and Alibaba Backs $2.8B AI Firm

Weekly China AI News from July 22nd, 2024 to July 28th, 2024

Hi, this is Tony! Welcome to this week’s issue of Recode China AI, a newsletter for China’s trending AI news and papers.

Three things to know

Meta released Llama 3.1, which includes a 405-billion-parameter model. What does it mean for China’s AI industry?

Kuaishou’s global launch of its AI video generator, Kling AI, and Zhipu AI’s introduction of Ying, show China’s progress in AI video generation.

Alibaba, Tencent, and state-backed AI funds poured $690 million into the $2.8 billion AI firm Baichuan AI.

Meta Releases Llama 3.1 405B. What Does That Mean for China?

What’s New: Last week, Meta released Llama 3.1, its long-awaited foundation model series. The largest version features a staggering 405 billion parameters and was trained on 15 trillion tokens using 16,000 Nvidia H100 GPUs. Meta said Llama 3.1 405B can rival some of the most capable closed models, including GPT-4o and Claude 3.5 Sonnet.

In this post, I want to briefly discuss its implications for China’s AI community.

Paper Matters: There is nothing particularly groundbreaking about Llama 3.1 itself; its performance gains over Llama 2 come largely from 10 times more data and 50 times more compute. The gold mine is the 92-page technical paper, The Llama 3 Herd of Models, which serves as a comprehensive guide for LLM researchers and engineers.

Not Good at Chinese: Llama 3.1 405B officially supports eight languages, including English, French, and German, but not Mandarin Chinese. Many users found that, like Llama 2 and Llama 3 before it, the model is weak in Chinese. This gap gives Chinese AI developers an opportunity to fine-tune the model on high-quality Chinese datasets.

Too Large for Inference: With 405 billion parameters, Llama 3.1 405B is too large for practical inference without quantization, a technique that stores model weights at lower numerical precision to cut memory use. Running it locally in 16-bit precision requires more than 10 Nvidia A100 GPUs, a cost out of reach for individual developers and small companies.
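A back-of-the-envelope calculation shows where the “more than 10 A100s” figure comes from. This is a minimal sketch that assumes 80 GB A100 cards and counts only the memory needed to hold the weights; real deployments also need room for the KV cache and activations, so actual requirements are higher. The function names are hypothetical.

```python
import math

# Assumptions (not from the article): 80 GB A100 cards, weights only.
# KV cache, activations, and framework overhead are ignored.
PARAMS = 405e9
GPU_MEMORY_GB = 80

# Bytes needed per parameter at common precisions.
BYTES_PER_PARAM = {
    "BF16/FP16": 2.0,
    "INT8": 1.0,
    "INT4": 0.5,
}


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


def min_gpus(memory_gb: float, gpu_memory_gb: float = GPU_MEMORY_GB) -> int:
    """Smallest whole number of GPUs whose combined memory fits the weights."""
    return math.ceil(memory_gb / gpu_memory_gb)


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        mem = weight_memory_gb(PARAMS, nbytes)
        print(f"{precision:>9}: {mem:6.1f} GB -> at least {min_gpus(mem)} x A100 80GB")
```

Under these assumptions, 16-bit weights alone take 810 GB, or at least 11 cards, while 8-bit and 4-bit quantization cut that to 6 and 3 cards. That gap is why quantization matters so much for a model of this size.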

Smaller variants like Llama 3.1 8B and 70B are more accessible and practical for these users. The good news is that Llama 3.1 also allows synthetic data generation, a key technique for improving models’ reasoning during post-training, and supports distillation, which trains a smaller model to reproduce a larger one’s behavior without significantly sacrificing capabilities.
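The article does not describe how Meta or Chinese developers would distill Llama 3.1. For readers unfamiliar with the idea, here is the classic soft-target distillation loss from Hinton et al. (2015), one common way to train a student model to match a teacher’s output distribution. It is a plain-Python sketch, not Meta’s recipe; the logits are made-up values over a hypothetical three-token vocabulary, and the temperature of 2.0 is an arbitrary illustrative choice.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL between softened teacher and student distributions, scaled by T^2
    so gradient magnitudes stay comparable across temperatures."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    return temperature ** 2 * kl_divergence(p_teacher, p_student)


if __name__ == "__main__":
    # Hypothetical next-token logits over a 3-token vocabulary.
    teacher = [4.0, 1.0, 0.5]
    close_student = [3.5, 1.2, 0.6]  # ranks tokens like the teacher
    far_student = [0.5, 1.0, 4.0]    # prefers a different token
    print(distillation_loss(teacher, close_student))  # small
    print(distillation_loss(teacher, far_student))    # much larger
```

Training the student to minimize this loss pushes its distribution toward the teacher’s, including the relative probabilities of “wrong” tokens, which carry more information than a single hard label.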

Who Benefits from Llama 3.1: Chinese developers are enthusiastic about Llama 3.1, which gives them access to some of the world’s most advanced open models. Major Chinese cloud service providers, including Alibaba Cloud and Tencent Cloud, have quickly integrated support for Llama 3.1.

For Chinese firms selling LLMs through private cloud or local deployment, Llama 3.1’s open access could be a game-changer. In 2023, the cost of local LLM deployment in China ranged from millions to tens of millions of RMB. This business model is now under threat.

Geopolitical Implications: Llama 3.1’s open-source release sparked a debate in the U.S. about whether this approach benefits America in its “AI competition” with China.

Meta CEO Mark Zuckerberg advocates for “decentralized and open innovation,” arguing that striving to stay 5-10 years ahead of China in AI is unrealistic.

Sam Altman, CEO of OpenAI, wrote in the Washington Post that open-source models should be accessible to nations joining a U.S.-led AI coalition.

In May, a bipartisan group of U.S. lawmakers proposed legislation to control AI model exports, which could restrict access to open-source models.

A recent New York Times article argued that China is closing the AI gap with the U.S. through an open-source approach. U.S. developers have also built on Chinese open-source models, a trend that may continue if U.S. regulators restrict open-source projects.

Kuaishou’s Video Generator Kling AI Goes Global; Zhipu AI Introduces Ying

What’s New: On July 24, Kuaishou, China’s second-largest short video platform, announced the global launch of its video generation model, Kling AI, beating OpenAI’s Sora to a public release. Users can now sign up with an email address, with no waitlist.

How It Works: Since its release on June 6, Kling AI has received over one million applications and granted access to more than 300,000 users. Kuaishou is now opening the beta to users globally and introducing a subscription model. Daily logins earn users 66 “Inspiration Points,” redeemable for platform features or premium services, such as generating six videos for free. For RMB 666 (~$92) per month, users can generate up to 800 videos.

Alongside the global launch, the Kling AI base model received multiple upgrades, including better video quality and motion performance.

Zhipu AI Follows: On July 26, Chinese AI startup Zhipu AI introduced its new AI video generation model, Ying. The model supports text-to-video and image-to-video generation and is now available to all users through Zhipu AI’s chatbot, with no waitlist.

Tell Me More: Ying is powered by CogVideoX, Zhipu’s latest video generation model and a successor to CogVideo, the 9B-parameter transformer open-sourced two years ago. Like Sora, CogVideoX uses a diffusion transformer (DiT) architecture and can generate 6-second videos at a resolution of 1440x960.

Ying offers two modes:

Text-to-Video: Users can create videos of a dog dancing on fingertips, dolphins flying into space, or a sparkling universe with a few descriptive sentences.

Image-to-Video: Users can animate old photos or have characters in famous paintings and movie stills perform imaginative actions.

Why It Matters: China’s AI video generation market is projected to grow from RMB 8 million ($1.1 million) in 2021 to RMB 9.3 billion ($1.3 billion) by 2026. Such rapid projected growth suggests significant potential for AI to disrupt traditional video production.
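To put that projection in perspective, the implied compound annual growth rate (CAGR) can be worked out directly from the two figures. This sketch only does arithmetic on the projection quoted above over the five-year span from 2021 to 2026; it is not an independent forecast.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the market projection, in RMB.
start_2021 = 8e6     # RMB 8 million
end_2026 = 9.3e9     # RMB 9.3 billion

rate = cagr(start_2021, end_2026, years=2026 - 2021)
print(f"Implied CAGR: {rate:.0%}")  # roughly 310% per year
```

In other words, the projection assumes the market roughly quadruples every year for five years straight.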

Despite the hype, user access to these tools remains limited, and many are still in testing. AI video generation models also face major challenges, such as low accuracy and limited coherence, and most can generate only 2-3 seconds of video.

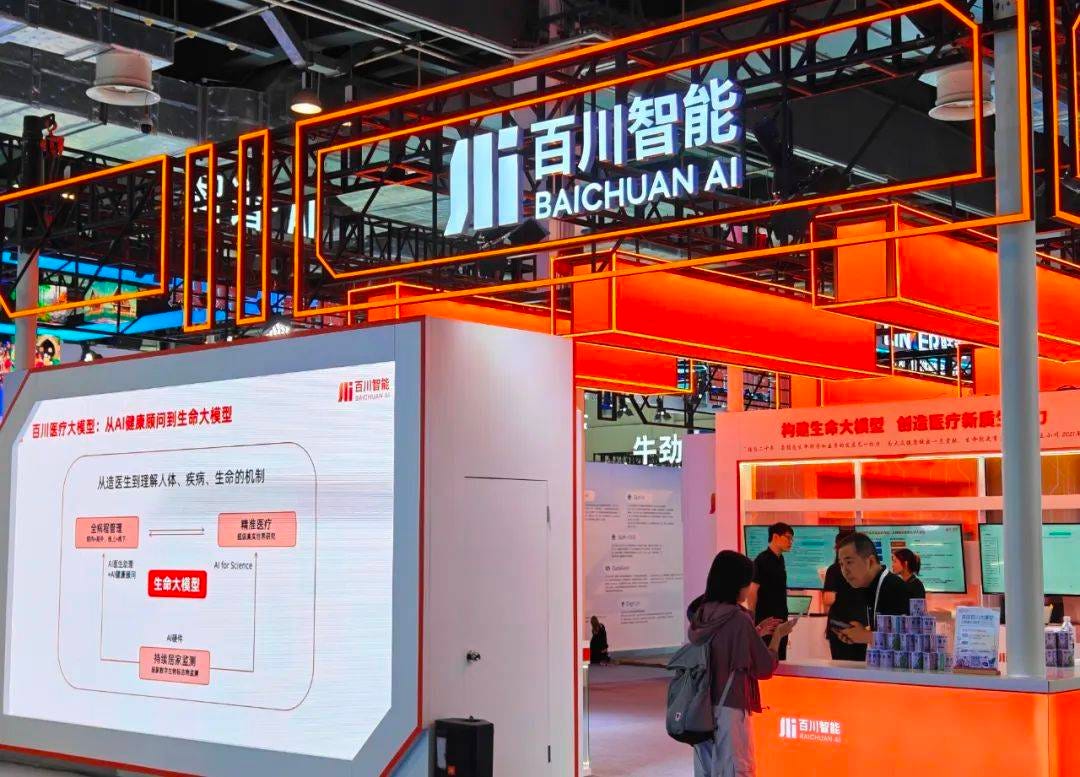

Alibaba, Tencent, and State-Backed Funds Invest in Baichuan, Now Valued at $2.8 Billion

What’s New: Baichuan AI, a Chinese AI startup founded by former Sogou CEO Wang Xiaochuan, raised RMB 5 billion ($690 million) in its Series A funding round. The funding lifts Baichuan’s valuation to RMB 20 billion ($2.8 billion), placing it alongside Moonshot AI and Zhipu AI among China’s three most valuable LLM startups.

The massive capital requirements for LLM startups have deterred many Chinese venture capitalists. Instead, key investors in Baichuan AI’s Series A funding include Chinese tech giants Alibaba, Xiaomi, and Tencent, as well as state-backed AI funds from Beijing, Shanghai, and Shenzhen.

A Quick Intro: Baichuan AI provides its LLMs to enterprise clients through public cloud APIs. The startup has launched 12 models, both open-source and closed, including Baichuan-7B/13B, Baichuan2-192K, Baichuan-NPC, and Baichuan 4.

In addition to these models, Baichuan aims to commercialize a super AI app. In May, the startup released Bai Xiaoying, a chatbot enhanced with search capabilities.

Beyond chatbots, Baichuan AI is also exploring healthcare applications. The company unveiled an AI health advisor that reportedly outperforms GPT-4 on the USMLE, the U.S. medical licensing exam.

Baichuan AI has established five levels to track the progress of its medical LLMs, with ambitions to achieve L3-level automated healthcare management by next year.

Weekly News Roundup

Nvidia is reportedly creating a version of its cutting-edge Blackwell B200 GPU that adheres to U.S. export restrictions for the Chinese market. The new chip, tentatively named the “B20,” is expected to be distributed through Nvidia’s collaboration with Inspur, a major Chinese distributor. Shipments are slated to begin in the second quarter of 2025. (Reuters)

The Communist Party of China (CPC) called for establishing an AI safety supervision and regulatory system in its Third Plenum decision, a significant signal that AI safety will be a national policy priority over the next five years. (Matt Sheehan, a fellow at the Carnegie Endowment for International Peace, tweeted his take.)

A recent MIT Technology Review article outlines various methods for international users to access Chinese LLM chatbots. The story pointed out that some models are available without a Chinese phone number and that open-source platforms offer alternative means of access. (MIT Tech Review)

A controversy recently erupted over Fanqie (Tomato) Novel, ByteDance’s novel reading platform, whose AI Training Supplementary Protocol required authors to allow their works to be used for AI training. Widespread protests from authors forced Fanqie Novel to backtrack and let authors opt out of the protocol. Despite this, many authors remain skeptical and worried about the future of their profession.

Trending Research

LivePortrait: Efficient Portrait Animation with Stitching and Retargeting Control

Researchers from Kuaishou and Fudan University open-sourced LivePortrait, a faster approach to animating still portraits: it transfers motion from a driving video while preserving the original person's appearance.

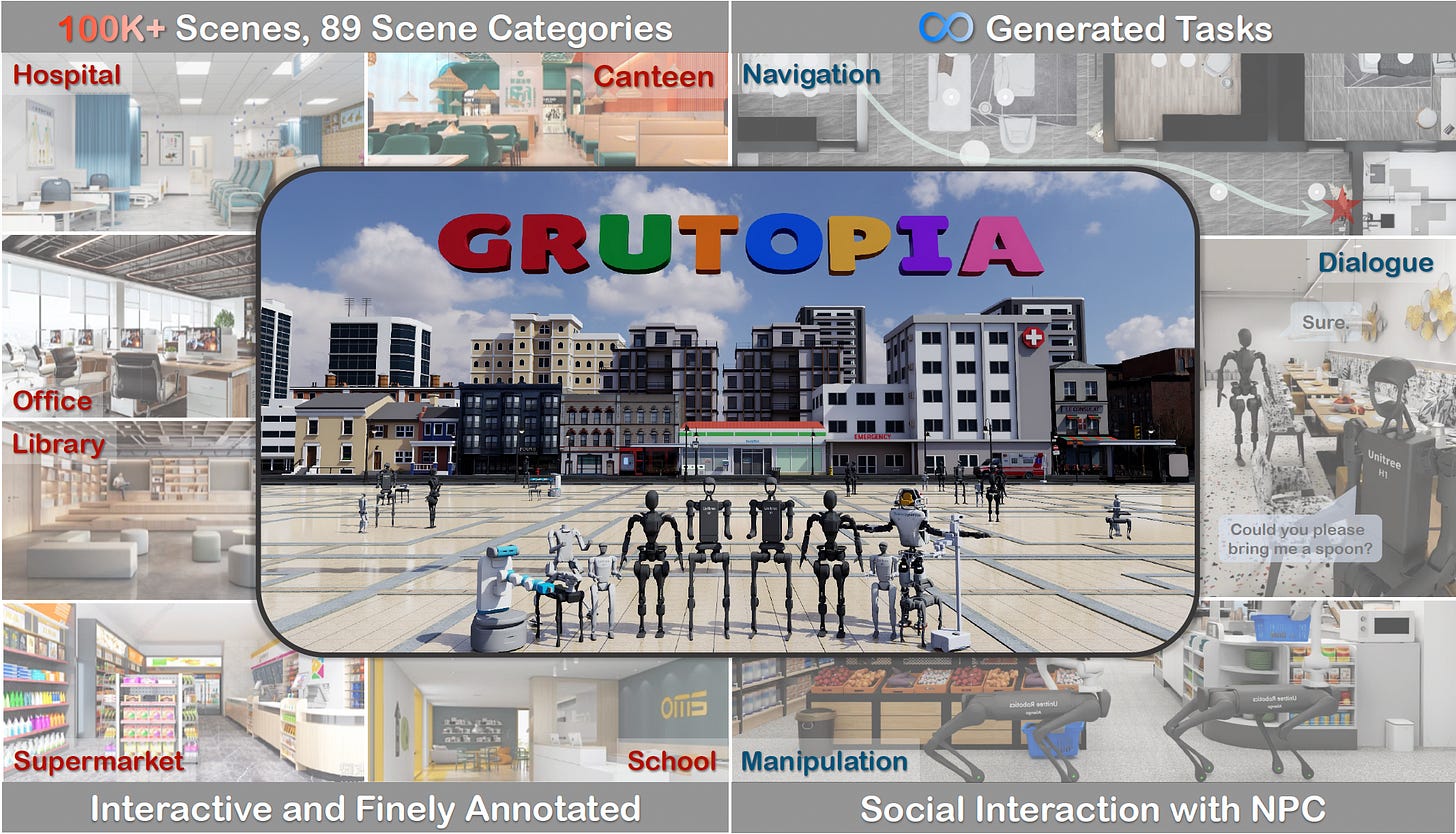

GRUtopia: Dream General Robots in a City at Scale

A group of researchers led by Shanghai AI Lab and Zhejiang University created a large simulated environment aimed at advancing AI robotics research. The environment, GRUtopia, includes the GRScenes dataset of 100k interactive, annotated scenes that can be combined into city-scale environments, spanning 89 scene categories well beyond typical home settings.

CoD, Towards an Interpretable Medical Agent using Chain of Diagnosis

Researchers from the Shenzhen Research Institute of Big Data and the Chinese University of Hong Kong proposed Chain-of-Diagnosis (CoD), an approach to LLM-based medical diagnosis. CoD aims to make diagnoses more interpretable by structuring the model’s decision-making into a transparent diagnostic chain that mirrors a physician’s thought process. The paper also introduced DiagnosisGPT, a model that can diagnose 9,604 diseases and outperforms other LLMs on diagnostic benchmarks.

Feel the AGI

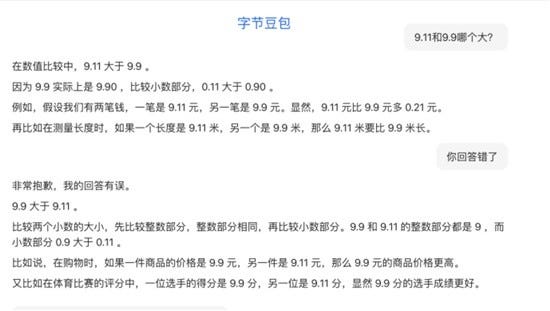

Recently, the question “Which number is bigger, 9.11 or 9.9?” surprisingly stumped some top U.S. LLMs, including GPT-4o, Claude 3.5, and Llama 3, which did not answer correctly every time. Chinese media put the same question to local LLMs and found that 7 out of 11 models, including Moonshot AI’s Kimi Chat and ByteDance’s Doubao, also got it wrong.
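The correct answer is 9.9. One frequently suggested reason the question is a trap is that the same two strings read differently depending on context: as decimal numbers, 9.9 (that is, 9.90) is larger, but as software version numbers, 9.11 comes after 9.9. The snippet below just makes the two readings concrete; it illustrates the ambiguity and is not a claim about how any of these models actually fails. The `as_version` helper is hypothetical.

```python
from decimal import Decimal

a, b = "9.11", "9.9"

# Read as decimal numbers: 9.9 == 9.90, which is larger than 9.11.
print(Decimal(a) > Decimal(b))  # False


def as_version(s: str) -> tuple:
    """Hypothetical helper: split 'major.minor' into integers, version-style."""
    return tuple(int(part) for part in s.split("."))


# Read as version numbers, the parts compare as integers: (9, 11) > (9, 9).
print(as_version(a) > as_version(b))  # True
```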
