🤺 Huawei All-In on AI, Platforms Mandate AI Watermarks, and Britain Invites China to AI Summit

Weekly China AI News from September 18 to September 24

Hello readers, apologies for the radio silence last week; I was swamped with moving. In this week's newsletter, I will discuss Huawei's big move in AI (despite its low-key PR). Plus, multiple platforms including TikTok, Bilibili, and WeChat have introduced rules for labeling AI-generated content. Also, Britain has invited China to its upcoming high-profile AI event.

Huawei Goes All-In on AI, Emphasizing Computing Power

What’s new: At Huawei Connect 2023 in Shanghai on September 20, Huawei’s Deputy Chairwoman Sabrina Meng announced the company’s ambitious “All Intelligence” strategy. The goal is to develop foundational AI technologies and a robust computing infrastructure for a wide range of industries.

How it works: The All Intelligence strategy rests on three pillars: making all objects connectable, empowering all applications with models, and leveraging ubiquitous computing power to accelerate intelligence.

Huawei also introduced the Atlas 900 SuperCluster, an AI computing cluster built from thousands of Ascend 910 processors, which Huawei released in 2019 and which remain its most powerful AI chips. Huawei said the Atlas 900 SuperCluster can support training of AI foundation models with more than one trillion parameters. The cluster uses Huawei's CloudEngine XH16800 switches and can connect up to 2,250 nodes per cluster, for a total of up to 18,000 NPUs.
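The cluster figures above imply some simple arithmetic. The sketch below divides 18,000 NPUs by 2,250 nodes and gives a rough sense of why a trillion-parameter model needs a cluster of this size. It assumes a common rule of thumb (not a Huawei figure) of about 16 bytes of training state per parameter for mixed-precision training with the Adam optimizer, and it assumes that state is split perfectly evenly across every NPU. It ignores activations and communication buffers and says nothing about how Huawei actually partitions training.

```python
# Figures quoted by Huawei for the Atlas 900 SuperCluster
TOTAL_NPUS = 18_000
NODES = 2_250
PARAMS = 1_000_000_000_000  # "over one trillion parameters"

# Assumption (rule of thumb, not a Huawei figure): mixed-precision training with Adam
# keeps ~16 bytes per parameter: fp16 weights (2) + fp16 gradients (2)
# + fp32 master weights, momentum and variance (4 + 4 + 4).
BYTES_PER_PARAM = 16

npus_per_node = TOTAL_NPUS // NODES
model_state_tb = PARAMS * BYTES_PER_PARAM / 1e12
# Assumption: the model state is sharded evenly across every NPU in the cluster
per_npu_gb = PARAMS * BYTES_PER_PARAM / TOTAL_NPUS / 1e9

print(f"NPUs per node: {npus_per_node}")                       # 8
print(f"Total model state: {model_state_tb:.0f} TB")           # 16 TB
print(f"Per-NPU share if fully sharded: {per_npu_gb:.2f} GB")  # 0.89 GB
```

The point is scale: roughly 16 TB of training state cannot fit on any single accelerator, so it has to be spread across thousands of them.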

Why it matters: This is Huawei's first major strategic shift in a decade, following the "All IP" strategy launched in 2003 and the "All Cloud" strategy launched in 2013. Ren Zhengfei, the company's founder, framed the stakes in an earlier talk:

We are on the cusp of the fourth industrial revolution, the cornerstone of which is enormous computing power.

Huawei is also positioning itself as an alternative to NVIDIA in China's AI training chip market, where NVIDIA is estimated to hold up to 95% of the market. Amid ongoing U.S. chip restrictions and geopolitical uncertainty, Huawei seeks to offer a viable second option. iFlytek Chairman Liu Qingfeng has praised Huawei's Ascend 910 chip, saying its performance is comparable to NVIDIA's A100.

The pressing question remains: How will Huawei keep supplying AI chips beyond its stockpiles, given that TSMC, which made the 7nm Ascend 910, stopped taking new orders from Huawei following the U.S. ban? Huawei's recent breakthrough offers a glimmer of hope: the Kirin 9000S SoC in its Mate 60 smartphones, reportedly produced by China's SMIC, suggests a potential domestic way out of this quandary.

How Platforms and Regulations Address AI-Generated Content

What's new: Platforms run by Chinese companies are introducing labels for AI-generated content. TikTok, Bilibili, and WeChat recently announced features for labeling AI-generated or technologically enhanced content, while academic and corporate research teams have developed watermarking tools.

How it works:

TikTok launched a feature that tags AI-generated content. Content without proper tagging could face removal.

Bilibili introduced a “Creator Declaration” feature to allow labels like “AI-generated” or “Fictional Content” to be added to videos.

WeChat requires self-media creators to label their published content, particularly content generated using deep synthesis technologies.

SenseTime introduced an AI governance platform called SenseTrust, which features a digital watermarking solution.

Shanghai Jiao Tong University released an open-source tool named “Mist”, which places invisible watermarks on images to prevent AI imitation.

Early precedents include Douyin and Xiaohongshu, which in May began requiring creators to label content as "Generated by AI". Douyin also released guidelines on watermarking and metadata standards for AI-generated content to help users distinguish between virtual and real content.
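None of the announcements above describe how these watermarks work internally. As a generic illustration of what an "invisible watermark" can mean, here is a minimal least-significant-bit (LSB) sketch that hides a label such as "AI-generated" in an image's pixel values. It is a textbook technique, not the method used by SenseTrust, Mist, or Douyin, and the function names are hypothetical. LSB marks are also fragile: re-encoding, resizing, or cropping can erase them, which is why production systems favor more robust schemes.

```python
import numpy as np

def text_to_bits(text):
    # Encode the label as UTF-8 and expand each byte into 8 bits (MSB first)
    return [int(b) for byte in text.encode("utf-8") for b in f"{byte:08b}"]

def bits_to_text(bits):
    # Regroup bits into bytes and decode them back to a string
    data = bytes(int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")

def embed_bits(image, bits):
    """Hypothetical helper: overwrite the lowest bit of the first len(bits) pixel values."""
    flat = image.astype(np.uint8).flatten()  # flatten() returns a copy
    if len(bits) > flat.size:
        raise ValueError("image too small for payload")
    payload = np.array(bits, dtype=np.uint8)
    flat[: len(bits)] = (flat[: len(bits)] & 0xFE) | payload
    return flat.reshape(image.shape)

def extract_bits(image, n):
    """Hypothetical helper: read back the lowest bit of the first n pixel values."""
    return [int(b) for b in image.astype(np.uint8).flatten()[:n] & 1]

rng = np.random.default_rng(42)
img = rng.integers(0, 256, size=(16, 16, 3), dtype=np.uint8)
label = "AI-generated"
bits = text_to_bits(label)
marked = embed_bits(img, bits)
print(bits_to_text(extract_bits(marked, len(bits))))
```

Each pixel value changes by at most 1, which is invisible to the eye, yet the label can be recovered exactly as long as the file is not re-encoded.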

Why it matters: AI labeling systems have significant implications for transparency, regulatory compliance, and security. As AI-generated content becomes increasingly indistinguishable from human-made content, AI labeling allows users to understand the origin of the content and safeguards against misinformation.

Global regulation: Both the European Union and China are moving to require that AI-generated content be watermarked or labeled.

The European Parliament approved its draft of the "Artificial Intelligence Act" on June 14, which would require AI-generated content to be labeled to improve transparency. The act has not yet come into force.

China's generative AI regulation went into effect on August 15. It requires service providers to take effective measures to improve the accuracy and reliability of generated content and the transparency of their services, and to label generated content such as images and videos in line with China's earlier deep synthesis rules.

Britain Invites China to Upcoming AI Safety Summit

What's new: Britain has invited China to its upcoming AI Safety Summit, Foreign Secretary James Cleverly confirmed in a statement last Tuesday, Reuters reported. The event, scheduled for November 1-2, aims to bring together tech executives, academics, political leaders, and international governing bodies to discuss the safety and risks of AI technology.

How it works: The summit will focus on regulatory frameworks, ethical considerations, and practical safety measures surrounding AI. By inviting China, Britain seeks to engage one of the leading nations in AI technology in these crucial conversations. Cleverly emphasized that Britain’s approach is to protect its institutions while aligning with international partners.

Why it matters: The invitation reflects a new British foreign policy of engaging, rather than isolating, China. Cleverly visited China last month, becoming the most senior British minister to visit the country in five years. In a separate Bloomberg report, UK Chancellor of the Exchequer Jeremy Hunt said that the West needs to start conversations with China on effective ways to regulate AI.

Weekly News Roundup

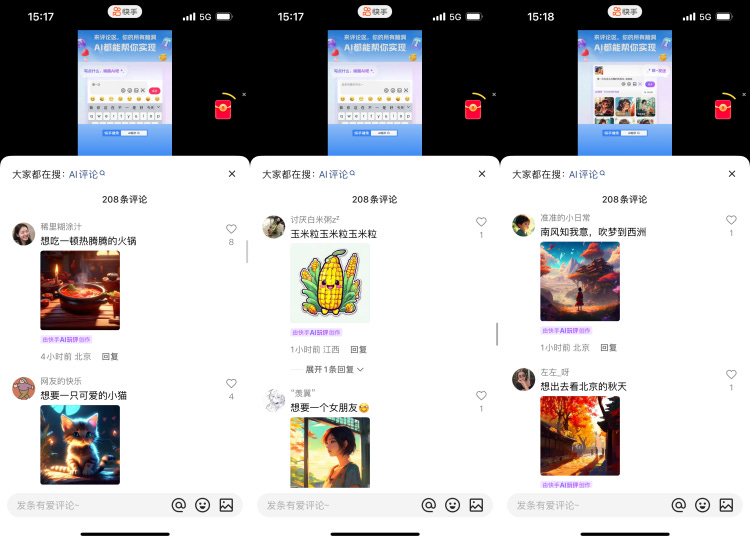

📸 Kuaishou releases its new model Kolors, which powers a feature called "Kuaishou AI Reviews" that lets users generate creative images from text prompts in video comments.

🦄 Fourth Paradigm, a Beijing-based AI unicorn, announces plans to list its H-shares on the Main Board of the Hong Kong Stock Exchange under the code 6682.HK. The IPO, which aims to issue 18.396 million shares globally, kicked off on September 18 and was expected to close and be priced on September 21. With a per-share price range of HK$55.60 to HK$61.16, the total fundraising amount is projected to be between HK$1.02 billion and HK$1.13 billion. Its shares are scheduled to begin trading on September 28.

💰 Zhipu AI has reportedly completed its B-4 financing round, led by heavyweights including Tencent and Alibaba Cloud, at an estimated valuation of around $1 billion.

📜 Baidu's ERNIE Bot receives a software copyright registration from the China Copyright Protection Center for its current version, V1.0.0.

🤖 Shanghai AI Laboratory and other institutions unveil InternLM-20B, a medium-sized language model with 20 billion parameters that shows improved understanding, reasoning, and programming capabilities. The model is available for free commercial use on Alibaba's ModelScope.

🚗 Baidu Apollo and Pony.ai have obtained permission from Beijing authorities to operate commercial driverless robotaxi services in Beijing’s Yizhuang Economic Development Zone.
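As a quick check of the fundraising range quoted for Fourth Paradigm above, gross proceeds are simply the number of shares offered times the offer price. This ignores underwriting fees and any over-allotment option, which the reported figures may or may not account for:

```latex
\begin{aligned}
18.396\times10^{6} \times \text{HK\$}55.60 &= \text{HK\$}1{,}022{,}817{,}600 \approx \text{HK\$}1.02\ \text{billion}\\
18.396\times10^{6} \times \text{HK\$}61.16 &= \text{HK\$}1{,}125{,}099{,}360 \approx \text{HK\$}1.13\ \text{billion}
\end{aligned}
```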

Trending Research

In a comprehensive survey, researchers examine the potential of LLMs as a foundation for versatile AI agents. While most prior work focuses on specialized algorithms for narrow tasks, the study argues that the field still lacks a general, powerful model to serve as the starting point for adaptable agents. Given their versatility, the authors see LLMs as a possible spark for Artificial General Intelligence, serving as the basis for AI agents that can work across different scenarios.

Paper: The Rise and Potential of Large Language Model Based Agents: A Survey

Affiliations: Fudan NLP Group (miHoYo was listed as an affiliation in the first version of the paper)

Researchers introduce LongLoRA, a cost-effective method for extending the context windows of LLMs without heavy computational demands. The paper combines two strategies: sparse local attention for efficient fine-tuning, which the authors call shifted sparse attention (S²-Attn) and say can be implemented in two lines of training code, and a revisited parameter-efficient (LoRA) regime for context expansion, which works well when the embedding and normalization layers are also trainable. LongLoRA shows promising results across various tasks on LLaMA2 models from 7B to 70B parameters. The aim is to make long-context LLMs more accessible while keeping computational costs low.

Paper: LongLoRA: Efficient Fine-tuning of Long-Context Large Language Models

Affiliations: CUHK, MIT
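The sparse local attention strategy in LongLoRA can be illustrated with a minimal NumPy sketch of shifted sparse attention, written from a reading of the paper rather than from the authors' code. Tokens are split into groups of size G; half of the attention heads attend only within their own group, while the other half attend within groups shifted by G/2, so neighboring groups exchange information. Assumptions: a single sequence with no batch dimension, no causal mask, and hypothetical function names; the LoRA adapters and trainable embedding and normalization layers are not shown.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def group_attention(q, k, v, group_size):
    """Full (non-causal) attention inside each block of `group_size` consecutive tokens.
    q, k, v have shape (N, H, D); N must be divisible by group_size."""
    n, h, d = q.shape
    if n % group_size:
        raise ValueError("sequence length must be divisible by group_size")
    g = n // group_size
    qg, kg, vg = (x.reshape(g, group_size, h, d) for x in (q, k, v))
    scores = np.einsum("gihd,gjhd->ghij", qg, kg) / np.sqrt(d)  # (groups, H, G, G)
    out = np.einsum("ghij,gjhd->gihd", softmax(scores), vg)      # (groups, G, H, D)
    return out.reshape(n, h, d)

def shifted_sparse_attention(q, k, v, group_size):
    """Sketch of S²-Attn: the first half of the heads attend within fixed groups,
    the second half within groups shifted by group_size // 2."""
    half = q.shape[1] // 2
    shift = group_size // 2
    out_fixed = group_attention(q[:, :half], k[:, :half], v[:, :half], group_size)
    # Roll tokens so group boundaries fall halfway through the original groups
    rolled = [np.roll(x[:, half:], -shift, axis=0) for x in (q, k, v)]
    # Attend within the shifted groups, then roll the outputs back into place
    out_shifted = np.roll(group_attention(*rolled, group_size), shift, axis=0)
    return np.concatenate([out_fixed, out_shifted], axis=1)

rng = np.random.default_rng(0)
N, H, D, G = 8, 4, 3, 4
q, k, v = (rng.standard_normal((N, H, D)) for _ in range(3))
out = shifted_sparse_attention(q, k, v, G)
```

During fine-tuning each token attends to at most G others instead of all N, so attention cost grows with N·G rather than N²; the paper notes that standard full attention can still be used at inference.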