🧠 Former Microsoft Exec Believes OpenAI Could be 10 Times Bigger than Google

Weekly China AI News from May 1 to May 7

My dear friends! People in China’s bustling AI industry took a well-deserved Labor Day break. So instead of presenting you with the latest news, I wanted to share an insightful perspective from Qi Lu, a former executive at Microsoft and Baidu, on ChatGPT and LLMs. Also, the World Health Organization has finally declared that Covid-19 is no longer a global health emergency. We can now close the chapter on the heart-wrenching past three years, embrace the new normal, and step forward together with hope, love, and compassion in our hearts.

Weekly News Roundup

🧬 A team of researchers from Baidu Research has developed an AI algorithm that can rapidly design highly stable mRNA vaccine sequences. The algorithm is named LinearDesign, and it is reported to boost the antibody response of a COVID-19 vaccine 128-fold. Interestingly, the researchers borrowed a technique from natural language processing (NLP). Identifying the optimal mRNA sequence is similar to determining the most likely sentence among a set of similar-sounding alternatives.
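Here is a toy sketch that makes the NLP analogy concrete. It is not Baidu's code.

- Each amino acid can be encoded by several synonymous codons. A protein therefore defines a lattice of candidate mRNA "sentences."
- A Viterbi-style dynamic program finds the best-scoring path through that lattice, much as a speech recognizer picks the most likely sentence.
- The codon subset, the per-codon scores, and the `pair_score` bonus for adjacent codons are all hypothetical placeholders.
- The published LinearDesign method jointly optimizes mRNA folding stability and codon usage via lattice parsing. This sketch models neither.

```python
from typing import Dict, List, Tuple

# Hypothetical subset of the genetic code: amino acid -> synonymous codons.
CODONS: Dict[str, List[str]] = {
    "M": ["AUG"],
    "F": ["UUU", "UUC"],
    "L": ["CUG", "CUC", "UUA"],
    "K": ["AAA", "AAG"],
}

# Hypothetical per-codon log-scores (placeholders for codon preference; higher is better).
CODON_SCORE: Dict[str, float] = {
    "AUG": 0.0, "UUU": -0.7, "UUC": -0.2,
    "CUG": -0.1, "CUC": -0.9, "UUA": -2.0,
    "AAA": -0.8, "AAG": -0.3,
}


def pair_score(prev: str, curr: str) -> float:
    """Hypothetical 'bigram' term: a small bonus when adjacent codons share the boundary base."""
    return 0.5 if prev[-1] == curr[0] else 0.0


def best_mrna(protein: str) -> Tuple[str, float]:
    """Viterbi search: the highest-scoring codon sequence that encodes `protein`."""
    # best[c] = (score, path) of the best partial sequence ending with codon c
    best = {c: (CODON_SCORE[c], [c]) for c in CODONS[protein[0]]}
    for aa in protein[1:]:
        new_best = {}
        for c in CODONS[aa]:
            # Extend the best predecessor path, as Viterbi does on a word lattice.
            score, path = max(
                (s + pair_score(p[-1], c) + CODON_SCORE[c], p) for s, p in best.values()
            )
            new_best[c] = (score, path + [c])
        best = new_best
    score, path = max(best.values())
    return "".join(path), score
```

Take the hypothetical four-residue protein "MFLK". For it, `best_mrna` returns `AUGUUCCUGAAG` with a score of about -0.1. The scores only look one codon back, so dynamic programming finds the same answer as brute-force enumeration while visiting each lattice edge only once.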

🔗 Mogo Auto, an autonomous driving startup founded in 2017, has closed a RMB 580 million Series C2 financing round, with participation from Tencent.

🤖 iFlyTek has introduced Spark Desk, a ChatGPT competitor that reportedly outperforms ChatGPT in language generation, question answering, and mathematics. Spark Desk can also help students review and correct their homework on iFlyTek’s AI Learning Pad. One early user found that its training data appears to end in September 2021, the same cutoff as ChatGPT's.

Ex-Microsoft Exec Qi Lu Predicts OpenAI Could Grow 10 Times Larger than Google

What’s new: Qi Lu is a leading advocate for AI in China. He previously served as Baidu's COO, and before that as a Microsoft executive who led the development of Bing, Skype, and Microsoft Office. At a recent event, he shared his perspectives on ChatGPT, OpenAI, and LLMs.

Lu said he can’t help but marvel at the staggering speed of the big model era, admitting that even he struggles to keep up with it.

Lu is delighted to see Sam Altman, OpenAI CEO and a friend of his, making a difference in the world.

Any change that transforms society and industries is driven by a structural change. Such structural changes often involve a large-scale cost shifting from marginal to fixed.

As for the future growth boundaries of OpenAI, Lu believes that OpenAI will definitely be larger than Google, with the only question being whether it will be 1, 5, or 10 times larger.

Lu also offers some advice to entrepreneurs, saying that while having strong technical skills as a founder may seem impressive and important right now, it won't be that crucial in the future. Technology like ChatGPT will be able to help with those tasks. As a founder, what's becoming increasingly important and valuable is the willingness and mental strength to succeed.

Below is the full English transcript (translated courtesy of ChatGPT) - it’s suuuuuuper loooooong! Please note that in China, foundation models (FMs) or large language models (LLMs) are commonly referred to as big models or large models (大模型). You can find the original transcript here.

1. At the core of every social inflection point is a large-scale cost shifting from marginal to fixed.

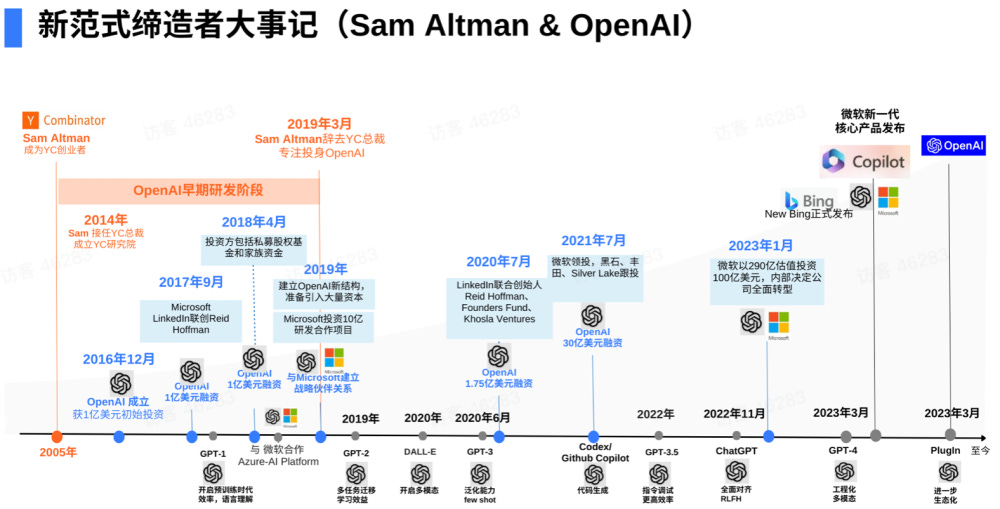

I met Sam Altman in 2005, when he was under 19 and I was already in my 40s. We became friends despite the age difference. He was a kind and quirky kid, and today I am glad to see him changing the world. Recently, around the Chinese New Year, I spent three months in the US and had several conversations with Sam at OpenAI.

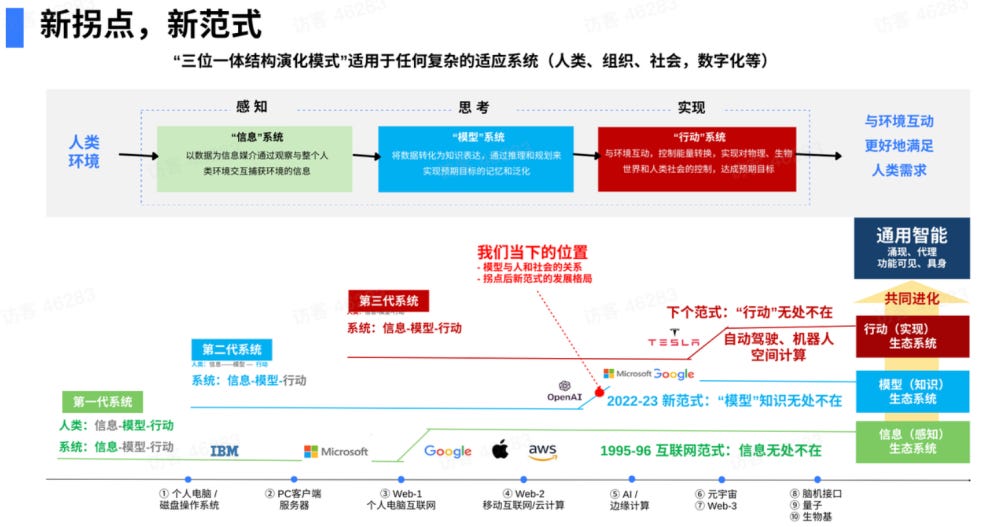

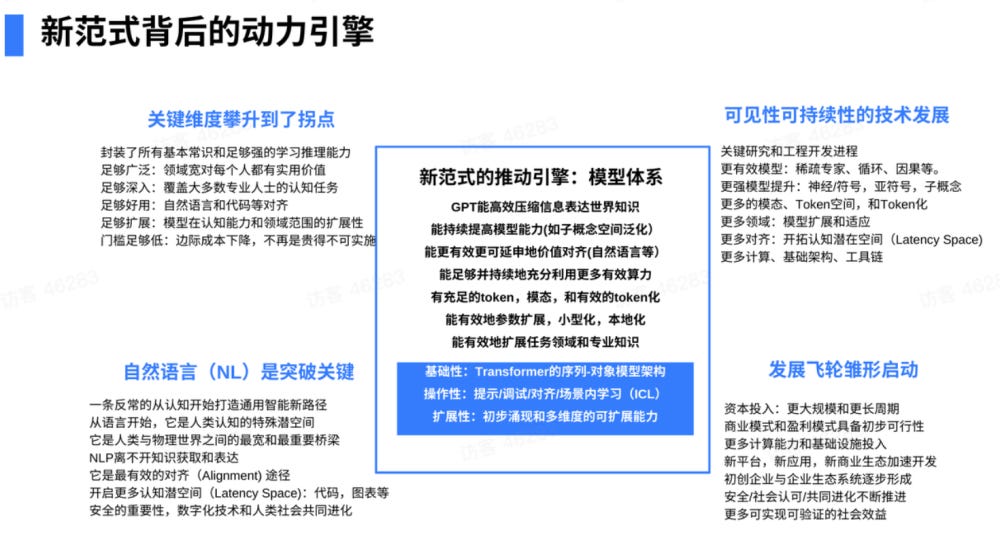

First, how should we understand this new paradigm? This figure explains everything that ChatGPT and OpenAI have brought. Reasoning from first principles, you can naturally deduce the opportunities and challenges in the field.

This figure is the "trinity evolution model," which essentially explains that any complex system, including a person, a company, a society, or even a digital system, is a combination of three subsystems:

The subsystem of information, which obtains information from the environment;

The subsystem of models, which expresses and reasons about the information;

The subsystem of action, which interacts with the environment to achieve human goals.
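The sketch below is a rough illustration of the trinity model. It wires three hypothetical functions into one loop:

- an information subsystem that reads the environment;
- a model subsystem that reasons about the reading;
- an action subsystem that changes the environment toward a goal.

The thermostat example, its 21-degree target, and all names are assumptions made for illustration. None of them comes from Lu's talk.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Environment = Dict[str, float]


@dataclass
class TrinitySystem:
    """Hypothetical sketch of the trinity model: information -> model -> action."""
    sense: Callable[[Environment], float]      # information subsystem: observe the environment
    model: Callable[[float], str]              # model subsystem: reason about the observation
    act: Callable[[Environment, str], None]    # action subsystem: change the environment

    def step(self, env: Environment) -> str:
        observation = self.sense(env)
        decision = self.model(observation)
        self.act(env, decision)
        return decision


# Hypothetical example: a thermostat that aims to keep a room at 21 degrees C.
def read_temperature(env: Environment) -> float:
    return env["temperature"]


def decide(temperature: float, target: float = 21.0) -> str:
    if temperature < target - 0.5:
        return "heat"
    if temperature > target + 0.5:
        return "cool"
    return "idle"


def apply(env: Environment, decision: str) -> None:
    env["temperature"] += {"heat": 1.0, "cool": -1.0, "idle": 0.0}[decision]


thermostat = TrinitySystem(sense=read_temperature, model=decide, act=apply)
room: Environment = {"temperature": 18.0}
history: List[str] = [thermostat.step(room) for _ in range(5)]
```

Starting from 18 degrees, five steps yield `heat`, `heat`, `heat`, `idle`, `idle`. You could swap in a richer `model` function, such as a learned one. That changes the system's behavior without touching the information or action subsystems, mirroring the three-part split described above.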

This applies to any system, and especially to digital systems. Digitization cannot be separated from people. People likewise need to obtain information, express it, and take action to solve problems or satisfy needs.

Based on this, we can draw a simple conclusion. Today, most digital products and companies, including Google, Microsoft, Alibaba, and ByteDance, are essentially information-moving companies. Remember that everything we do, including most companies present here, is just moving information. Nothing more than that. But it is good enough to change the world.

Back in 1995-1996, the PC Internet brought us to an inflection point. I had just graduated from Carnegie Mellon University (CMU) at that time. Numerous companies emerged, including a great company called Google. Why did this inflection point happen, and why did it bring explosive growth? Answering that question explains today's inflection point.

The reason is that the marginal cost of obtaining information began to shift to a fixed cost.

Remember, anything that changes society or industry is always a structural change. That structural change is often a large-scale cost shifting from marginal to fixed.

For example, when I was studying at CMU, a map for getting out of Pittsburgh cost $3. Information was expensive to obtain. Today maps still cost money, but the cost is fixed. Google spends $1 billion a year making maps, yet the cost for each user to obtain map information is basically zero. In other words, when the cost of obtaining information drops to zero, it inevitably changes every industry. That is what has happened over the past 20 years, and today free information is basically everywhere.
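The marginal-to-fixed shift comes down to simple arithmetic. Average cost per user is the fixed cost spread over all users, plus the marginal cost of serving each one.

The sketch below uses two figures from the talk: the $3 paper map and Google's $1 billion a year. The user counts are hypothetical. Treating the paper map's fixed cost and the digital map's marginal cost as zero is a simplifying assumption.

```python
def cost_per_user(fixed_cost: float, marginal_cost: float, users: int) -> float:
    """Average cost per user: fixed cost spread across all users, plus the per-user marginal cost."""
    if users <= 0:
        raise ValueError("users must be positive")
    return fixed_cost / users + marginal_cost


# Paper map: (assumed) no fixed cost, $3 marginal cost per copy, regardless of scale.
paper_small = cost_per_user(0.0, 3.0, 1_000)
paper_large = cost_per_user(0.0, 3.0, 1_000_000_000)

# Digital map: $1 billion a year fixed cost, marginal cost per user assumed to be zero.
digital_small = cost_per_user(1e9, 0.0, 1_000_000)      # hypothetical 1 million users
digital_large = cost_per_user(1e9, 0.0, 1_000_000_000)  # hypothetical 1 billion users
```

- The paper map costs $3 per user at any scale.
- The digital map falls from $1,000 to $1 per user as the hypothetical audience grows from one million to one billion, and it keeps falling toward zero beyond that.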

Why is Google great? It turned the marginal cost of information into a fixed cost. Google has high fixed costs, but it also has a simple business model: advertising. That combination made it a highly profitable, world-changing company, and it is the key to the inflection point.

What is the inflection point of 2022-2023? It is unstoppable, and the reason is the same: the cost of models is shifting from marginal to fixed. Big models are the technical core and the industrial foundation. OpenAI has built that foundation, and progress will climb quickly from here. Why are models and this inflection point so important? Because models have an inherent relationship with people. Every person is a combination of three models:

Cognitive model: We can see, hear, think, and plan;

Task model: We can climb stairs, move chairs, and peel eggs;

Domain model: Some of us are doctors, some are lawyers, and some are coders.

That's all. Our contributions to society are a combination of these three models. People don't make money based on physical labor, but by using their brains.

Think about it. What will happen if you don't have much insight and your modeling ability is similar to a big model's? Or if a big model gradually learns all your models? In the future, the only valuable thing will be how much insight you have.

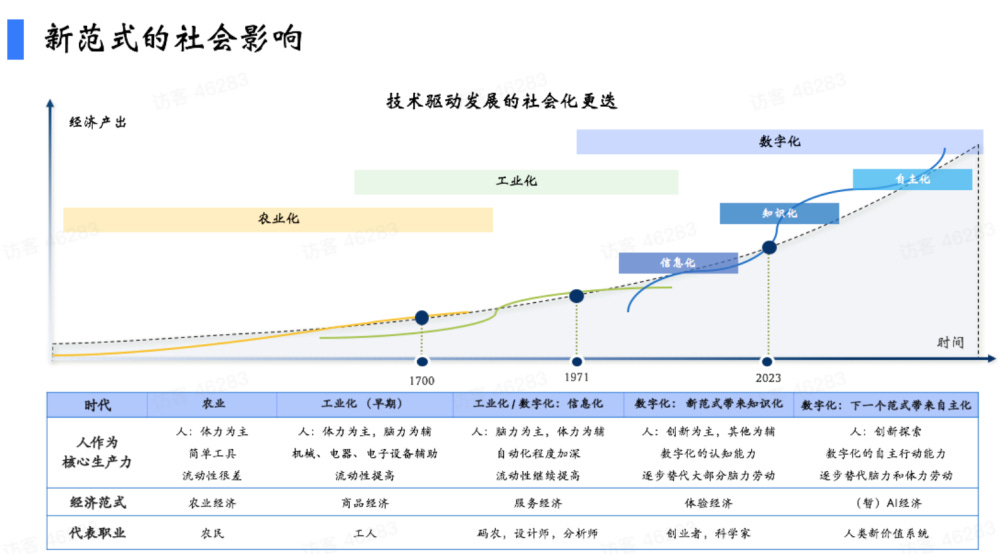

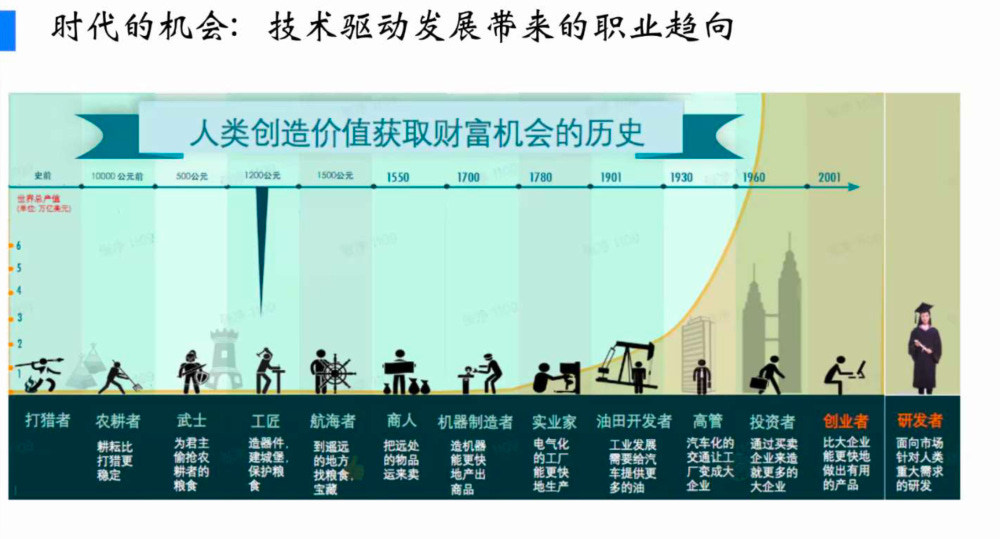

Human society is driven by technology.

In the agricultural era, people used tools to do simple labor. The biggest problem was that people were tied to the land: they lacked mobility and had no freedom.

The biggest change industrialization brought was that people could move to cities and factories. In the early industrial system, physical labor was the main focus, with mental labor as a supplement. But with mechanization, electrification, and electronics, physical labor has decreased.

In the information age, people mainly rely on mental labor, and the economy has shifted from a commodity economy to a service economy. Coders, designers, and analysts have become the typical occupations of our era.

This time, the big-model inflection point will affect everyone in the service economy, and blue-collar workers as well. Their work is essentially a set of models, and unless they have unique insights, big models that can provide the same services are already in place. We believe that the typical professions of the next era will be entrepreneurs and scientists.

Therefore, this change will affect everyone. It affects the whole society.

2. What are the three inflection points I have seen?

What is the next inflection point?

The next inflection point will be "action" everywhere, a combination of autonomous driving, robots, and spatial computing. People need to act in physical space, and the cost of doing so is shifting from marginal to fixed. In 20 years, everything in this house will have robotic arms and automation. I will be able to press a button for anything I need and have software do it, rather than having to find someone to do it.

So, which companies can reach and stand at the next inflection point? I believe Tesla has a high probability, as its autonomous driving and robotics are already advanced. Microsoft is climbing with OpenAI today, but how can it stand at the next inflection point?

Here are the three inflection points we see:

Today, information is everywhere, and in the next 15-20 years, models will be ubiquitous. When you open your phone or any networked device, the models will come to you. They will teach you how to solve legal problems and how to perform medical tests. Every kind of model will be ubiquitous.

In the future, automated and autonomous actions will be ubiquitous.

Humans and digital technology will evolve together. Sam often talks about this. He believes they must co-evolve to achieve artificial general intelligence (AGI). The four essential elements of general intelligence are emergence, agency, affordance, and embodiment.

To summarize: analyze the fundamental trinity structure and look at past inflection points, and the current inflection point becomes clear. The cost of models will shift from marginal to fixed, and one or even several great companies will emerge. Undoubtedly, OpenAI is currently leading the way.

It may be a bit early to say, but I personally believe that OpenAI will definitely become bigger than Google. The only question is whether it will be one, five, or ten times bigger.

3. The Core Beliefs of OpenAI: Two Things That Surprised Even Sam Himself

Now I will talk about OpenAI's major achievements from a technical perspective, and how it is driving the era of large models.

Why am I talking about OpenAI and not Google or Microsoft? To be honest, I know Microsoft has several thousand people working on this, and they are not as good as OpenAI. At the beginning, Bill Gates didn't even believe in OpenAI, and he probably still didn't six months ago. Four months ago, when he saw the GPT-4 demo, he was stunned. He wrote an article saying, "It’s a shock, this thing is amazing." Even Google was surprised.

The key technologies that OpenAI has developed along the way are:

GPT-1 marked the first use of pre-training for efficient language understanding.

GPT-2 mainly used transfer learning to apply pre-trained knowledge efficiently across various tasks, further improving language understanding.

DALL·E extended the approach to another modality: images.

GPT-3 mainly focused on generalization, especially few-shot generalization.

With GPT-3.5, the biggest breakthroughs were instruction following and instruction tuning.

GPT-4 has already moved into engineering implementation.

The plugins launched in March 2023 are about building an ecosystem.

Why is OpenAI's financing structure designed this way? It is inseparable from Sam's early goals and his judgment about the future. He knew he needed to raise a lot of money, but a conventional equity structure posed a significant challenge: it easily mixes returns with control. So he designed a structure that would not be constrained by any shareholder. OpenAI's investors have no control, and their agreements take the form of debt. If they make 2 trillion dollars, OpenAI will become a non-profit organization and everything will go back to society. This era requires new structures.

It (OpenAI) is unstoppable. Even Sam Altman himself is surprised; he didn't expect it to happen so quickly.

If you are interested in technology, Ilya Sutskever (OpenAI co-founder and chief scientist) is very important. He firmly believes in two things.

First is the model architecture. It should be deep enough, and once it reaches a certain depth, bigger is better: as long as there is computing power and data, the bigger the better. They started with LSTM (long short-term memory) but switched to the Transformer once they saw it.

The second thing that OpenAI believes in is that any paradigm that changes everything will always have an engine that can continuously advance and create value.

This engine is essentially a model system. Its core is the Transformer architecture, which is a sequence model: sequence in, sequence out, in encoder-decoder or decoder-only form. But the ultimate core is GPT, the pre-trained Transformer, which can compress information extremely well. Ilya believes that if you can compress information efficiently, you must have knowledge; otherwise you could not compress it.
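Ilya's compression argument rests on a standard fact from information theory. An ideal coder spends -log2 p bits on a symbol it predicted with probability p, so a model that predicts better also compresses better.

The toy sketch below compares two models:

- one that knows nothing and treats every symbol as equally likely;
- a bigram model that predicts each character from the previous one.

GPT does the same thing with a far richer context. Three choices here are made only for illustration:

- the test string is arbitrary;
- the add-one smoothing is arbitrary;
- the bigram probabilities are fitted on the very text they encode, so the cost of storing the model itself is ignored.

```python
import math
from collections import Counter, defaultdict
from typing import DefaultDict


def uniform_bits(text: str) -> float:
    """Code length when every symbol of the text's alphabet is equally likely."""
    return len(text) * math.log2(len(set(text)))


def bigram_bits(text: str) -> float:
    """Ideal code length (sum of -log2 p) when each symbol is predicted from the previous one.

    Transition probabilities are estimated from `text` itself with add-one smoothing.
    """
    alphabet_size = len(set(text))
    counts: DefaultDict[str, Counter] = defaultdict(Counter)
    for prev, curr in zip(text, text[1:]):
        counts[prev][curr] += 1

    bits = math.log2(alphabet_size)  # first symbol has no context: uniform guess
    for prev, curr in zip(text, text[1:]):
        p = (counts[prev][curr] + 1) / (sum(counts[prev].values()) + alphabet_size)
        bits -= math.log2(p)
    return bits
```

Try the repetitive string "abab...ab" (16 characters). The uniform model needs exactly 16 bits. The bigram model needs under 4 bits, because after "a" it confidently expects "b", and vice versa.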

Ilya firmly believes that GPT-3, GPT-3.5, and of course GPT-4 already contain a world model. The model is only predicting the next word, but that is just the optimization method. The model has already captured information about the world, and its abilities can keep improving. This is especially true of generalization in the sub-concept space, which is the current focus of research.

Knowledge graphs really don't work. If any of you are working on knowledge graphs, I'm telling you seriously: don't use them. I've been working on knowledge graphs for more than 20 years, and they're just bad. They do not work at all. You should use the Transformer.

More importantly, use reinforcement learning to align with human feedback and human values. GPT models have been accumulating knowledge for over four years. In the past, though, that knowledge was nearly impossible to use, or at least very difficult.

The biggest thing is alignment engineering, especially instruction following and natural language alignment. Of course, it can also align with code, tables, and charts.
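One common ingredient of reinforcement learning from human feedback is a reward model trained on human comparisons. People pick the better of two answers, and the reward model is pushed to score the chosen answer above the rejected one.

A minimal sketch of that pairwise (Bradley-Terry style) loss follows. Some limits apply:

- The reward values are hypothetical numbers.
- The talk does not detail OpenAI's exact recipe.
- The later reinforcement learning stage, which optimizes the language model against this reward, is not shown.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Reward-model loss for one human comparison: -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred answer already scores higher than the rejected one.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch on the sign so exp() never overflows.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

- Equal rewards give a loss of log 2, about 0.693.
- The loss shrinks toward zero as the chosen answer's reward pulls ahead.
- The loss grows roughly linearly when the model prefers the rejected answer.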

Building large models is difficult, and infrastructure is a big challenge. When I was at Microsoft, we didn't put a standard network card on every server; we put FPGAs on them instead. Network I/O ran over InfiniBand, and servers could directly access one another's memory.

Why? Because the Transformer is a dense model: it's not just a matter of computing power, it also requires extremely high bandwidth. Just think about it: GPT-4 needs 24,000 to 25,000 GPUs to train. How many people in the world can build such a system? The data and the data-center network architectures are all different. It can't be a three-tier architecture; it must be an east-west network architecture. So a lot of work needs to be done here.
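To see why bandwidth matters as much as compute, consider data-parallel training. There, workers must average their gradients after every step. With the widely used ring all-reduce algorithm, each of N workers sends about 2(N-1)/N times the gradient size per synchronization. Traffic per worker therefore stays at roughly twice the gradient size, no matter how many GPUs join.

The sketch below estimates a lower bound on sync time, with these caveats:

- The model size and link speeds are hypothetical.
- It ignores latency and any overlap with computation.
- Real large-scale training combines several forms of parallelism that this does not model.

```python
def ring_allreduce_bytes_per_worker(num_workers: int, payload_bytes: float) -> float:
    """Bytes each worker sends in a ring all-reduce: 2 * (N - 1) / N * S.

    The factor 2 comes from the two phases: reduce-scatter, then all-gather.
    """
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    return 2 * (num_workers - 1) / num_workers * payload_bytes


def sync_seconds(num_workers: int, payload_bytes: float, link_bytes_per_s: float) -> float:
    """Lower bound on one gradient sync, ignoring latency and compute/communication overlap."""
    return ring_allreduce_bytes_per_worker(num_workers, payload_bytes) / link_bytes_per_s


# Hypothetical dense model: 100 billion parameters with 2-byte (fp16) gradients.
GRADIENT_BYTES = 100e9 * 2
fast = sync_seconds(1000, GRADIENT_BYTES, 50e9)    # hypothetical 50 GB/s per-worker link
slow = sync_seconds(1000, GRADIENT_BYTES, 12.5e9)  # hypothetical 12.5 GB/s (100 Gb/s) link
```

With these made-up numbers, one sync takes about 8 seconds on the faster link and about 32 seconds on the slower one, on every training step. This is why large-model clusters invest in high-bandwidth interconnects such as InfiniBand.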

Tokens are very important. There may be 40-50 established kinds of tokens in the world, covering languages and modalities, and more tokenization schemes are now appearing. Of course, more models are also being parameterized and localized, so domain-specific knowledge can be integrated into these large models.

Their controllability mainly relies on prompting and tuning, especially instruction tuning, alignment tuning, and in-context learning. These are already quite well understood. Their operability keeps getting stronger, and their scalability is basically sufficient.
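Tokenization decides what the model's "words" are. A widely used approach is byte-pair encoding (BPE), and GPT models use a byte-level variant of it. BPE starts from single characters and repeatedly merges the most frequent adjacent pair into a new token.

The minimal character-level sketch below shows the core loop on a hypothetical three-word corpus. Production tokenizers also add byte handling, word-frequency weighting, and pre-tokenization rules, all omitted here.

```python
from collections import Counter
from typing import List, Tuple

Pair = Tuple[str, str]


def most_frequent_pair(words: List[List[str]]) -> Pair:
    """Count adjacent symbol pairs across all words; ties go to the pair seen first."""
    pairs: Counter = Counter()
    for symbols in words:
        pairs.update(zip(symbols, symbols[1:]))
    return max(pairs, key=pairs.__getitem__)


def merge_pair(words: List[List[str]], pair: Pair) -> List[List[str]]:
    """Replace every occurrence of `pair` inside each word with a single merged symbol."""
    merged = []
    for symbols in words:
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged.append(out)
    return merged


def learn_bpe(corpus: List[str], num_merges: int) -> Tuple[List[Pair], List[List[str]]]:
    """Learn up to `num_merges` merge rules, starting from single characters."""
    words = [list(word) for word in corpus]
    merges: List[Pair] = []
    for _ in range(num_merges):
        if all(len(symbols) < 2 for symbols in words):
            break  # nothing left to merge
        pair = most_frequent_pair(words)
        merges.append(pair)
        words = merge_pair(words, pair)
    return merges, words
```

On `["low", "lower", "lowest"]`, two merges learn `("l", "o")` and then `("lo", "w")`. The result is that "low" becomes a single token shared by all three words.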

Putting it all together, this engine is not perfect, but I have never had an engine this good and this strong.

The inflection point came from three things:

It encapsulated all the knowledge in the world.

It has sufficient learning and reasoning ability. GPT-3's abilities lie somewhere between a high school student's and a college student's, while GPT-4 could not only get into Stanford but would also rank high among Stanford students.

Its domain is wide, its knowledge is deep, and it is easy to use. In natural language, the biggest breakthrough is usability. Its scalability is also good. Of course, it is still very expensive: a project this large takes more than 20,000 GPUs and several months of training. But it is not prohibitively expensive. Google can do it, Microsoft can do it, several large companies in China can do it, and startups can do it by raising funds.

Taken together, the paradigm has reached its critical point, and the inflection point has arrived.

To elaborate a bit more: I have been working on natural language for more than 20 years. Natural language processing used to have 14 tasks, and I could identify verbs and nouns and parse sentences clearly. Say you parse a sentence perfectly and know that this is an adjective, this is a verb, and this is a noun. Is the noun a pack of cigarettes? Your uncle? A tomb? A movie? No idea. What you need is knowledge. Natural language processing is useless without knowledge.

The only way to make natural language work is to have knowledge. That's exactly what the Transformer did: it compressed an enormous amount of knowledge together. This is its greatest breakthrough.

4. The Future is an Era Where Models are Everywhere

In the next 2-3 years, OpenAI's goal is to make its models sparser. Currently, the models require too much bandwidth. They also need to expand the attention window and add recursive and causal reasoning and brainstorming capabilities. Grounding aspects, such as sub-symbolic and sub-conceptual representation, can also be improved. Additionally, they plan to add:

- more modalities and more token space;
- more model stability and more latent-space alignment;
- more computation and more infrastructure tools.

These goals will likely occupy the next 2-3 years, and we have a general idea of what is needed to make this engine even larger.
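Part of why a longer attention window is expensive: in full causal self-attention, every token is scored against every earlier token. The work therefore grows with the square of the sequence length. Restricting each token to a fixed local window is one well-known sparse pattern.

The counting sketch below compares the two. The window size and sequence length are hypothetical, and the talk does not say which sparsity techniques OpenAI uses.

```python
def causal_attention_pairs(seq_len: int) -> int:
    """Query-key pairs scored by full causal attention: token i attends to tokens 0..i."""
    return seq_len * (seq_len + 1) // 2


def sliding_window_pairs(seq_len: int, window: int) -> int:
    """Pairs scored when each token attends only to itself and the previous `window - 1` tokens."""
    return sum(min(i + 1, window) for i in range(seq_len))
```

Consider an 8,192-token sequence. Full causal attention scores 33,558,528 query-key pairs, while a 512-token window scores 4,063,488. That is roughly 8 times fewer, at the cost of each token seeing less context.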

Meanwhile, the flywheel has been set in motion mainly by massive capital investment. In the US, money poured in every month from January to March 2023, driving unstoppable growth. China is similar: business models and revenue models have reached an initial scale. Infrastructure, platforms, applications, and ecosystems are developing at an accelerating pace. Both startups and large companies are entering the field.

Of course, there are still many issues with social safety and regulation, and these are OpenAI's biggest headache. Sam is putting a lot of effort into getting US society to accept this technology. OpenAI's core focus is to slow the pace of progress. With each new version, it gathers user feedback, identifies potential risks, and leaves enough time to fix them. Still, with the growth flywheel forming, the core development path will be model extensibility and the model ecosystem. It will be an era where models are everywhere.

In the future world of models, there will be more large models with more complete modalities and world knowledge. Having more knowledge, modalities, and improved learning and generalization capabilities and mechanisms will strengthen the models.

Moreover, there will be more alignment work to be done. OpenAI's current focus is broad alignment. Not everyone accepts ChatGPT, but it is stable and comprehensive enough for most people to accept, much like the US Constitution. There is a lot of alignment work to be done for natural language, code, mathematical formulas, and tables.

There will also be more cross-modal alignment. At the human scale, which mainly describes humans through their language, the current modalities are language and images, and more modalities will be added in the future. This is the level of large models.

In the era of large models, more models will be built on top of them. I believe there are mainly two types of models, plus their combinations. The first type is models of things. Every human need has its own domain or work model, including structural models, process models, demand models, and task models, especially memory and priors.

The second type is models of humans, including cognitive/task models, which are individual, and include professional models, cognitive models, motion models, and human memory priors. Humans are basically a combination of these types of models. Lawyers or doctors, for example, will have many models in their respective fields.

Over the past 1-2 months, I have gained more insight into an essential difference between the models that humans build and the models that are learned.

Firstly, humans are always building models. The advantage of human models is that they are deeper and more specialized when it comes to generalization, and are usually represented by symbols (such as mathematical formulas) or structures (such as flowcharts). However, they are not very useful in practice. Human models are either used to solve very macroscopic problems like physical formulas or very microscopic ones. For everyday problems in our lives, physics is not very helpful. There is no model that can tell me the shape of a tree's leaves or why a dog's fur is a certain color. Moreover, human models are static and do not change with the scene.

Today there are many such models, such as digital twins. They are difficult to use because the physical world is constantly changing while these models are rigid and inflexible. Models built with knowledge graphs, which I have worked on for decades, are particularly hard to compute with, and their functional structure is very poor. So while human models have the advantage of being highly specialized, they also have major shortcomings.

In contrast, learned models are essentially scene-based because their tokens are scene-based. They adapt well to changes in the environment, since the tokens change along with it. The generalization and expansion of sub-concept spaces still requires a lot of theoretical work, but this kind of model has great potential. Learned models are useful because they can align with how humans tend to communicate, through natural language, tables, and so on. Their computation is also inherently process-oriented.

There is a major problem here, however. Humans tend to express their knowledge as structures, but real problem-solving ability lies in processes, and humans are not good at expressing themselves through processes.

The ChatGPT model complements human models, and the two can be integrated over the long term. We can see a future in which many elements form a closed loop that constantly strengthens cognitive and reasoning abilities: more models, new domains, new specialties, new structures, new scenes, and new adaptability. Ultimately, of course, models must be grounded in perception and the ability to act to form true intelligence.

In a sense, 20-30 years from now, this world of models will have many similarities with the biological world. Large models, I believe, are like genes: they come in different types and then evolve. We can see the world of models, the core technology of the future, moving forward this way.

We now have a structural understanding of the paradigm of this era. So, how can we embrace this era?

5. Structural impacts on every person and industry with a "holy shit" moment every week or two.

In the past 10 months, I have personally read a lot of things every day, but recently I just can't keep up. The development speed is very, very fast. Recently, we started issuing a "Large Model Daily" because I just can't keep up with the papers and code anymore - it's just too much. Basically, there will be one or two "HOLY SHIT" moments every week for everyone in every industry.

Holy shit! You can do this now.

The world is changing rapidly. I once had this feeling in 1995-1996, but this time it's even stronger. Why? The cost of models has shifted from marginal to fixed, and knowledge creation is the evolution of models and knowledge.

Productivity will improve greatly on two levels.

First, all brain work can become cheaper and more productive. Our basic assumption today is that the cost of coders will decrease but the demand for coders will increase significantly, so coders don't have to worry. Demand for software will grow significantly, because everyone will buy it if it's cheap. Software can always solve more problems, which is not true of every industry. This is the broad-based improvement in productivity.

Second, productivity will improve deeply. Some industries are essentially model-driven, such as medicine. A good doctor is a good model, and a good nurse is a good model. The medical industry is fundamentally driven by strong models. Now that models have improved, so has science.

In the core gaming industry, our productive capacity will be fundamentally and deeply improved. Industries will develop faster because science and R&D are accelerating, and the heartbeat of every industry will quicken. Therefore, we believe the next inflection point will arrive sooner. Using large models to build robots, automation, and autonomous driving is unstoppable.

It will have a profound and systemic impact on everyone. Our hypothesis is that everyone will soon have a co-pilot, and not just one, but perhaps five or six. Some co-pilots will be strong enough to become drivers that automatically do things for you. In the longer term, each of us will have a team of drivers serving us. The basic unit of future human organization will be a real person working together with their co-pilots and drivers.

There is no doubt that it will also have a structural impact on each industry, which will be systematically reorganized. Here is a simple formula: (X - Y) × Q = cost reduction and efficiency improvement. In it:

- X is the hourly cost of human labor.
- Y is the hourly cost of the model (hardware and scale).
- Q is the quantity of work.

For example, take the average hourly wage of a knowledge worker and subtract ChatGPT's price. That price is currently around $15 per hour; in three years it may be less than $1, and in five years it may be a few cents. Then multiply by the number of hours. The resulting cost reduction or efficiency improvement turns coders into super coders and doctors into super doctors.
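Plugging numbers into Lu's formula is straightforward. The sketch below makes these readings and assumptions:

- X is the hourly cost of human labor, Y is the hourly cost of the model, and Q is the hours of work shifted to the model.
- The $15 and $1 model prices come from the talk.
- The $50 hourly wage and 2,000 hours a year are hypothetical inputs.

```python
def savings(human_hourly: float, model_hourly: float, hours: float) -> float:
    """Lu's rule of thumb, (X - Y) * Q: the cost reduction from moving Q hours of work to a model."""
    return (human_hourly - model_hourly) * hours


# Hypothetical knowledge worker: $50/hour, 2,000 working hours a year.
today = savings(50, 15, 2_000)          # model at about $15/hour today
in_three_years = savings(50, 1, 2_000)  # model at about $1/hour, per the talk's projection
```

Under these assumptions, the annual saving per worker rises from $70,000 to $98,000 as model prices fall. The formula ignores the cost of checking the model's output and any change in how much work gets done, so treat it as a rough estimate.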

Everyone can calculate according to this formula. If you are a hedge fund on Wall Street, you can short a lot of industries.

For example, lawyers in the United States average $1,500 an hour. Every day I already see posts online saying, "If you want a divorce, don't look for a divorce lawyer; ChatGPT can handle it for you cheaply!" (Everyone laughs)

The same goes for developers, designers, coders, and researchers: demand will rise for some, and costs will fall for others. This is especially true in the core industries of science, education, and healthcare. These are the three industries OpenAI has long cared about most, and they are fundamental to society as a whole.

Especially healthcare. In China, demand far exceeds supply. Moreover, China is a government-driven market economy, and the government can play a larger role because it can bear fixed costs.

Education is the most important of all. If you run a university, your first concern is how to administer exams, and there is no good way to do it: anyone who asks ChatGPT will know everything. More importantly, how should we define a good college student in the future? Suppose a college student knows nothing, not even physics or chemistry, but knows how to ask ChatGPT questions. Is he still a good college student? Opportunities and challenges coexist.

In summary, this era is advancing at a high speed, and the speed is increasing. It is determined by the structure and is unstoppable.

6. The gold rush era of big models: a structural breakdown of opportunities

Now I'll give you a structured thinking framework. You can use it to locate your own position and to understand how I think about today's opportunities.

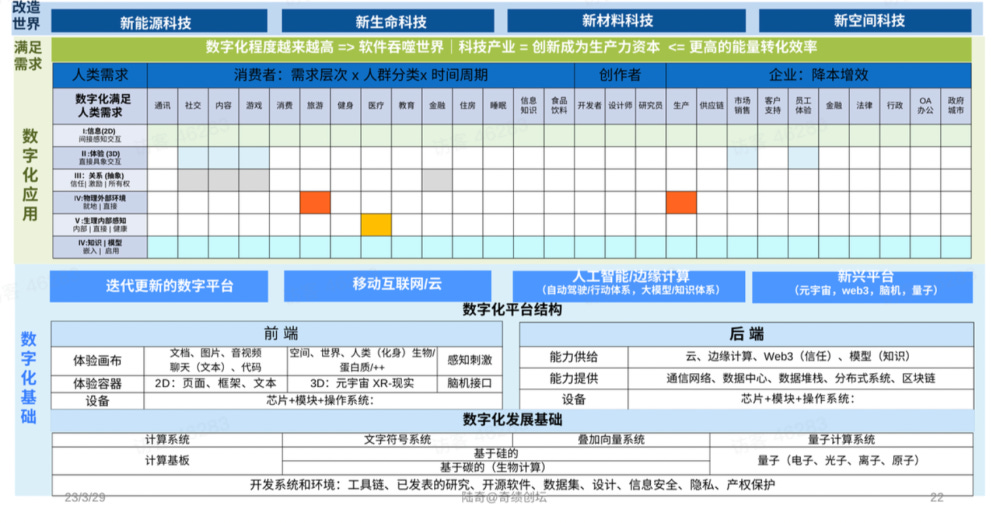

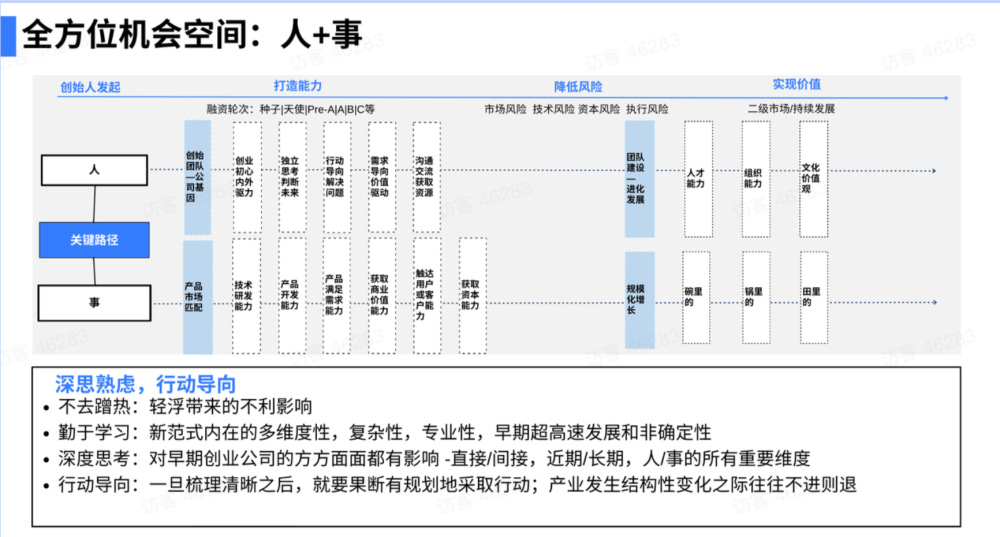

This chart is about entrepreneurial innovation driven by human technology, and all opportunities are on this chart.

First, at the bottom are digital technologies because digitization is an extension of human beings. The foundation of digitization includes platforms, development foundations, and open-source code, open-source design, and open-source data. Platforms have front-end and back-end, and there are many opportunities here.

Second, the next wave is using digital capabilities to meet human needs. We have listed these digital applications in full on this chart.

On the consumer (C-end) side, we divide people into groups and look at what they do over 24 hours: communication, socializing, content, gaming, consumption, travel, fitness, and more. There is a special group of consumers who change the world: coders, designers, and researchers. They create the future. Microsoft, a large company, is built on a simple idea. It wants to write more software and help others write more software, because writing software is the future.

On the business (B-end) side, companies' needs are the same: reduce costs and increase efficiency. They need production, supply chains, sales, customer service, and more. Once these needs are met, digitization offers six visible experience structures:

- providing information, for which two dimensions are enough;
- three-dimensional interactive experiences, in games and metaverses;
- abstract relationships between people, including trust relationships and Web 3;
- autonomous driving, robots, and the like in the physical world;
- machines used inside the human body, which today means brain-machine interfaces, with more to come and eventually silicon-based ones;
- and finally, providing models.

Finally, humans are a strange species. We not only need to meet these needs but also need to change the world, and while doing so we need to obtain more energy. So we need energy technology. It can convert energy through life sciences and biological processes, or through mechanical processes and material structures, or it can open up new spaces. This is the third wave.

So there are basically three types of entrepreneurial companies: digital foundations, using digital to solve human needs, and changing the physical world with digitization. With this large framework, we can systematically match our position and understand which points we need to focus on.

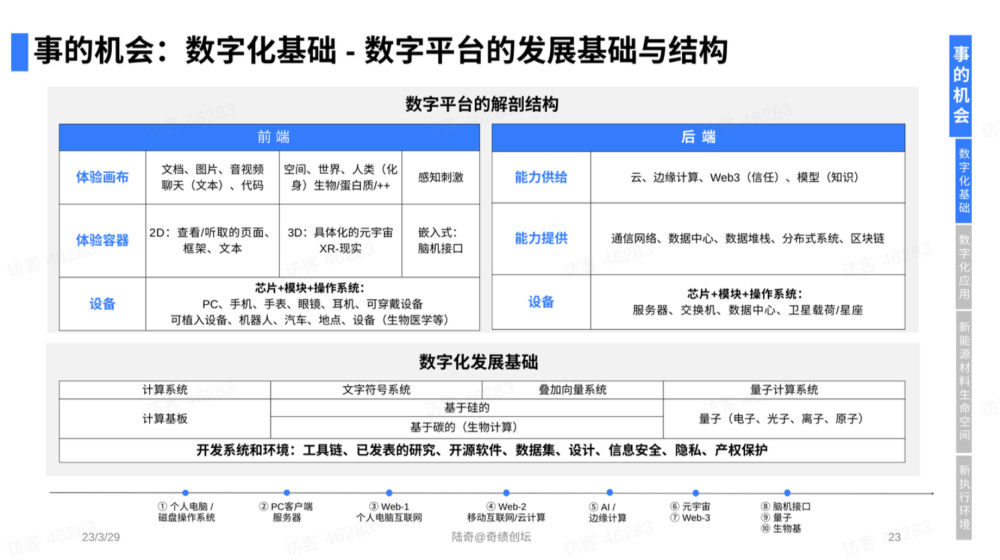

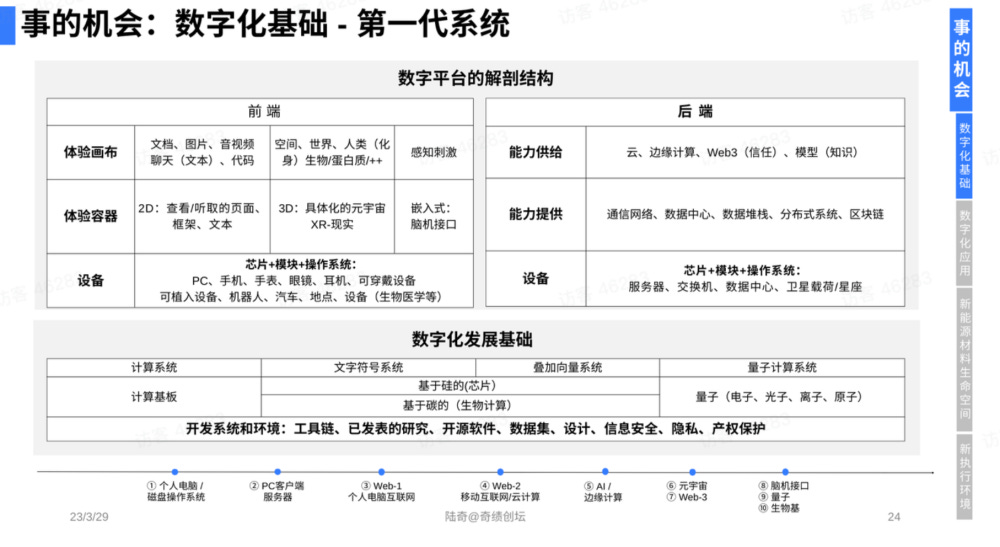

First, let's talk about the digital foundation, which has a stable structure that remains the same no matter how it develops. I have dealt with most systems to some extent over the past 30 years, and this structure is indeed quite stable.

The core is the front end and the back end. The front end is a complete, extensible experience, and the back end is a complete, extensible capability. The front end includes the device side, such as computers, phones, glasses, and cars, which contain chips, modules, and operating systems. Trillion-dollar companies are all in this layer.

Secondly, there are containers for experiences, two-dimensional and three-dimensional containers, as well as internally embedded containers.

Above the container sits the canvas, a concept familiar to anyone who writes code. It can be a document, a chat, code, a space, a world, a digital human, or even a protein in a carbon-based life form. Together, these layers make up the front end.

The back end has the same structure. At the bottom are devices such as servers, switches, and data centers, along with chips, modules, and operating systems.

The middle layer is very important, including the network data stack, distributed systems, and blockchain, among others.

At the top is the cloud, which supplies capabilities. It works like running water: you tap into it for computing power, storage, and communication. In the era of models, what the cloud opens up is models themselves.

This is the digital foundation. Traditional computing is symbolic. So-called deep learning adds vector floating-point computation on top of it, whether on a silicon or a carbon substrate.

If you are an entrepreneur in this area, where are the opportunities?

First, systems that move information are still a major opportunity in this era.

Second, if you're working on models, I think everything needs to be rebuilt, with big models first. Many devices need to be redesigned to support big models. There are also opportunities in containers, in the cloud, and in the underlying infrastructure, including the open-source ecosystem. That is where the real opportunities lie.

Third-generation systems have already begun to take shape: robots, automation, and autonomous systems. Masayoshi Son is going all-in on this, and Musk also sees the opportunity. Big models can power these systems too. Startups can fully seize these opportunities at the next turning point, as the third generation arrives.

In parallel, there are "third-generation++ systems," such as carbon-based biological computing and quantum computing, and companies have many opportunities in this category. The metaverse and Web 3 are somewhat out of favor today. Over the long course of history, though, their rise is only a matter of time, because these technologies can create real value for humanity in the future.

So, if you're a startup, the opportunity is in the foundation layer. This is the best business. Why? This era is a lot like the gold rush. Back then, many of the people who went to California to pan for gold died, but those who sold picks and shovels always made money. This is the so-called "picks and shovels" business.

Big models are platform opportunities. Based on our assessment over the past few days, model-centric platforms will be larger in scale than information-centric ones. Platforms have the following characteristics:

They are ready to use out of the box.

They must have a simple, good-enough business model so that developers can make a living on them and sustain themselves. Otherwise, it is not a platform.

They have their own killer application, and ChatGPT itself is one. Building on today's platform companies is like being in the Apple ecosystem. No matter how well you do, once you grow big, Apple will take over what you do, because it controls the underlying technology. So you want to be the platform yourself. Platforms generally have their own anchor points and strong pillars of support. In the long run, OpenAI has many opportunities: it could become the first $10 trillion company in history.

This is a fierce battle for platform dominance, and the future of this field will be dominated by a company of enormous scale. The prize is too big, and missing out would be too costly. You have to give it a try.

Today, model robustness, or rather fragility, is still a problem. When using these models, start with a narrower scope and strict constraints so that the experience stays stable. As the models grow more capable, you can broaden the scope step by step and find suitable scenarios. Balancing quality against breadth is essential. When planning your development path, you also need to make two decisions. First, will you modify the product on its current foundation, start over from scratch, or pursue both in parallel? Second, will you restructure the team or acquire an outside company?

Innovation, especially when a startup puts it into practice, is always both technology-driven and demand-driven. In execution, the key is to understand, capture, and satisfy demand. In the long run, technology is always the main driver. During execution, though, understanding, analyzing, and organizing demand is the most critical part.

It is now an open secret that OpenAI is using GPT-4 to build GPT-5, and that every programmer's abilities are being amplified. This changes scale effects, Matthew effects, barriers to entry, the competitive landscape, intellectual property rights, and internationalization patterns. China clearly has opportunities in this area.

7. I have a few pieces of advice for entrepreneurs.

Internally, a startup is a combination of people and work. The founder or founding team is the initial driving force. They bring intrinsic and extrinsic motivation, independent thinking, an orientation toward action and demand, and the ability to acquire resources through communication. The next step is product-market fit, which involves researching and developing the technology and the product and delivering them. The business model is about getting paid, growing, reaching more customers, raising more money, and realizing future value. On the organizational side, talent, structure, and cultural values are built systematically to prepare the company for the future. Together, these make up a company.

Our advice to every student is to think before acting.

Don't be impetuous, and don't chase trends. I personally oppose trend-chasing. If you want to build big models, think carefully about what that means and in which dimensions it relates to your startup's direction. Trend-chasing is the worst thing you can do, and it wastes opportunities.

At this stage, study diligently. The new paradigm has many dimensions and considerable complexity. Read the relevant papers, especially since the field is moving so quickly and uncertainty is high. Learning takes time, but I strongly recommend investing it.

Once you have thought it through, act in a goal-oriented, planned way. If this shift has a structural impact on your industry, you either move forward or fall behind; there is no standing still. If your industry is directly affected, you have to act.

Next, I want to discuss several dimensions, since every company is a combination of capabilities.

On product development: if your company is primarily software-based, this will undoubtedly have a long-term impact on you. This is especially true for consumer-facing (C-end) products. User experience design will certainly be affected, and you need to think seriously about what to do next.

If your company does its own R&D, the short-term impact will be partial and indirect, mainly helping you think through technical design. The long-term development of core technology will also be affected. Today, chip design relies heavily on tools, and in the future big models will undoubtedly affect chip R&D. The same goes for protein structure design. Whatever you do, future technology will have an impact. It may not be direct in the short term, but it may be significant in the long term.

Your ability to meet demand depends on reaching users, supply chains, and operations. GPT can help with software operations, but probably not with hardware supply chains. In the long run, there are opportunities for change, because the upstream and downstream structure will shift. You need to judge whether the structure of your industry will change.

AI can also help you think through and iterate on how you explore business value, reach users, and raise funds.

Finally, let's talk about talent and organization.

Let's start with founders. Today, a founder's technical ability may seem very important, but it will matter less in the future, because ChatGPT and similar technologies can help with the technical side. What will matter more for founders is willpower and determination. This means a unique judgment about the future and belief in it, persistence, and strong resilience. These will increasingly be a founder's core qualities.

For startup teams, these tools can help explore directions and speed up iteration on ideas, products, and even resource acquisition.

To develop talent for the future, people need to learn the tools, think about and explore opportunities, and, at the right time, train their own prompt engineers.

As for building organizational culture, it is important to think deeply and prepare early to seize the opportunities of this era. Consider especially how to coordinate different roles, such as coding and design, where many functions may already have a copilot.

This era brings both opportunities and challenges. We recommend that you consider it from every angle.