🪷Chinese Perspectives on OpenAI DevDay, NVIDIA’s New AI Chips for China, and Zen Buddhism Meets AI

Weekly China AI News from November 6 to November 12

Hello readers, what a crazy week for AI, from OpenAI’s GPT-4 Turbo and GPTs, to Elon Musk’s Grok, Amazon’s rumored Olympus, and Humane’s AI pin. Although AI developments in China were relatively quiet last week, there’s still plenty to explore:

OpenAI’s DevDay was a hot topic among AI enthusiasts in China. Check out what Chinese developers, startup founders, and VCs think of the event.

NVIDIA is not giving up on China’s AI chip market and is set to announce three new AI chips on November 16.

Abbot Shi Yongxin of the Shaolin Temple made a surprise appearance at Meta’s headquarters in San Francisco, where he spoke to a gathering about “Zen and AI”.

ERNIE Bot has surpassed 70 million users, according to Baidu’s CTO.

Mixed Reactions from Chinese Entrepreneurs and VCs on OpenAI’s DevDay: Shock, Enthusiasm, and Concerns

What’s New: OpenAI’s first-ever Developer Day in San Francisco last Monday sparked a level of excitement reminiscent of tech’s golden days. Insider even claimed that the enthusiasm matched the days when Steve Jobs unveiled the iPhone.

This event didn't just electrify the American tech community; it also captivated AI enthusiasts in China. Top Chinese tech media reporters stayed up until 2 am to catch the live stream and churned out in-depth reviews by dawn. Developers’ excitement, shock, and reflections spread across social media. The event was acclaimed as the AI Chunwan, drawing parallels to the globally watched Chinese New Year’s Eve Spring Festival Gala.

Chinese Perspectives: In a comprehensive survey by Chinese tech media outlet Leiphone, numerous Chinese startup founders and venture capitalists shared their insights on OpenAI’s Developer Day. Their perspectives offer a unique glimpse into how this significant event resonated with the tech community in China.

👍🏻“Chasing OpenAI in OpenAI’s way is a daunting task,” said Zhou Bowen, founder of Frontis.ai and Chair Professor of Tsinghua University.

👎🏻“The conference didn’t showcase any significant technological advancements,” Jia Yangqing, creator of Caffe and founder of Lepton.ai, noted. “The highlight was the extended and more affordable support for GPT-4 and GPTs, particularly the latter with its new JSON mode and function calls.”

👍🏻“Small and medium developers should abandon delving deeper into LLM technology,” Guo Xu, CTO of a medical AI company, said. “Instead, they should shift their focus to applying these technologies in specific business scenarios.”

👍🏻“The essence of this AI revolution is in AI agents, with LLMs serving as just one part of the infrastructure,” said Zhang Jinjian, founding partner of Vitalbridge.

👍🏻“LLMs form the base for new AI-native software. OpenAI’s GPT Store and Assistant API have significantly influenced the development landscape,” Zhou Jian, CEO of Xbotspace, remarked. Regarding the GPT Builder feature, Zhou noted its limited development, calling it more of a “toy” than a practical tool, especially for professional engineering teams.

👎🏻“OpenAI’s tools are primarily shallow, UI-related, and don’t delve into the core logic of serious AI applications. It seems they aim to standardize the user interface, similar to Apple’s approach,” Zhang Hailong, CEO of Babel.cloud, added.

👍🏻“OpenAI’s new releases won’t affect Chinese LLM players. Those who could use OpenAI wouldn't consider domestic ones. Those who couldn’t use it before won’t use it later either, as OpenAI is still not compliant to enter the Chinese market,” said AI entrepreneur Ding Xin.

👍🏻“It’s hard for global developers to keep up with OpenAI,” said Wu Bo, CEO of Yiyuan.ai, adding that competitors need to chase OpenAI more vigorously and invest on a larger scale. He expects the entire LLM field to go through a faster survival-of-the-fittest shakeout, with the remaining players forced to differentiate themselves.

NVIDIA Adapts to U.S. Export Controls with New AI Chips for China

What’s New: NVIDIA is set to announce three new AI chips tailored for the Chinese market on November 16, as reported by Chinastarmarket. This move comes as a response to the latest U.S. chip export restrictions.

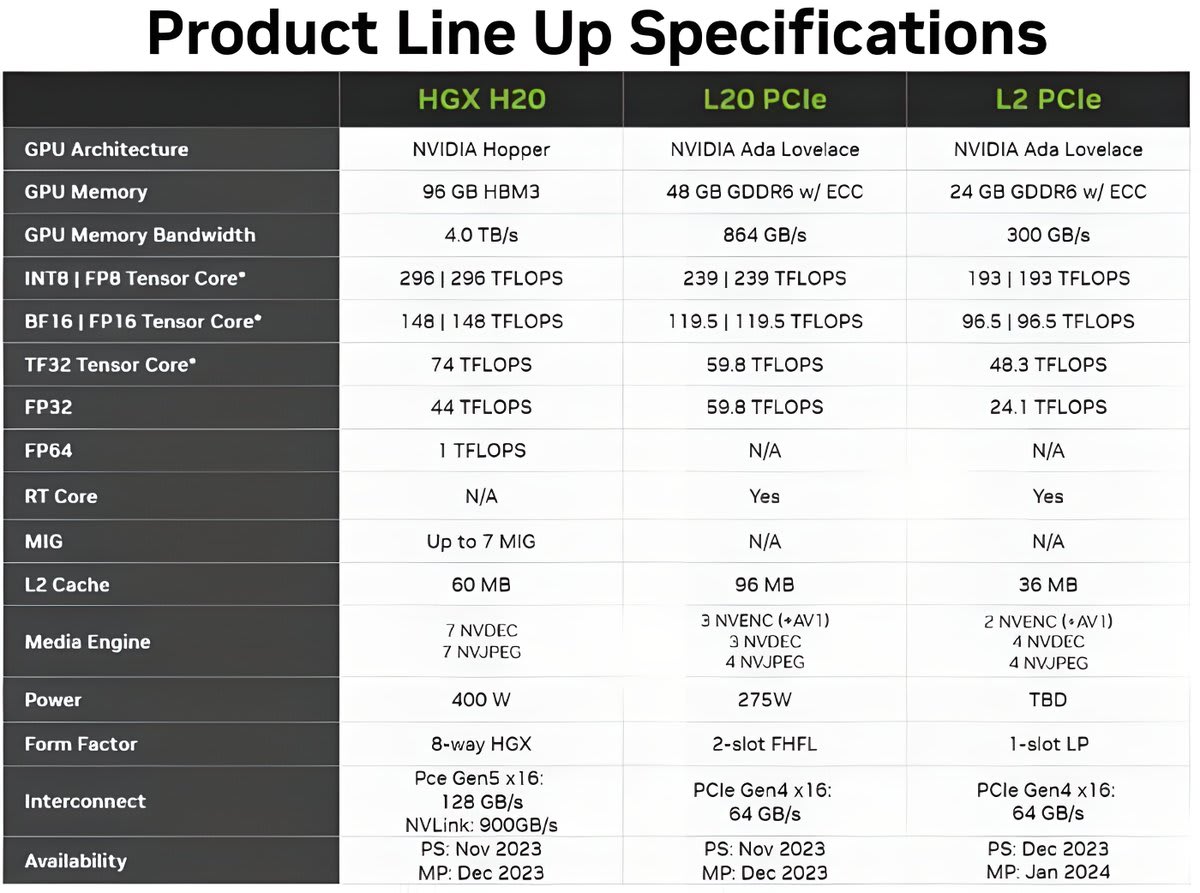

The new models, named HGX H20, L20 PCIe, and L2 PCIe, are based on NVIDIA’s Hopper and Ada Lovelace architectures. These chips are not just modified but significantly scaled-down versions compared to the original H100 chip, TMTPost reported.

How It Works: The upcoming NVIDIA chips target different AI computing needs, with the HGX H20 focused on training, while the L20 and L2 PCIe chips are geared towards inference tasks.

The leaked product specification document shows that the HGX H20 delivers 1 TFLOPS of FP64 compute for HPC, compared with the H100’s 34 TFLOPS, and 148 TFLOPS of FP16/BF16 compute, far below the H100’s 1,979 TFLOPS.

TMTPost exclusively reported that the H20 chip is expected to deliver only about 20% of the compute of NVIDIA’s flagship H100 GPU “theoretically”. According to the report, NVIDIA limits bandwidth and compute speed while adding HBM memory and NVLink interconnect modules to improve cost efficiency. Although it is priced below the H100, the H20 is still expected to be more expensive than domestic Chinese AI chips like Huawei’s 910B.
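As a quick sanity check on the figures quoted above, the following minimal Python sketch computes the H20’s share of the H100’s peak throughput at each precision. It uses only the four TFLOPS numbers from the leaked specification as reported here; the dictionary layout and the function name `h20_share_of_h100` are illustrative choices, not anything from NVIDIA or TMTPost.

```python
# Peak throughput in TFLOPS, exactly as quoted in the paragraph above.
# The data structure and names are illustrative assumptions.
SPECS_TFLOPS = {
    "FP64": {"H20": 1.0, "H100": 34.0},
    "FP16/BF16": {"H20": 148.0, "H100": 1979.0},
}


def h20_share_of_h100(h20: float, h100: float) -> float:
    """Return H20 throughput as a percentage of H100 throughput."""
    return 100.0 * h20 / h100


# Percentage of the H100 figure that the H20 reaches, per precision.
ratios = {
    precision: h20_share_of_h100(values["H20"], values["H100"])
    for precision, values in SPECS_TFLOPS.items()
}

for precision, pct in ratios.items():
    print(f"{precision}: H20 delivers {pct:.1f}% of the H100 figure")
```

On these two quoted figures alone, the H20 comes out at roughly 3% (FP64) and 7.5% (FP16/BF16) of the H100. Both are below the “about 20%” estimate, which presumably rests on a different basis that the report does not spell out.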

Why It Matters: NVIDIA’s new chips underline the company’s commitment to the Chinese market despite tightening U.S. export controls. The scaled-down specifications are meant to keep the chips within the new export control rules set by the U.S. Department of Commerce’s Bureau of Industry and Security (BIS). Announced on October 17, these rules significantly tighten restrictions on semiconductor products, including NVIDIA’s high-performance AI chips, with prohibitions taking effect on October 23. The ban immediately impacted key products like the A800, H800, and L40S.

Zen Buddhism Meets AI: Abbot Shi Yongxin’s Dialogue at Meta

What’s New: Shi Yongxin, the current abbot of Shaolin Temple, made a surprise appearance at Meta’s headquarters in San Francisco on November 2, 2023, where he addressed a gathering. His speech, titled “Zen Buddhism Encounters AI,” explored the intersection of ancient Eastern philosophy and modern technology.

How It Works: Abbot Shi Yongxin elaborated on the profound impact of AI on traditional beliefs and how the convergence of Zen Buddhism with 21st-century technology offers new insights.

When Zen practitioners encounter confusion and obstacles, Abbot Shi said, “AI can assist by searching relevant scriptures, thereby addressing doubts and providing support and convenience for (Zen) practitioners.”

However, he posed crucial questions about the role and limits of AI. For example, while AI can process data and mimic human perception, it lacks the consciousness and enlightenment central to Zen. He suggested that in an era of tech advancement, maintaining a clear mind and seeking inner enlightenment remains vital.

Why It Matters: Abbot Shi’s recent visit to Meta seemed to be a part of his North American tour. This event, marking Meta’s first public engagement with outside celebrities since the pandemic, is also a positive step towards enhancing cross-cultural communication between the U.S. and China.

Weekly News Roundup

📈 Baidu CTO Wang Haifeng announced at the 2023 World Internet Conference in Wuzhen that the company’s AI chatbot ERNIE Bot has reached 70 million users and 4,300 application scenarios since its public launch on August 31.

🎨 Douyin’s video editing app Jianying is testing Dreamina, a text-to-image tool, where users can create four AI-generated images featuring various artistic styles from abstract to realistic.

🔓 Alibaba Group CEO Wu Yongming revealed at the 2023 World Internet Conference in Wuzhen that Alibaba is set to open-source a 72-billion-parameter model, which would be the largest open-source LLM in China.

🚀 Zhipu AI, backed by Alibaba and Tencent, is reportedly raising funds at a valuation of 20 billion yuan. Zhipu AI recently announced a cumulative fundraising of over 2.5 billion yuan this year, with investors like Meituan, Ant Group, Xiaomi, and Hongshan (formerly Sequoia China).

🛡️ Huawei recently filed a patent for “A Language Model Protection Method, Device, and Computer Equipment Cluster” aimed at copyright protection for AI models, enabling auto-generation of watermarked response messages based on user requests.

Trending Research

The I2VGen-XL approach significantly improves video synthesis by addressing challenges in semantic accuracy, clarity, and spatio-temporal continuity. It uses a two-stage process with hierarchical encoders and additional text input to enhance details and resolution. The model is trained on millions of text-video and text-image pairs, outperforming current methods in extensive experiments. The source code and models will be publicly available. Read the paper I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models.

Latent Consistency Models (LCMs), distilled from latent diffusion models, accelerate text-to-image tasks with high-quality output and require only ~32 hours of A100 GPU training. The report extends LCMs to larger models with less memory use and introduces LCM-LoRA, a universal acceleration module for various image generation tasks. LCM-LoRA shows strong generalization abilities as a plug-in neural PF-ODE solver. Read the paper LCM-LoRA: A Universal Stable-Diffusion Acceleration Module.

This study applies brain localization concepts to LLMs, discovering a core region responsible for linguistic competence, comprising about 1% of the model’s parameters. Perturbations in this region significantly impact language skills. The research also finds that linguistic improvement doesn't always mean increased knowledge, suggesting separate knowledge areas within LLMs. This exploration provides valuable insights into the intelligence foundation of LLMs. Read the paper Unveiling A Core Linguistic Region in Large Language Models.

Multimodal Large Language Models (MLLMs), like Google’s Bard, face significant safety and security risks due to their vulnerability to adversarial attacks, particularly in vision-based inputs. This study reveals Bard’s susceptibility, with a 22% success rate in misleading it using adversarial examples, which also affect other MLLMs like Bing Chat and ERNIE Bot. Although Bard has defenses such as face and toxicity detection, these mechanisms are found to be easily evadable, highlighting the need for improved robustness in MLLMs. The research also demonstrates a 45% attack success rate against GPT-4V using the same adversarial methods. Read the paper How Robust is Google's Bard to Adversarial Image Attacks?