🚗 China's New Autonomous Vehicle Regulation, Microsoft's AI Pledge for Shanghai, and AI Deciphers 'Interstellar' After Viewing

Weekly China AI News from December 4 to December 10

Hello readers, while last week was relatively calm in China's AI landscape, there were still some noteworthy updates. China's Ministry of Transport introduced an encouraging new guideline for autonomous vehicles that is particularly beneficial for robotaxi services. Microsoft President Brad Smith met with Shanghai's Party Secretary to discuss bolstering AI initiatives in the city. And an AI model can now watch feature-length films like Interstellar and Forrest Gump and answer questions about them.

China’s New Guideline for Autonomous Vehicles Gives Green Light to Driverless Robotaxis

What’s New: China’s Ministry of Transport (MOT) published the Guidelines for Autonomous Vehicle (AV) Transport Safety Services (Trial Implementation) last week, a significant step in regulating and promoting the safe use of AVs in transport services.

How It Works: The Guidelines focus on safety operator deployment, requirements for autonomous transport operators, and the operational scope for autonomous driving (AD). The first and last aspects are highlighted below. The Guidelines cover four vehicle categories: taxis, city buses, passenger transport vehicles (including intercity buses), and freight trucks.

Fully autonomous taxis, or robotaxis (Level 5, with no steering wheel or brake pedal), can operate with remote rather than onboard safety operators in designated areas, subject to local approval. The Guidelines require at least one remote operator for every three vehicles.

Taxis with conditional (Level 3) and high (Level 4) autonomous capabilities, along with city buses and passenger transport vehicles, must have one safety operator on board.

Freight trucks are required to have onboard safety operators.

Safety operators must undergo specialized training and be familiar with the autonomous vehicle’s routes.

Autonomous buses are allowed in controlled or simple road conditions.

Robotaxis can operate in areas with good traffic conditions.

Passenger transport vehicles should proceed cautiously with autonomous operations.

Autonomous freight vehicles are permitted for point-to-point highway transportation and in controlled urban roads.

Autonomous vehicles are prohibited from transporting hazardous goods by road.
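The staffing rules above can be sketched as a small lookup. This is only an illustration, not part of the Guidelines: the function name, the category labels, and the reading of the 1:3 rule as "round up to one remote operator per three robotaxis" are assumptions, and the sketch ignores the local approval and designated-area conditions.

```python
import math

# Assumption: the remote-operator rule is read as "at least one remote
# operator for every three fully autonomous taxis", rounded up.
VEHICLES_PER_REMOTE_OPERATOR = 3

# Hypothetical category labels for the four vehicle types in the Guidelines.
CATEGORIES = {"taxi", "city_bus", "passenger_vehicle", "freight_truck"}


def min_safety_operators(category: str, fleet_size: int,
                         fully_autonomous: bool = False) -> tuple[int, int]:
    """Return (onboard_operators, remote_operators) for a fleet."""
    if category not in CATEGORIES:
        raise ValueError(f"unknown vehicle category: {category}")
    if fleet_size < 0:
        raise ValueError("fleet_size must be non-negative")

    # Only fully autonomous taxis may swap onboard operators for remote ones.
    if category == "taxi" and fully_autonomous:
        remote = math.ceil(fleet_size / VEHICLES_PER_REMOTE_OPERATOR)
        return (0, remote)

    # Every other case needs one safety operator on board each vehicle.
    return (fleet_size, 0)


robotaxi_staffing = min_safety_operators("taxi", 10, fully_autonomous=True)
truck_staffing = min_safety_operators("freight_truck", 5)
```

Under these assumptions, a fleet of ten fully autonomous robotaxis needs four remote operators, while five freight trucks need five onboard operators.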

Why It Matters: The Guidelines are a significant development in the regulation of autonomous vehicles in China. They authorize fully driverless robotaxis to operate on public roads at the national level, a major step forward from the previous practice in which only local governments, such as Beijing's, granted approvals to robotaxi operators like Baidu Apollo and Pony.ai.

Compared with the draft released for public comment in August 2022, the final Guidelines introduce one noteworthy change: they mandate onboard safety operators for city buses and passenger transport vehicles, regardless of their level of autonomous driving capability. In turn, the final version expands the operating conditions for city buses from “Closed Rapid Transit Bus” to “Controlled or Simple Road Conditions.”

Shanghai Welcomes Microsoft to Facilitate AI for Local Enterprises

What’s New: On December 5, Shanghai Party Secretary Chen Jining met with Microsoft President and Vice Chair Brad Smith. During the meeting, Chen expressed that Shanghai welcomes Microsoft to advance AI technologies to power corporate transformation.

How It Works: Chen said that Microsoft, as a leading global technology enterprise, will find promising opportunities in Shanghai’s transformation towards digitalization, intelligence, and sustainability. Chen emphasized Shanghai's commitment to creating a market-oriented, law-based, and international business environment. This commitment includes adapting regulatory systems to high-level openness and new technological changes.

Smith shared Microsoft’s business progress and future plans in China and reaffirmed Microsoft’s confidence in China’s future. He pledged ongoing investment in research and development and deepened relationships with Chinese partners. Microsoft also aims to empower fundamental research, industrial upgrades, and the city’s green and low-carbon transformation with AI.

In March, Microsoft and Shanghai’s Xuhui District announced a continuation of their strategic collaboration. This partnership focuses on leveraging Microsoft’s tech to digitize local industries, including manufacturing, healthcare, logistics, and the Internet.

Why It Matters: The meeting and the accompanying statements indicate Microsoft’s willingness to expand its AI presence in China beyond research and development. Microsoft Copilot, the company’s AI chatbot for productivity, has been available in 169 countries since December 1, 2023, but it remains unavailable in mainland China.

AI Can Now Watch Interstellar and Pen Its Own Review

What’s New: AI can now watch and understand the 2-hour-and-49-minute sci-fi epic Interstellar and answer specific plot-related questions, even (Spoiler Alert!!!) working out how Joseph Cooper communicates with Murph Cooper: in Morse code, via a wristwatch.

How It Works: Meet LLaMA-VID, an innovative model that significantly reduces computational overhead by representing each video frame with just two tokens: a context token and a content token. The context token captures the overall image context based on user input, while the content token focuses on the specific visual cues within each frame.
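To get a feel for why two tokens per frame matters, here is a back-of-the-envelope calculation. It is a sketch under stated assumptions: the sampling rate of one frame per second and the 256-tokens-per-frame baseline are illustrative values chosen for comparison, not figures reported above, and the function name is hypothetical.

```python
def video_token_count(duration_seconds: int, frames_per_second: int,
                      tokens_per_frame: int) -> int:
    """Total visual tokens needed to represent a video."""
    frames = duration_seconds * frames_per_second
    return frames * tokens_per_frame


# Interstellar runs 2 hours and 49 minutes.
INTERSTELLAR_SECONDS = (2 * 60 + 49) * 60

# Assumption: the video is sampled at one frame per second.
SAMPLING_FPS = 1

# Two tokens per frame: one context token plus one content token.
two_token_total = video_token_count(INTERSTELLAR_SECONDS, SAMPLING_FPS, 2)

# Assumption: a hypothetical baseline that spends 256 tokens on each frame.
baseline_total = video_token_count(INTERSTELLAR_SECONDS, SAMPLING_FPS, 256)

print(f"two tokens per frame: {two_token_total:,} tokens")
print(f"256 tokens per frame: {baseline_total:,} tokens")
print(f"reduction factor:     {baseline_total // two_token_total}x")
```

With these assumed values, the whole film fits in roughly twenty thousand visual tokens, whereas the hypothetical baseline would need about 2.6 million, far beyond what typical LLM context windows can hold.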

LLaMA-VID has shown exceptional performance on multiple video question-answering and inference benchmarks, surpassing previous methods in both video and image understanding. The researchers also built a dataset from 400 movies, generating over 9,000 long-video question-answer pairs that cover movie reviews, character development, and plot reasoning, and used it to train LLaMA-VID.

Who’s Behind: The LLaMA-VID research is led by Jia Jiaya, formerly the head of Tencent’s YouTu AI lab. Jia is currently a professor at the Chinese University of Hong Kong and the founder of SmartMore, a company that raised $300 million in 2020 and 2021.

Weekly News Roundup

🌟 Meitu releases the MiracleVision 4.0 model, adding AI Design and AI Video capabilities compared to the previous version. The model will be progressively applied to Meitu products starting January 2024.

🌐 The National Supercomputing Center in Guangzhou last week launched “Tianhe Xingyi,” a new-generation supercomputing system that supports high-performance computing and the training of LLMs. The new supercomputer improves on “Tianhe-2” in general CPU computing, networking, storage, and application service capabilities.

💻 Yibo Digital recently signed an agreement with Baichuan AI, providing Baichuan AI with the computing power and resources of Nvidia smart servers, along with accompanying software, applications, and technical services, in a deal estimated to be worth 1.382 billion yuan.

👤 Baidu AI Cloud launched Super Assistant last week, built upon ERNIE Bot. The Super Assistant is available to enterprises as a browser extension.

🤖 According to the South China Morning Post, ByteDance is set to launch an open platform allowing users to create their own chatbots, with a public beta version of this Bot Development Platform project expected by the end of this month.

🏆 On December 8, Alibaba’s Qwen model topped HuggingFace’s latest open-source large model rankings.

🚀 Raccoon, an intelligent programming assistant built on SenseTime’s LLM, is now open for public beta testing.

Trending Research

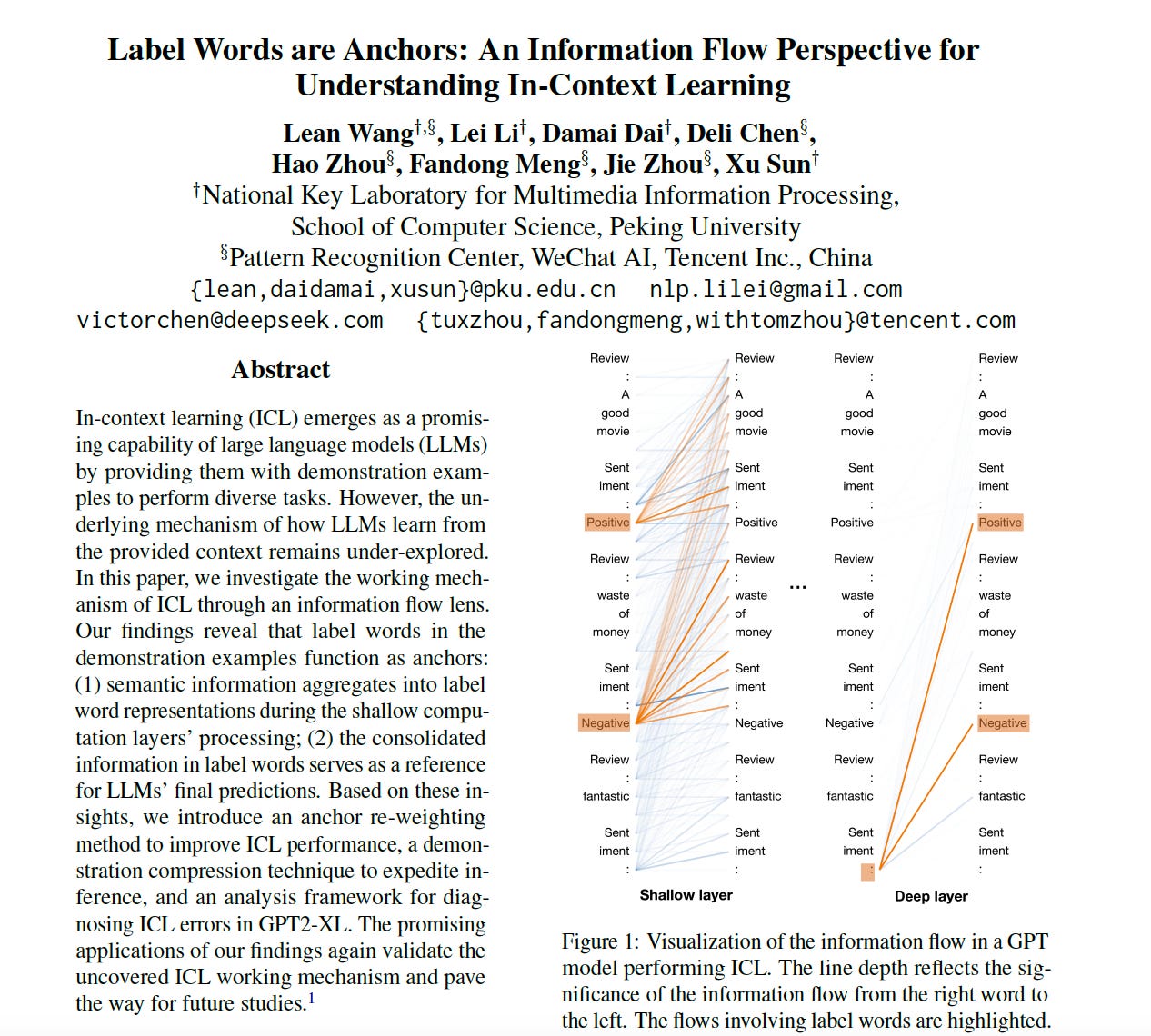

In-context learning in LLMs uses the label words in demonstration examples as anchors for semantic information, guiding predictions. Building on this finding, the study introduces methods to enhance in-context learning, speed up inference, and diagnose errors in models like GPT2-XL. Read the paper Label Words are Anchors: An Information Flow Perspective for Understanding In-Context Learning (Best EMNLP Long Paper).

Alpha-CLIP, an advanced version of CLIP, focuses on specific image regions using an auxiliary alpha channel and millions of RGBA region-text pairs, enhancing task-specific understanding and enabling controlled image editing across various applications. Read the paper Alpha-CLIP: A CLIP Model Focusing on Wherever You Want.

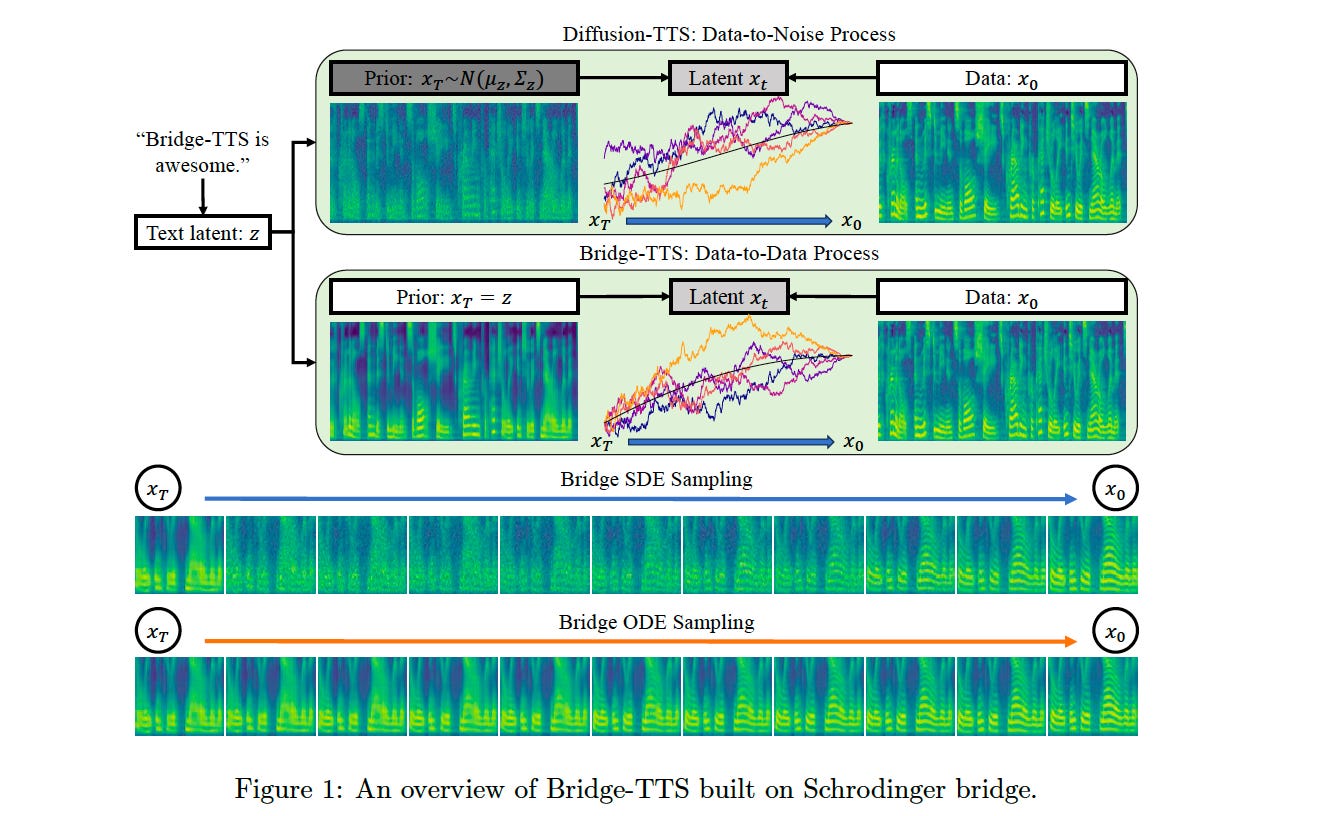

Bridge-TTS, a novel text-to-speech system, replaces the noisy Gaussian prior in diffusion models with a clean, deterministic prior derived from the text input, enhancing synthesis quality and efficiency and outperforming existing models in various scenarios. Read the paper Schrodinger Bridges Beat Diffusion Models on Text-to-Speech Synthesis.