China's 1.75-Trillion-Parameter Language Model; Tencent's Wheel-Legged Robot; 16×16 for ViT?

China's AI news in the week of May 31, 2021

A significant change to the Recode China AI newsletter: starting this week, I will publish a weekly issue summarizing the latest AI-related stories and investment news in China, covering cutting-edge research developments, use cases, and investment opportunities. Please subscribe here to support Recode China AI.

This new Chinese pre-trained model is 10 times bigger than GPT-3.

Three months ago, the state-sponsored research lab Beijing Academy of Artificial Intelligence (BAAI) introduced Wu Dao (悟道), the so-called “first large-scale Chinese intelligent model system.” Wu Dao 1.0 comprises four different models tailored for language understanding, multimodal pre-training, language generation, and biomolecular structure prediction.

This week, BAAI took a major step forward by unveiling Wu Dao 2.0 at its annual BAAI Conference in Beijing. A significant improvement over its predecessor, Wu Dao 2.0 supports a range of downstream tasks, including question answering, language generation, semantic understanding, image captioning, and text-to-image/video generation. BAAI researchers touted the model’s performance, claiming state-of-the-art results on 9 benchmarks (shown below). BAAI has opened Wu Dao 2.0’s APIs to the public.

Wu Dao 2.0’s selling point is its Brobdingnagian size: 1.75 trillion parameters, 10 times as many as OpenAI’s GPT-3 (175 billion), trained on China’s Sunway supercomputer. It also surpassed Google’s Switch Transformer (1.6 trillion parameters) to become the world’s largest language model.

So how did BAAI scale the model to this size? The answer is FastMoE, BAAI’s home-grown Mixture-of-Experts (MoE) system and the first distributed MoE training system built on PyTorch. The trick behind MoE is that it combines many expert sub-networks and uses a gating network to decide which experts process each input. Because only a few experts run for any given input, the total parameter count can grow much faster than the computation spent per input. More details can be found in the paper FastMoE: A Fast Mixture-of-Expert Training System.
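To make the gating idea concrete, here is a minimal sketch of a sparse top-k MoE layer in plain Python. It is a toy, not FastMoE’s API: the scaling “experts”, the linear gate, and names such as `TopKMoE` and `k` are illustrative assumptions, and the distributed machinery FastMoE is built for (spreading experts across GPUs and exchanging inputs between them) is left out.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(w: Vector, x: Vector) -> float:
    return sum(a * b for a, b in zip(w, x))


class TopKMoE:
    """Toy sparse MoE layer: a linear gate sends each input to its top-k experts."""

    def __init__(self, experts: List[Expert], gate_weights: List[Vector], k: int = 2):
        if len(experts) != len(gate_weights):
            raise ValueError("need one gate weight vector per expert")
        self.experts = experts
        self.gate_weights = gate_weights  # hypothetical linear gate: one row per expert
        self.k = k

    def route(self, x: Vector) -> List[Tuple[int, float]]:
        """Return (expert index, mixing weight) for the k highest-scoring experts."""
        scores = [dot(w, x) for w in self.gate_weights]
        top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[: self.k]
        weights = softmax([scores[i] for i in top])  # renormalise over chosen experts only
        return list(zip(top, weights))

    def __call__(self, x: Vector) -> Vector:
        out = [0.0] * len(x)  # assumes every expert preserves the input dimension
        for idx, weight in self.route(x):
            y = self.experts[idx](x)  # only the selected experts are evaluated
            out = [o + weight * v for o, v in zip(out, y)]
        return out


def make_scaling_expert(factor: float) -> Expert:
    """Hypothetical stand-in for an expert sub-network: multiplies its input."""
    return lambda x: [factor * v for v in x]


experts = [make_scaling_expert(f) for f in (1.0, 2.0, 3.0, 4.0)]
gate = [[2.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 2.0]]
moe = TopKMoE(experts, gate, k=2)

print(moe.route([1.0, 0.0]))  # experts 0 and 1 win for this input
print(moe([1.0, 0.0]))
```

Only the two selected experts are evaluated for each input, which is why adding experts raises the parameter count without a proportional rise in per-input compute. Renormalising the softmax over just the selected scores is one common gating choice among several.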

To be continued…

Tencent's wheel-legged robot can do a 360-degree flip

At the annual International Conference on Robotics and Automation (ICRA), Tencent Robotics X Lab unveiled a wheel-legged robot christened Ollie. It can perform various control tasks, such as vertical jumps (up to 60 cm), traversing uneven ground, and even a 360-degree front flip.

A hybrid wheel-legged robot combines the mobility of wheels with the obstacle-crossing ability of legs. An IEEE Spectrum article published last December, headlined Wheels Are Better Than Feet for Legged Robots, also reflected the rapid rise of wheel-legged robots such as Boston Dynamics’ Handle and ETH Zurich’s Ascento.

Ollie was presented at ICRA in the paper Balance Control of a Novel Wheel-Legged Robot: Design and Experiments, whose authors “first derive a dynamic model of the robot and then apply linear output regulation along with the model-based linear quadratic regulator to [maintain] the standing of the robot on the ground without moving backward and forward mightily.”
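For readers unfamiliar with the linear quadratic regulator (LQR), the sketch below shows the textbook idea on a much simpler system: a linearised inverted pendulum, discretised with a small time step, whose feedback gain comes from iterating the discrete-time Riccati equation. It is not Ollie’s controller: the pendulum model, the parameters (`g`, `length`, `dt`, `Q`, `R`) and all helper names are illustrative assumptions, and the paper’s linear output regulation is not shown.

```python
from typing import List

Matrix = List[List[float]]


def matmul(X: Matrix, Y: Matrix) -> Matrix:
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(X: Matrix) -> Matrix:
    return [list(row) for row in zip(*X)]


def add(X: Matrix, Y: Matrix) -> Matrix:
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X: Matrix, Y: Matrix) -> Matrix:
    return [[a - b for a, b in zip(rx, ry)] for rx, ry in zip(X, Y)]


def scale(X: Matrix, s: float) -> Matrix:
    return [[s * a for a in row] for row in X]


def pendulum_model(g: float = 9.81, length: float = 0.5, dt: float = 0.01):
    """Hypothetical plant: theta'' = (g / length) * theta + u, Euler-discretised.
    State x = [theta, theta_dot]^T; input u is an angular-acceleration command."""
    A = [[1.0, dt], [(g / length) * dt, 1.0]]
    B = [[0.0], [dt]]
    return A, B


def dlqr_gain(A: Matrix, B: Matrix, Q: Matrix, R: float, iters: int = 2000) -> Matrix:
    """Single-input discrete LQR: iterate the Riccati recursion a fixed number of
    times (assumed enough to converge) and return K for the law u = -K x."""
    P = [row[:] for row in Q]
    At, Bt = transpose(A), transpose(B)
    K = [[0.0] * len(A)]
    for _ in range(iters):
        BtP = matmul(Bt, P)
        K = scale(matmul(BtP, A), 1.0 / (R + matmul(BtP, B)[0][0]))
        AtP = matmul(At, P)
        # P <- Q + A'PA - A'PB (R + B'PB)^-1 B'PA
        P = sub(add(Q, matmul(AtP, A)), matmul(matmul(AtP, B), K))
    return K


def simulate(A: Matrix, B: Matrix, K: Matrix, x0: Matrix, steps: int) -> Matrix:
    """Roll the linear model forward under state feedback u = -K x."""
    x = [row[:] for row in x0]
    for _ in range(steps):
        u = scale(matmul(K, x), -1.0)
        x = add(matmul(A, x), matmul(B, u))
    return x


A, B = pendulum_model()
Q = [[10.0, 0.0], [0.0, 1.0]]  # assumed weights: penalise tilt more than tilt rate
K = dlqr_gain(A, B, Q, R=0.1)
x0 = [[0.1], [0.0]]  # start tilted by 0.1 rad
print("gain K:", K)
print("tilt after 5 s with LQR:", simulate(A, B, K, x0, steps=500)[0][0])
print("tilt after 1 s without control:", simulate(A, B, [[0.0, 0.0]], x0, steps=100)[0][0])
```

Without feedback the tilt grows quickly, while the LQR gain drives it back toward upright; the weights `Q` and `R` trade off how aggressively the controller corrects tilt against how much control effort it spends.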

Ollie is the latest of Tencent Robotics X Lab’s robotic innovations. Last November, the lab introduced a four-legged mobile robot named Jamoca, which can walk on Chinese quincuncial (plum-blossom) piles. This March, Jamoca was joined by its cousin Max, which closely resembles Boston Dynamics’ Spot. Clearly, Tencent’s robots love backflips.

According to the company, Tencent Robotics X Lab was established in 2018 and is dedicated to the research and application of cutting-edge robotics technologies, as well as to exploring the connection between the virtual and physical worlds.

Not all images are worth 16×16 words

Last year, Google researchers proposed the Vision Transformer (ViT), which has achieved remarkable success in computer vision and challenged the dominance of convolutional networks. ViT represents every image with a fixed grid of patch tokens; the paper’s title, An Image is Worth 16x16 Words, refers to its 16x16-pixel patches. Recently, a team of researchers from Tsinghua University and Huawei argued that a single fixed token count may not suit every image.

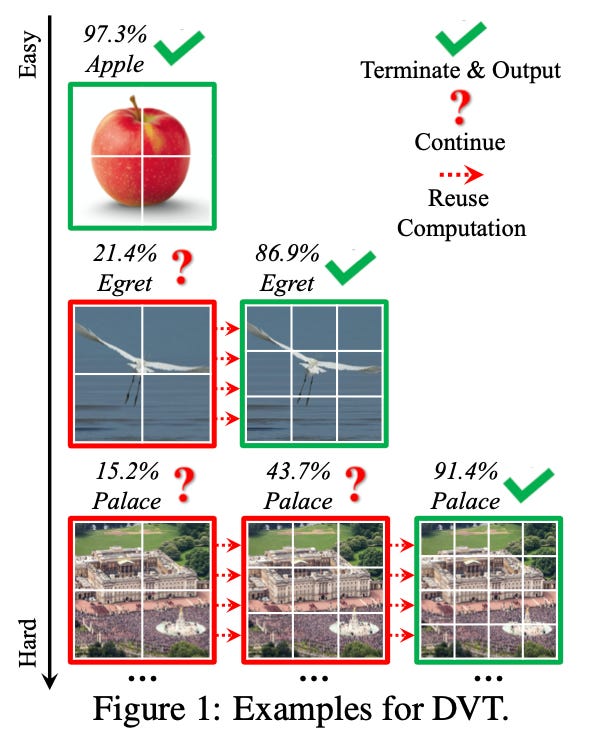

In the paper Not All Images are Worth 16x16 Words: Dynamic Vision Transformers with Adaptive Sequence Length, the researchers observed that many “easy” images, such as an apple or a bird, can be classified accurately with a mere 4x4 grid of tokens, so representing them with more tokens wastes computation. They therefore proposed the Dynamic Vision Transformer (DVT), which automatically configures a proper number of tokens for each input image by cascading Transformers with increasing numbers of tokens and stopping as soon as one produces a sufficiently confident prediction. Experiments on ImageNet, CIFAR-10, and CIFAR-100 show that DVT outperforms other state-of-the-art vision Transformers in computational efficiency, in terms of both theoretical cost and practical inference speed.
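The adaptive-inference idea can be sketched as an early-exit loop in Python. This is an illustration under stated assumptions, not the authors’ code: the stub classifiers, the `threshold` value, and the function names are hypothetical, and DVT’s feature-reuse and relationship-reuse mechanisms are omitted. The `num_tokens` helper also shows the arithmetic behind token counts: a 224x224 image cut into 16x16-pixel patches yields a 14x14 grid, i.e. 196 tokens, versus 16 tokens for a 4x4 grid.

```python
from typing import Callable, List, Sequence, Tuple

Classifier = Callable[[object], List[float]]  # returns class probabilities


def num_tokens(image_size: int, patch_size: int) -> int:
    """Tokens produced by cutting a square image into square patches."""
    side = image_size // patch_size
    return side * side


def cascade_predict(image: object, stages: Sequence[Tuple[int, Classifier]],
                    threshold: float = 0.9) -> Tuple[int, int, float]:
    """Run classifiers from coarsest to finest token grid and stop at the first
    confident one. Returns (label, grid side used, confidence)."""
    if not stages:
        raise ValueError("need at least one stage")
    for i, (grid, classify) in enumerate(stages):
        probs = classify(image)
        confidence = max(probs)
        label = probs.index(confidence)
        # Exit early if confident; the last stage always answers.
        if confidence >= threshold or i == len(stages) - 1:
            return label, grid, confidence
    raise AssertionError("unreachable")


# Hypothetical stand-ins for Transformers working on 4x4 and 14x14 token grids.
def coarse_model(image: object) -> List[float]:
    return [0.95, 0.05] if image == "apple" else [0.55, 0.45]


def fine_model(image: object) -> List[float]:
    return [0.1, 0.9]


stages = [(4, coarse_model), (14, fine_model)]
print(num_tokens(224, 16))                     # 196 tokens (14x14 grid)
print(cascade_predict("apple", stages))        # exits at the 4x4 stage
print(cascade_predict("busy street", stages))  # falls through to the 14x14 stage
```

Because self-attention cost grows roughly quadratically with the number of tokens, exiting at the 4x4 stage for easy images saves most of the computation; only images that remain unconfident pay for the finer stages.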

Investment News

Didi Autonomous Driving, the self-driving subsidiary of Chinese ride-hailing giant Didi Chuxing, will reportedly close a new $300 million financing round, $200 million of which will come from Guangzhou Automobile Group (GAC). The company has raised more than $1.1 billion in total, and its valuation will exceed that of self-driving startup Pony.ai.

Zongmu Technology, a Chinese autonomous driving startup, has raised $190 million in its Series D round. The startup has a dazzling list of investors, including Hubei Xiaomi Changjiang Industrial Fund, Fosun Capital Group, Qualcomm Ventures, and Denso Corp. Founded in 2013, Zongmu provides low-cost advanced driver-assistance systems (ADAS) and autonomous valet parking solutions for OEMs. This March, Xiaomi announced plans to launch an electric car business with a $10 billion investment.

Horizon Robotics, a Chinese AI chip unicorn, is mulling a U.S. initial public offering to raise up to $1 billion, Bloomberg reports. Founded in 2015 by Kai Yu, former head of Baidu’s Institute of Deep Learning, Horizon Robotics develops chips to power next-generation smart vehicles. The company is seen as a potential Chinese counterpart to NVIDIA or Mobileye.