✍🏻China, US, UK Sign Historic Declaration, Alibaba's LLM Leap, AI Alignment Insights, and Kai-Fu Lee's Unicorn

Weekly China AI News from October 30 to November 5

Hello readers, if you’re reading this instead of tuning in to OpenAI’s first Developer Day (10 a.m. PST), then wow, we might just be soulmates! 😍 In this week’s issue, I discuss:

China joined forces with global powers at the UK-hosted AI Safety Summit and signed the Bletchley Declaration.

Alibaba released its next-gen LLM, whilst doubling down on a robust, AI-friendly cloud ecosystem.

A US-China research collaboration unveils a comprehensive survey on AI alignment - but why does it matter?

Kai-Fu Lee’s fledgling startup skyrockets to unicorn, thanks to its 34B-parameter open-source model.

China, US, UK and EU Sign Declaration to Cooperate on Global AI Regulation

What’s New: China, the U.K., the U.S., and the EU, along with other nations, signed the Bletchley Declaration on November 1 at the first AI Safety Summit, organized by the U.K. government. The signatories acknowledged the catastrophic risks posed by AI and agreed to collaborate on AI safety research.

How It Works: The declaration commits signatories to work through existing international bodies and initiatives to ensure AI’s advancement aligns with human rights, transparency, and ethical standards.

It targets “frontier” AI, meaning highly capable general-purpose models that could match or exceed today’s most advanced models, and underlines the urgency of understanding and mitigating the associated risks, particularly in sensitive areas like cybersecurity and biotechnology. The approach is multifaceted: it spans policy-making, scientific research, and the development of safety evaluation systems, all aimed at a transparent and accountable evolution of AI.

China’s Participation: In my September report, I mentioned Britain’s invitation to China for the AI Safety Summit. It was only in the final days before the event that the identity of the Chinese representative was revealed. Wu Zhaohui, China’s vice minister of science and technology, led a delegation to attend the AI safety summit last week.

Matt Sheehan, a fellow at the Carnegie Endowment for International Peace, posted a great explanation of Chinese regulatory entities on X.

For specific focus of UK summit - safety risks from frontier AI models - CAC & MOST differ. CAC has not talked about this or signaled it's a priority. MOST put ensure "human control" over AI in its ethics principles. Same language in AI Gov Intiative, likely at behest of MOST.

In his speech at the opening plenary session of the summit on Wednesday, Wu said, “We uphold the principles of mutual respect, equality and mutual benefits. Countries regardless of their size and scale have equal rights to develop and use AI.” TechCrunch reported that the remarks seem to address the recent supply chain challenges facing Chinese AI firms.

Wu also expressed China’s willingness to “enhance our dialogue and communication in AI safety with all sides.”

Why It Matters: This unprecedented international agreement represents a global consensus on the need for regulations and safeguards in the AI industry. At the same time, China, the U.S., and the U.K. each aspire to establish themselves as global frontrunners in AI. China has made significant strides in regulation, having introduced its generative AI regulations in July, followed by its Global AI Governance Initiative in October. The Biden administration also issued an executive order on AI just before last week’s AI Safety Summit, aimed at mitigating AI-related risks and stimulating AI innovation.

Alibaba Bets on Cloud Dominance to Win China’s LLM Race

What’s New: Alibaba is aggressively positioning itself at the forefront of China’s AI race by upgrading its cloud infrastructure and releasing its next-gen LLM, Tongyi Qianwen 2.0, at its Apsara Conference last Tuesday.

According to Joe Tsai, Alibaba’s former CFO and new chairman, Alibaba Cloud aims to be the most open cloud in the AI era. As evidence, 80% of China’s tech companies and half of the country’s LLM companies run on Alibaba Cloud.

How It Works: Alibaba Cloud CTO Zhou Jingren announced an upgrade to Platform for AI, Alibaba Cloud’s ML platform, which hosts hundreds of thousands of chips to support the training of ultra-large models. Its new foundation model platform, Bailian, allows developers to fine-tune a specialized model within a few hours, Zhou claimed.

On the model side, Tongyi Qianwen 2.0, which has over 100 billion parameters, shows marked improvements in understanding complex instructions and in reducing hallucination rates, according to Alibaba. The company also released a new suite of enterprise-level AI models designed for specific applications, from transcription to coding, finance, and medicine.

Why It Matters: Alibaba’s latest developments aim to further entrench its position as a cloud leader, not an LLM developer. The company is fostering an open cloud ecosystem and prioritizing generative AI’s accessibility.

AI Alignment Survey by Top Universities Sets Agenda for Future of Safe AI

What's New: Researchers from elite institutions, including Peking University, the University of Cambridge, Carnegie Mellon University, the Hong Kong University of Science and Technology, and the University of Southern California, have released a comprehensive survey paper on AI alignment. The study covers the core concepts, methodologies, and practices needed to align AI systems with human values and intentions, a concern that has garnered worldwide attention.

How It Works: The survey introduces a novel framework based on four fundamental principles, known as the RICE principles: Robustness, Interpretability, Controllability, and Ethicality.

This framework divides the alignment process into two main components: forward alignment and backward alignment. Forward alignment involves training AI systems to be aligned with the RICE principles from the outset. It covers learning from feedback and learning under distribution shift, so that AI systems can adapt to changing environments and stay aligned even when faced with unforeseen scenarios.

Backward alignment focuses on the governance and verification of AI systems’ alignment throughout their lifecycle. It encompasses assurance methods such as safety evaluation, interpretability assessments, and checks for compliance with human values. This aspect of the framework also examines current and prospective governance practices by various stakeholders to manage AI risks effectively.

Why It Matters: The paper outlines a clear and actionable path toward responsible AI development. It also calls for a joint effort among governments, industry, and academia to adopt these principles and practices, ensuring that the AI of tomorrow is developed with the best interests of humanity at the forefront.

Weekly News Roundup

🚀 01.AI, an eight-month-old startup founded by renowned computer scientist Kai-Fu Lee, has raised funding from Alibaba Cloud and others at a valuation of over $1 billion. 01.AI’s Yi-34B model, available in both Chinese and English, has outperformed Meta’s Llama 2 on a few benchmarks.

💡 On November 1, Baidu introduced a paid plan ($8 per month) for its popular chatbot ERNIE Bot. With ERNIE Bot Pro, subscribers can get access to ERNIE 4.0, along with enhanced image generation, advanced web plugins, and more features. ERNIE Bot 3.5 remains free to the general public.

📘 On November 4, NetEase Youdao’s “Zi Yue” education LLM passed its regulatory filing and opened to the public. Introduced in July this year, Zi Yue has launched six applications, such as a virtual-human spoken-language coach and AI essay tutoring. Other LLMs that have passed filings include Kunlun Tech’s Tiangong model and Luca from Mianbi Smart.

🔧 The Ministry of Industry and Information Technology recently issued a notice on the “Guidance for Innovative Development of Humanoid Robots,” proposing that by 2025 an innovation system for humanoid robots should be preliminarily established, with breakthroughs in key technologies such as the “brain, cerebellum, and limbs.”

📱 On November 1, Chinese smartphone maker Vivo released its AI model matrix called “Blue Heart,” along with its new-generation operating system, OriginOS 4. The Blue Heart matrix includes five models at different parameter scales: 175 billion, 130 billion, 70 billion, 7 billion, and 1 billion. Additionally, Vivo announced that it is open-sourcing its 7-billion-parameter Blue Heart model.

Trending Research

GPT-Fathom is an open-source evaluation suite that compares over 10 leading LLMs across consistent settings. It details the progression from GPT-3 to GPT-4, highlighting improvements in reasoning and the effects of specialized training methods like SFT and RLHF, as well as quantifying the “alignment tax” related to model accuracy.

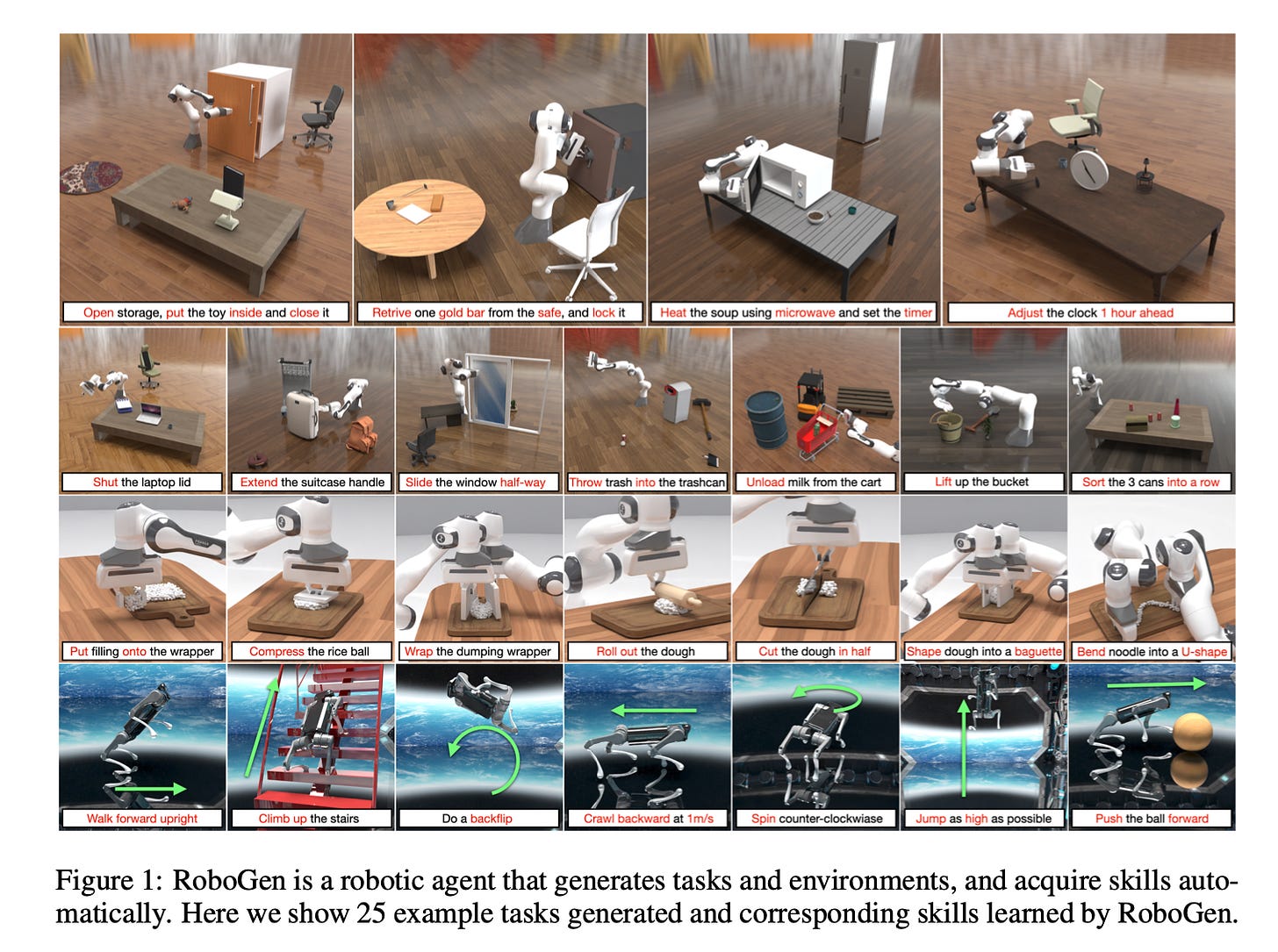

RoboGen introduces a novel generative robotic agent capable of learning a wide array of robotic skills through generative simulation with minimal human input. It utilizes foundation models to create varied tasks and training environments, facilitating a cycle of proposing, generating, and learning tasks. This process allows the agent to learn optimal policies for a range of skills and leverages large-scale model knowledge for robotic applications.

Large multimodal models trained with synthetic captions face Scalability Deficiency and World Knowledge Loss. CapsFusion, a new framework, improves data quality and scalability by merging web and synthetic captions. CapsFusion enhances model performance and efficiency significantly, and offers a promising avenue for future multimodal model training.