👀Baidu's Tech Improves AI Image Accuracy, China’s AI Glasses Expected in 2025, and Alibaba's Coder Beats GPT-4o

Weekly China AI News from November 11, 2024 to November 17, 2024

Hi, this is Tony! Welcome to this week’s issue of Recode China AI, a newsletter for China’s trending AI news and papers.

Three things to know

Baidu unveils iRAG to tackle AI image hallucinations.

Meta’s Ray-Ban smart glasses have spurred Chinese tech companies to explore domestic alternatives.

Alibaba’s latest open-source Qwen2.5-Coder beats GPT-4o in coding.

Before we start, take a look at this quadruped robot from Deep Robotics, a Hangzhou-based startup. The all-terrain robot, called Lynx, can speed downhill and perform backflips.

Baidu Tackles AI’s Visual Hallucination Problem with iRAG

What’s New: At its annual tech event Baidu World, Baidu unveiled iRAG (Image-Based Retrieval-Augmented Generation), a technology designed to tackle one of generative AI’s most persistent issues: image hallucinations, where AI generates inaccurate or imaginary visual elements. iRAG-assisted image generation is now live on ERNIE Bot and its mobile app.

Deep Dive: RAG (Retrieval-Augmented Generation) is an AI framework that improves the accuracy of LLM-generated answers by grounding them in external knowledge sources. In text-based RAG, the system first searches a knowledge base for relevant information, using a query derived from the user's request. The retrieved information is then added to the LLM's input, enabling it to generate more accurate and contextually relevant text.
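To make the retrieve-then-augment loop concrete, here is a minimal Python sketch. It assumes a toy in-memory knowledge base and plain bag-of-words cosine similarity in place of the dense embeddings and vector databases that production RAG systems typically use. The documents and the `retrieve` and `build_prompt` helpers are illustrative, and the final LLM call is omitted.

```python
import math
import re
from collections import Counter

# Toy knowledge base (illustrative sentences, not real RAG data).
DOCS = [
    "Baidu World is Baidu's annual technology conference.",
    "ERNIE Bot is a chatbot built on Baidu's ERNIE large language model.",
    "Qwen2.5-Coder is an open-source code model family from Alibaba.",
]


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list, k: int = 1) -> list:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(question: str, passages: list) -> str:
    """Augmentation step: prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using the context below.\nContext:\n{context}\nQuestion: {question}"


question = "What is ERNIE Bot built on?"
prompt = build_prompt(question, retrieve(question, DOCS))
print(prompt)  # this augmented prompt would then be sent to an LLM (not shown)
```

Swapping the bag-of-words scoring for an embedding model would change only `retrieve`; the augmentation step stays the same.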

While RAG has made strides in reducing hallucinations in LLM-generated text, visual hallucinations in AI-generated images have persisted, from six-fingered hands to incorrect car logos to missing elements. iRAG is Baidu's attempt to address this problem.

iRAG builds on Baidu’s image search database, linking it with text-to-image models for more accurate and contextually realistic visuals. Here’s how it works:

The system first analyzes the user's needs to decide which image elements to enhance.

It then searches its database and selects reference images for augmentation.

Baidu’s proprietary text-to-image model generates images, allowing for both creative interpretations (e.g., turning a Newton portrait into a storybook style) and exact recreations (e.g., replicating a car in detail).

Users can also upload reference images for customized results.
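Baidu has not published iRAG's internals, so the following is only a hypothetical Python sketch of the steps above. The `IMAGE_INDEX` dictionary stands in for Baidu's image search database and its entries are made up, the keyword heuristic for choosing between a creative and an exact rendering is an assumption, and the text-to-image call itself is not shown.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical reference-image index (entity -> image IDs). It stands in for
# Baidu's image search database, whose real interface is not public.
IMAGE_INDEX = {
    "isaac newton": ["img_newton_portrait_01"],
    "tesla model 3": ["img_model3_front", "img_model3_side"],
}

# Assumed heuristic: style words signal a creative reinterpretation.
STYLE_WORDS = ("storybook", "cartoon", "watercolor", "anime")


@dataclass
class GenerationRequest:
    """What would be handed to a text-to-image model (the model call is omitted)."""
    prompt: str
    mode: str  # "creative" or "exact"
    references: List[str] = field(default_factory=list)


def find_entities(prompt: str) -> List[str]:
    """Step 1: decide which elements need grounding (here: names found in the index)."""
    text = prompt.lower()
    return [name for name in IMAGE_INDEX if name in text]


def plan_generation(prompt: str, user_refs: Optional[List[str]] = None) -> GenerationRequest:
    references: List[str] = []
    for entity in find_entities(prompt):
        # Step 2: select one reference image for each grounded entity.
        references.append(IMAGE_INDEX[entity][0])
    # Step 3 (setup only): choose creative vs. exact rendering for the generator.
    mode = "creative" if any(w in prompt.lower() for w in STYLE_WORDS) else "exact"
    # Step 4: append any reference images the user uploaded.
    references.extend(user_refs or [])
    return GenerationRequest(prompt=prompt, mode=mode, references=references)


print(plan_generation("Isaac Newton in a storybook style"))
print(plan_generation("A red Tesla Model 3 parked on a street", user_refs=["upload_01"]))
```

A prompt that mentions nothing in the index simply passes through with no references, which mirrors the idea that only the elements needing enhancement are grounded.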

Below are a few sample generations. While still a work in progress, iRAG has already improved accuracy in AI-generated images.

Baidu isn’t alone in exploring RAG’s potential for multimodality. Earlier this year, a team from the University of Missouri and NEC Laboratories America introduced a different system, also named iRAG, aimed at improving video understanding through efficient interactive querying.

Why It Matters: Hallucinations remain a major obstacle to integrating AI into practical, everyday use. Baidu’s approach breaks new ground by extending retrieval beyond text. Baidu expects iRAG to improve user experience and make AI more trustworthy by delivering instant, high-quality visuals. However, hyper-realistic images could also increase the risk of misinformation and raise copyright concerns, issues that also affect other AI technologies such as video generation.

One More Thing: Baidu also highlighted the impressive growth of its ERNIE models, now managing 1.5 billion daily API calls — a 30-fold increase over the past year. The Chinese tech giant also introduced Miaoda, a no-code app builder, and ERNIE-powered AI Glasses (details below).

Chinese Firms Eye Domestic AI Glasses as Meta’s Ray-Ban Gains Popularity

What’s New: Meta’s Ray-Ban smart glasses are rapidly gaining popularity, with users enjoying features like photo capture, music streaming, and on-demand AI interactions. This success has spurred Chinese tech companies to explore domestic alternatives.

At Baidu World, Baidu’s AI hardware company, Xiaodu Technology, introduced the Xiaodu AI Glasses. Weighing just 45 grams, these lightweight glasses are designed to blend seamlessly into daily life while offering advanced functionality. The glasses are equipped with a 16-megapixel ultra-wide camera with AI stabilization, a four-microphone array for clear sound capture, and open-ear anti-leakage speakers.

Built on Baidu’s ERNIE LLM and powered by the DuerOS operating system, the Xiaodu AI Glasses can provide real-time “walk-and-ask” capabilities, allowing hands-free interaction with the environment. According to Xiaodu, these glasses act as personal assistants, offering first-person photography, real-time Q&A, calorie recognition, object identification, encyclopedia lookup, audio-visual translation, and integration with Baidu services like Maps and Baike.

The Xiaodu AI Glasses offer up to 5 hours of active use and 56 hours of standby time, and they charge fully in just 30 minutes. Set to launch in early 2025, they are expected to be an affordable option on the market.

Xiaomi’s Entry: Chinese smartphone and EV maker Xiaomi is also reportedly developing AI-powered smart glasses, aiming for a Q2 2025 release. The glasses are expected to offer camera and audio capabilities similar to other smart glasses. Xiaomi is partnering with Apple supplier Goertek to develop the product and aims to sell over 300,000 units after launch.

Chinese media also report that OPPO, Vivo, Huawei, Tencent, and ByteDance are exploring their own AI glasses projects.

Why It Matters: If these reports hold, 2025 could be the year of an AI glasses war among China’s tech giants and hardware companies. Meanwhile, smaller startups, anticipating fierce competition, are following Meta’s playbook with Ray-Ban by teaming up with established eyewear brands. For example, Chinese AR firms Rokid and Xreal are said to be planning collaborations with Bolon and Gentle Monster, while the new AI glasses brand Shanji has joined forces with Hong Kong’s fast-fashion eyewear brand LOHO.

With so many players entering the space, the Chinese AI glasses market is shaping up to be one of the most dynamic fronts for wearable tech innovation.

Alibaba Claims New Coding Model is Better than GPT-4o

What’s New: Alibaba’s Qwen research team just open-sourced the latest Qwen2.5-Coder models for code generation, code reasoning, and code repair. The release spans six model sizes, including the high-performing 32B model, which Alibaba says achieves state-of-the-art (SOTA) results on various code generation benchmarks, outperforming GPT-4o.

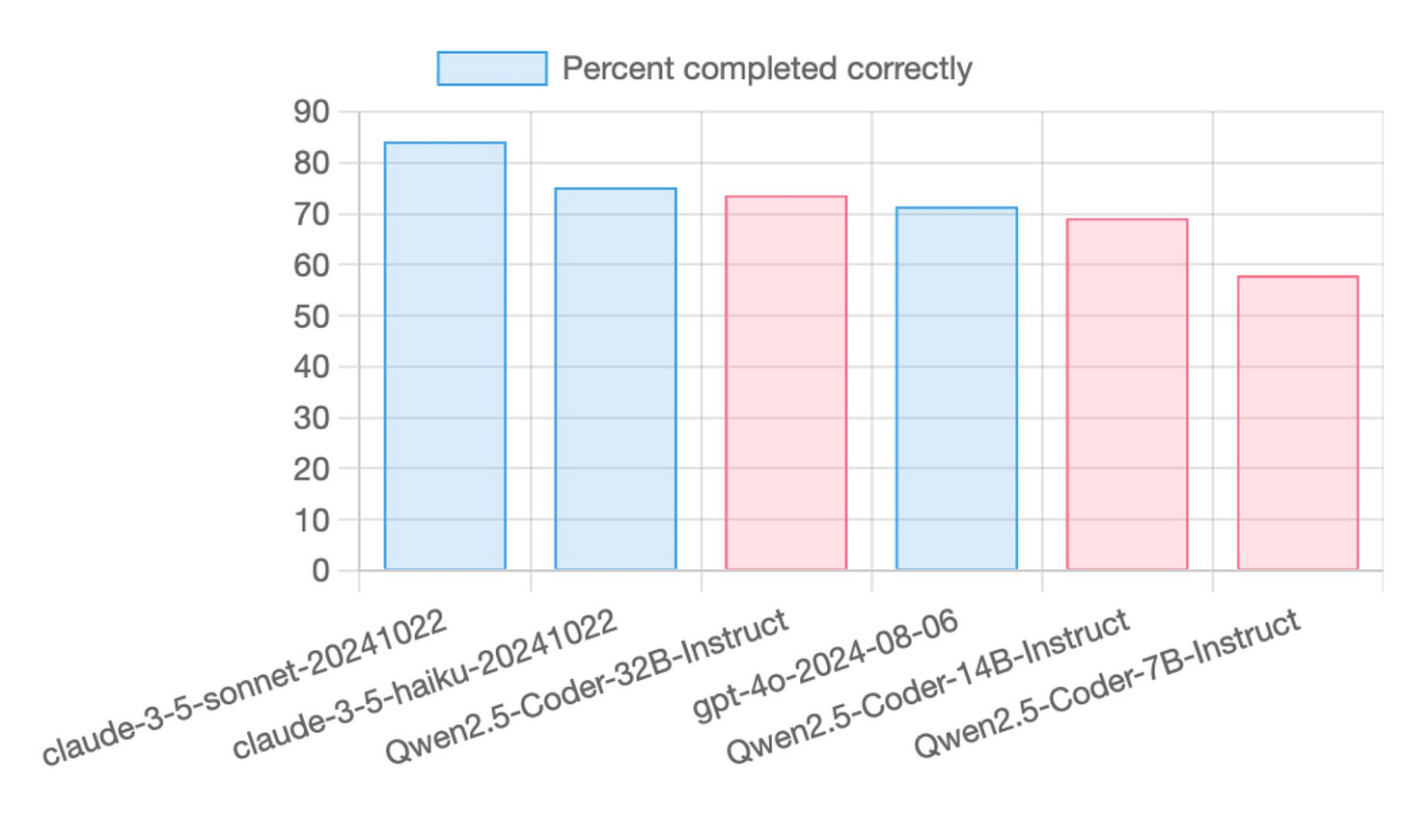

Other benchmarks, such as Paul Gauthier’s well-regarded Aider benchmarks, placed the 32B model between GPT-4o and Claude 3.5 Haiku.

How It Works: In addition to handling over 40 programming languages, Qwen2.5-Coder is reportedly adept at multi-language code repair, scoring top marks in tasks requiring cross-language support.

For developers who prefer a tailored experience, Qwen2.5-Coder offers two variants at each size: a Base model for custom fine-tuning and an Instruct model aligned for interactive coding assistance. According to the Qwen team, these models perform well in environments like the Cursor code editor.

The models can also create Artifacts, generating complex visual applications such as simulations, websites, and data charts.

How Good is Qwen2.5-Coder? I asked Qwen2.5-Coder on Hugging Face to create a portfolio website, and it did a pretty decent job (screenshots below). However, whenever I clicked “View More” on the “Project 1” section, there was no button to close the page and return to the home section.

I also gave the same prompt to GPT-4o and Claude 3.5 Sonnet for comparison. GPT-4o generated a website similar to Qwen’s in just over 80 lines of code, whereas Claude wrote over 500 lines. The Claude-generated site let me open projects, read more on the Blog, and even send a message through the Contact Me section.

While my test was quite preliminary, the results were consistent with benchmark findings.

Why It Matters: Qwen2.5-Coder’s open-source release is a further step toward democratizing access to powerful coding models, particularly in a field often dominated by closed models.

Weekly News Roundup

Beijing-based GPU and AI accelerator startup Moore Threads Technology has increased its capital base to 330 million yuan and converted into a joint-stock company, a sign that it is preparing for an initial public offering. (SCMP)

Shengshu Technology, a Chinese AI startup backed by Baidu Ventures and Ant Group, has expanded its AI tool Vidu, which can now generate videos from a combination of text and multiple images. (CNBC)

Yang Zhilin, CEO of Chinese AI startup Moonshot AI, and co-founder/CTO Zhang Yutao are facing arbitration in Hong Kong, initiated by former investors in their previous company, Recurrent AI. The arbitration request, filed with the Hong Kong International Arbitration Centre, stems from allegations that Yang and Zhang founded Moonshot AI and began fundraising without securing required waiver agreements from five of Recurrent AI’s investors. The conflict emerged after 2023, with investors reportedly unhappy with their share allocation in the new venture. Moonshot AI has grown rapidly, and its valuation recently reached $3 billion after significant investment from Alibaba, but the unresolved dispute and the lack of unanimous investor support have complicated its position.

Trending Research

SeedEdit: Align Image Re-Generation to Image Editing

Researchers from ByteDance’s Seed team introduced SeedEdit, a diffusion model for image editing that balances image reconstruction against re-generation based on text prompts. Unlike previous approaches, SeedEdit uses an iterative alignment process to turn a text-to-image (T2I) generator into a capable image editor. The method relies on creating diverse paired datasets and aligning a causal diffusion model for precise editing. Experiments show SeedEdit outperforms other models in prompt alignment and image consistency, making it superior for both text-guided and conditional image editing tasks.

Large Language Models Can Self-Improve in Long-context Reasoning

SEALONG is a self-improvement approach for strengthening long-context reasoning in LLMs. It samples multiple outputs for each question, scores them using Minimum Bayes Risk (MBR), and then fine-tunes the model on the most consistent outputs. The study shows that SEALONG improves performance across various LLMs without relying on human annotations or data from expert models, achieving substantial accuracy gains on long-context tasks.
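The MBR selection step can be sketched in a few lines of Python. This is an illustrative approximation, not SEALONG's code: the sampled answers are hand-written instead of drawn from an LLM, and a simple Jaccard token overlap stands in for whatever similarity measure the authors actually use. Each output's score is its average similarity to the other samples, so the answer that agrees most with the rest is kept as the fine-tuning target.

```python
import re
from typing import List, Set, Tuple


def tokens(text: str) -> Set[str]:
    """Lowercased alphanumeric word set of a response."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a stand-in for an embedding-based similarity."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 1.0


def mbr_scores(outputs: List[str]) -> List[float]:
    """Score each output by its average similarity to every other sampled output."""
    n = len(outputs)
    if n < 2:
        raise ValueError("MBR scoring needs at least two sampled outputs")
    return [
        sum(jaccard(outputs[i], outputs[j]) for j in range(n) if j != i) / (n - 1)
        for i in range(n)
    ]


def select_target(outputs: List[str]) -> Tuple[str, List[float]]:
    """Pick the most consistent output (highest MBR score) as the fine-tuning target."""
    scores = mbr_scores(outputs)
    best = max(range(len(outputs)), key=lambda i: scores[i])
    return outputs[best], scores


# Hand-written stand-ins for answers sampled from an LLM for one long-context question.
samples = [
    "The contract was signed in 2019 in Berlin.",
    "The contract was signed in 2019.",
    "It was signed in Berlin in 2019.",
    "The deal happened in 2021 in Paris.",
]
target, scores = select_target(samples)
print(target)
print([round(s, 3) for s in scores])
```

Here the three answers that agree on 2019 outrank the outlier, and the first answer, which overlaps most with the others, is selected.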