Baidu Unveils New Robotaxi With No Steering Wheel; SMIC Reportedly Manufactures 7nm SoC; Shenzhen Deploys Nose Swab Covid Test Robots

Weekly China AI News from July 18 to July 24

News of the Week

Baidu Jumps Ahead of Tesla by Unveiling New Steering Wheel-Free Robotaxi

What’s new: Chinese search engine and AI giant Baidu last week unveiled its sixth-generation robotaxi, the Apollo RT6. The all-electric, purpose-built, SUV-size autonomous vehicle features a detachable steering wheel that can be removed once Chinese regulations permit. RT6 is expected to begin trial robotaxi operations in 2023 on Apollo Go, Baidu’s autonomous ride-hailing service, which is now available to the public in 10 Chinese cities.

Staggeringly low production cost: One striking advantage of RT6 is its unprecedentedly low production cost. At RMB 250,000 (~$37,000) per unit, RT6 will be manufactured at scale, with volumes reaching into the tens of thousands. Robin Li, Baidu co-founder and CEO, said that taking a robotaxi in the future will cost half as much as taking a taxi today.

Unlike other robotaxis that are retrofitted onto conventional vehicles, RT6 is built from the ground up on Baidu’s self-developed automotive E/E architecture. The robotaxi features 1,200 TOPS of computing power and 38 sensors, including solid-state LIDARs integrated seamlessly into the roof, a design distinct from the spinning rooftop LIDARs commonly seen on other robotaxis. Inside, RT6 features independent rear seats, front seats that can be reconfigured once the steering wheel is removed, and an intelligent voice interaction system.

Baidu’s AV vision: The Chinese tech giant, which specializes in search and AI, is expanding its footprint in the automotive industry with the unveiling of RT6 and ROBO-01, an electric vehicle for private users introduced in June under the JIDU brand. ROBO-01 is slated for delivery by 2023. Baidu also acts as a Tier 1 supplier, selling its autonomous driving software and HD maps to automakers like BYD and Dongfeng Motors. The company’s intelligent transportation solution, which encompasses smart traffic lights and highways, is estimated to increase traffic efficiency by 15 to 30 percent.

Other AI innovations: RT6 made its debut at Baidu World, Baidu’s annual flagship tech conference. The company also showcased how its generative AI model repaired “Dwelling in the Fuchun Mountains,” a legendary landscape painting that was burnt into two pieces.

China’s SMIC Reportedly Produces Low-Volume 7nm Chips

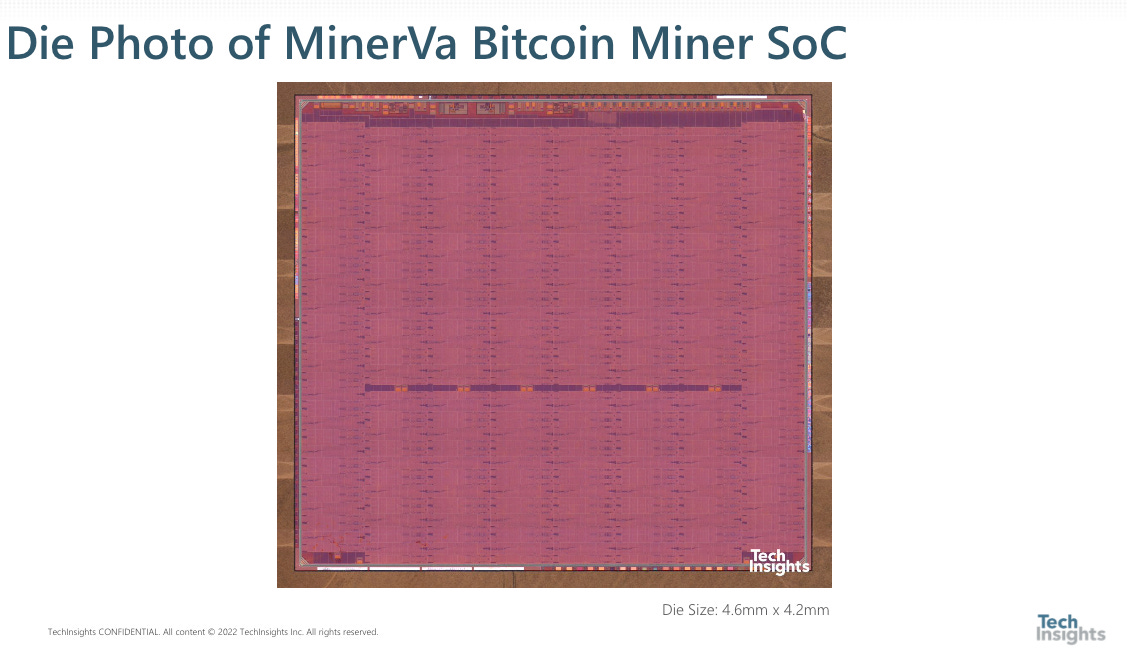

What’s new: China’s biggest chip manufacturer, SMIC, is reportedly able to produce a 7nm SoC for bitcoin mining, according to tech analyst firm TechInsights. This would be SMIC’s most advanced product, two generations ahead of its established 14nm technology. TechInsights published a picture of the MinerVa Bitcoin Miner SoC shipped by SMIC.

7nm without EUV: The report suggests the US-sanctioned SMIC is likely manufacturing 7nm chips without extreme ultraviolet (EUV) lithography, a critical technology for advanced semiconductor fabrication. In December 2020, SMIC was added to the U.S. Entity List, which bans the export of U.S. technology to blacklisted entities unless the exporter receives a government license. Under the restrictions, Dutch firm ASML, the only producer of EUV lithography equipment, cannot sell that equipment to SMIC.

Can SMIC produce 7nm chips at scale? According to the report, SMIC will continue to evolve its 7nm node without EUV, at the cost of increasing complexity and the resulting yield issues. A Tom’s Hardware story further inferred that SMIC is still in the early stages of 7nm development, as the MinerVa Bitcoin mining chip appears to be a basic design. Without access to advanced technology such as memory cells, EUV, and 5G communications, SMIC is unlikely to produce 7nm CPUs, GPUs or AI accelerators at this stage.

Copy from TSMC? The TechInsights report also claimed that SMIC’s 7nm technology is a close copy of TSMC’s 7nm process technology.

Shenzhen Deploys Robots to Test Covid Using Nose Swab

What’s new: A robot about half the height of an adult that moves autonomously and collects nasal samples was put into clinical use last month to automate Covid testing at a hospital in Shenzhen. Named Pengcheng Qinggeng (鹏程青耕) 2.0, the robot collected 634 nucleic acid samples in pre-clinical tests, with satisfaction ratings higher than those for manual sampling.

More details: The Covid-testing robot features a Gundam-like head, an interactive tablet, and two robotic arms, one clamping a nasal swab and the other holding a transparent sampling tube. These are mounted on top of a portable automated guided vehicle that stores sampling tubes, swabs, and sterilization equipment.

The Covid test process begins when the robot moves to a designated place, opens a tube and grabs a nasal swab. A user sitting in front of the robot then scans their health code and identity information through the interactive tablet interface, so the robot can link the tube to their ID and upload the record to the public health cloud. The robot then inserts the swab into the user’s nostril, cuts off the swab, and sterilizes key equipment.

The robot was developed jointly by Shenzhen Luohu Hospital, Shenzhen Moying Technology, and the Shenzhen Artificial Intelligence and Robotics Research Institute (AIRS).

Papers & Projects

Next-ViT: Next Generation Vision Transformer for Efficient Deployment in Realistic Industrial Scenarios

Due to the complex attention mechanisms and model design, most existing vision Transformers (ViTs) cannot perform as efficiently as convolutional neural networks (CNNs) in realistic industrial deployment scenarios, e.g. TensorRT and CoreML. This poses a distinct challenge: Can a visual neural network be designed to infer as fast as CNNs while performing as powerfully as ViTs? Recent works have tried to design CNN-Transformer hybrid architectures to address this issue, yet the overall performance of these works is far from satisfactory. To this end, we propose a next generation vision Transformer for efficient deployment in realistic industrial scenarios, namely Next-ViT, which dominates both CNNs and ViTs from the perspective of latency/accuracy trade-off. In this work, the Next Convolution Block (NCB) and Next Transformer Block (NTB) are respectively developed to capture local and global information with deployment-friendly mechanisms. Then, Next Hybrid Strategy (NHS) is designed to stack NCB and NTB in an efficient hybrid paradigm, which boosts performance in various downstream tasks. Extensive experiments show that Next-ViT significantly outperforms existing CNNs, ViTs and CNN-Transformer hybrid architectures with respect to the latency/accuracy trade-off across various vision tasks. On TensorRT, Next-ViT surpasses ResNet by 5.4 mAP (from 40.4 to 45.8) on COCO detection and 8.2% mIoU (from 38.8% to 47.0%) on ADE20K segmentation under similar latency. Meanwhile, it achieves comparable performance with CSWin, while the inference speed is accelerated by 3.6x. On CoreML, Next-ViT surpasses EfficientFormer by 4.6 mAP (from 42.6 to 47.2) on COCO detection and 3.5% mIoU (from 45.2% to 48.7%) on ADE20K segmentation under similar latency. Code will be released soon.

Towards Grand Unification of Object Tracking

We present a unified method, termed Unicorn, that can simultaneously solve four tracking problems (SOT, MOT, VOS, MOTS) with a single network using the same model parameters. Due to the fragmented definitions of the object tracking problem itself, most existing trackers are developed to address a single task or a subset of tasks and overspecialize on the characteristics of specific tasks. By contrast, Unicorn provides a unified solution, adopting the same input, backbone, embedding, and head across all tracking tasks. For the first time, we accomplish the great unification of the tracking network architecture and learning paradigm. Unicorn performs on par with or better than its task-specific counterparts in 8 tracking datasets, including LaSOT, TrackingNet, MOT17, BDD100K, DAVIS16-17, MOTS20, and BDD100K MOTS. We believe that Unicorn will serve as a solid step towards the general vision model. Code is publicly available.

Greedy when Sure and Conservative when Uncertain about the Opponents

We develop a new approach, named Greedy when Sure and Conservative when Uncertain (GSCU), to competing online against unknown and nonstationary opponents. GSCU improves in four aspects: 1) introduces a novel way of learning opponent policy embeddings offline; 2) trains offline a single best response (conditional additionally on our opponent policy embedding) instead of a finite set of separate best responses against any opponent; 3) computes online a posterior of the current opponent policy embedding, without making the discrete and ineffective decision which type the current opponent belongs to; and 4) selects online between a real-time greedy policy and a fixed conservative policy via an adversarial bandit algorithm, gaining a theoretically better regret than adhering to either. Experimental studies on popular benchmarks demonstrate GSCU’s superiority over the state-of-the-art methods. The code is available online at https://github.com/YeTianJHU/GSCU.
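For readers curious about the fourth point, choosing online between a greedy and a conservative policy with an adversarial bandit, here is a minimal Python sketch. It uses EXP3, a standard adversarial bandit algorithm; the abstract does not say which algorithm GSCU uses, so that choice is an assumption. The class name, the `run_demo` helper, and the fixed reward values are hypothetical and only illustrate the idea; this is not the authors' implementation.

```python
import math
import random

GREEDY, CONSERVATIVE = 0, 1  # hypothetical arm labels for the two policies


class Exp3:
    """EXP3 adversarial bandit over a small set of arms (rewards in [0, 1])."""

    def __init__(self, n_arms, gamma=0.1, seed=None):
        self.n_arms = n_arms
        self.gamma = gamma                  # exploration rate
        self.log_weights = [0.0] * n_arms   # log-space weights avoid overflow
        self.rng = random.Random(seed)

    def probabilities(self):
        # Mix the normalized exponential weights with uniform exploration.
        top = max(self.log_weights)
        exp_w = [math.exp(lw - top) for lw in self.log_weights]
        total = sum(exp_w)
        return [
            (1.0 - self.gamma) * w / total + self.gamma / self.n_arms
            for w in exp_w
        ]

    def select(self):
        probs = self.probabilities()
        return self.rng.choices(range(self.n_arms), weights=probs, k=1)[0]

    def update(self, arm, reward):
        # Importance-weighted reward estimate for the arm that was played.
        prob = self.probabilities()[arm]
        self.log_weights[arm] += self.gamma * (reward / prob) / self.n_arms


def run_demo(rounds=2000, seed=0):
    # Assumed toy setting: the greedy policy happens to earn more per episode.
    rewards = {GREEDY: 0.9, CONSERVATIVE: 0.1}
    bandit = Exp3(n_arms=2, gamma=0.1, seed=seed)
    for _ in range(rounds):
        arm = bandit.select()
        bandit.update(arm, rewards[arm])
    return bandit.probabilities()


if __name__ == "__main__":
    print(run_demo())
```

In this toy run the bandit shifts most of its probability toward the greedy arm because that arm keeps paying more. If the rewards flipped partway through, as they might against a nonstationary opponent, the exploration term would let the bandit notice and shift back toward the conservative arm.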

Rising Startups

HOZON Auto, a smart EV company, has closed its Series D3 funding round, raising over RMB 3 billion (~$444 million). Founded in 2014, the Shanghai-based company delivered a total of 63,131 vehicles in the first half of 2022.

JAKA Robotics, a collaborative robot developer, has raised RMB 1 billion (~$157 million) in its Series D funding round, co-led by Temasek, True Light, SoftBank Vision Fund 2 and Prosperity7 Ventures. Founded in 2014, JAKA designs collaborative robots (cobots) that automate small and mid-sized factories.