💯Alibaba's Qwen2.5 Releases 100 Models, ByteDance's New AI Music Generator, and China's New Draft Rule on AI Labeling

Weekly China AI News from September 9, 2024 to September 22, 2024

Hi, this is Tony! Welcome to this week’s issue of Recode China AI, a newsletter for China’s trending AI news and papers. Also wishing a happy Mid-Autumn Festival to all my readers and your family and friends! May the year ahead bring you abundance, good health, and happiness. 🥮🌝🥮

Three things to know

Alibaba releases its latest Qwen2.5 models, which includes 100 variants.

ByteDance’s new AI model allows you to generate and edit music.

China has released a draft regulation requiring labeling for AIGC.

(By the way, my guest post on Michael Spencer’s AI Supremacy about Chinese AI startups is now fully available to everyone. I hope you would enjoy it.)

Alibaba Releases Latest Qwen2.5 Foundation Models with 100 Variants

What’s New: Last week Alibaba released its latest foundation models, Qwen2.5. This new lineup includes 100 models, featuring Qwen2.5 LLMs and specialized variants for coding and mathematics, trained in various sizes and precision. Alibaba said that it could be one of the largest open-source AI releases to date.

Although no eye-popping innovation was introduced, the models have been improved to follow different instructions, handle structured data, and generate long text. In benchmark language tests, Qwen2.5 72B often matches or surpasses Meta’s largest model, Llama 3.1 405B.

How it Works: Qwen2.5 models are dense, decoder-only language models trained on a 18 trillion token dataset. This gives them a significant advantage in terms of general knowledge, understanding, and versatility. Here’s how the models work across various functionalities:

Language Supports: Qwen2.5 supports over 29 languages, including Chinese, English, French, Spanish, and Arabic. It can process up to 128K tokens and generate long, coherent text of up to 8K tokens.

Enhanced Coding Abilities: Qwen2.5-Coder is specifically designed to program. Trained on 5.5 trillion tokens of code-related data, it can outperform larger models in code generation, debugging, and problem-solving across several programming languages. The model also understands and produces structured outputs, such as JSON.

Mathematical Reasoning: Qwen2.5-Math integrates advanced techniques like Chain-of-Thought (CoT), Program-of-Thought (PoT), and Tool-Integrated Reasoning (TIR), allowing the model to handle complex mathematical problems in both Chinese and English.

Instruction Following and Flexibility: Qwen2.5 models have been fine-tuned to respond to a variety of prompt styles such as role-playing.

Scalable Model Sizes: Qwen2.5 offers various model sizes, starting from 0.5 billion to 72 billion parameters.

Multimodal Support: With the release of Qwen2-VL-72B, the platform also includes vision-language models that are more capable of understanding and generating visual information.

APIs and Open-Source Deployment: For developers who want easy access to these models, Qwen2.5 offers APIs for flagship versions like Qwen-Plus and Qwen-Turbo through Model Studio. Additionally, developers can deploy Qwen2.5 locally using platforms like Hugging Face, ModelScope, and vLLM.

Why it Matters: The release of Qwen2.5 is set to solidify Alibaba and Qwen’s strong position in China’s open AI ecosystem. According to Yuan Jinhui, founder of China’s open-source deep learning framework OneFlow, Qwen is one of the most widely adopted open-source models in China, largely due to its range of model sizes that cater to diverse demands. In contrast, Llama 3.1 has seen limited popularity in China because it lacks a fine-tuned version optimized for the Chinese language.

ByteDance’s New AI Model Generates Music from Lyrics and Edits Melodies

What’s New: Last week, ByteDance researchers introduced Seed-Music, an AI framework that allows users to generate and edit high-quality music. The system combines auto-regressive language modeling, commonly used in LLMs like GPT, with diffusion models, widely applied in image and video generation. It supports both vocal and instrumental music creation from inputs such as lyrics, style prompts, and audio references.

How It Works: Seed-Music integrates three core music representations: audio tokens, symbolic music tokens (or lead sheets), and vocoder latents, each designed for specific creative workflows. Here’s how they work:

Audio Tokens: Compact representations of audio that allow real-time, streaming music generation using an auto-regressive model.

Symbolic Music Tokens: Human-readable scores that allow users to interact with and edit musical elements like melody and harmony before rendering the final track.

Vocoder Latents: High-quality audio representations produced by a diffusion model, offering more nuanced and flexible conditioning for tasks like remixing or multi-track outputs.

Seed-Music enables applications like Lyrics2Song, which generates complete tracks from lyrics, and MusicEDiT, allowing precise adjustments to existing songs (demos below).

Why It Matters: Seed-Music’s unified framework will enable applications like Lyrics2Song, which generates complete tracks from lyrics, and MusicEDiT, allowing precise adjustments to existing songs.

China's New Draft Rule on Labeling AI-Generated Content

What’s New: On September 14, 2024, China’s Cyberspace Administration (CAC) released a draft regulation requiring clear labeling for AI-generated content. Public feedback is open until October 14. The regulation, called the AI-Generated Synthetic Content Labeling Measures 人工智能生成合成内容标识办法(征求意见稿), targets providers of AI-generated text, images, audio, and video to enhance transparency. This draft builds on laws such as the Cybersecurity Law and AI Service Management Provisions.

How It Works: The draft mandates two types of labels: explicit and implicit.

Explicit Labels: Visible marks such as disclaimers or watermarks must be placed on AI-generated text, images, audio, and video. For example, AI-generated videos need clear marks on the opening frame, while text must display disclaimers at appropriate points.

Implicit Labels: Hidden data, such as metadata or watermarks, must be embedded in AI-generated files. These markers contain information such as the content’s source, AI service provider, and a unique identifier. Implicit labels are not immediately visible but can be detected by platforms and authorities to verify content authenticity.

Platforms are required to verify that AI content includes the necessary labels before it can be shared. They must also notify users when AI-generated material is detected, even if explicit labels are absent.

Why It Matters: As AI technologies like deepfakes gain traction, governments are ramping up efforts to prevent the spread of misleading content. China’s new draft rule aims to increase transparency by marking AI-generated material, addressing a growing concern that fake AI content could blur the lines between reality and fabrication.

While some platforms like Xiaohongshu, Bilibili, Weibo, Douyin, and Kuaishou have implemented AI content declarations, the draft regulation introduces a unified standard for all. However, implementing these rules could be costly, especially for smaller firms. AI watermarking technology is still in its infancy and faces reliability issues. Tech giants such as Google have already acknowledged that even advanced solutions, like its SynthID, are vulnerable to attacks.

Weekly News Roundup

Chinese AI firms are developing innovative software solutions to compensate for the lack of advanced GPUs due to American export restrictions. (The Economist)

Leading AI scientists, including Turing Award laureates Geoffrey Hinton and Yoshua Bengio, and Andrew Yao from China’s Tsinghua University, hosted the third meeting of the International Dialogues on A.I. Safety on September 5-8. In a statement, they recommended creating national AI safety authorities and an international body to set guidelines and red lines to prevent A.I.-related disasters. (New York Times)

ByteDance is reportedly intensifying its efforts to develop its own AI chips, with the goal of starting mass production by 2026. This initiative involves the design of two semiconductors in partnership with TSMC. (The Information)

ByteDance later denied the report. The company acknowledged that it is indeed exploring the chip development, but the efforts are still in the early stages, primarily focused on cost optimization for its recommendation and advertising businesses. All projects are fully compliant with relevant trade regulations.

Trending Research

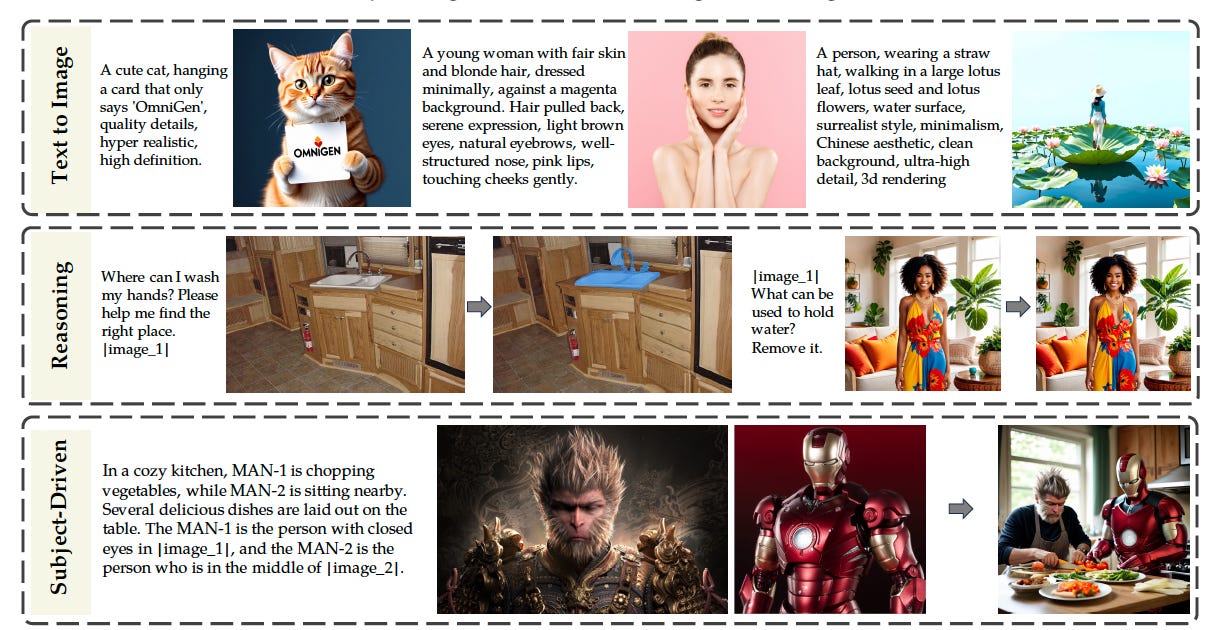

OmniGen: Unified Image Generation

Researchers from Beijing Academy of Artificial Intelligence introduced OmniGen, a unified framework that can handle a wide range of data domains without the need for specialized models for each domain. This approach leverages a shared model architecture that is trained on diverse datasets, enabling it to generate images that are coherent and high-quality. The model can perform image synthesis, super-resolution, and inpainting, while also being adaptable to different styles and content types.

Towards a Unified View of Preference Learning for Large Language Models: A Survey

Researchers from Peking University and Alibaba addresse the complexities of aligning LLMs with human preferences. The authors propose a unified framework that breaks down popular alignment strategies into four components: model, data, feedback, and algorithm. This framework aims to clarify the connections between different methods and provide a deeper understanding of alignment algorithms.