🤖 Alibaba's AI Co-Pilot; Chinese Regulations on Generative AI; Didi's Robotaxi Concept

Weekly China AI News from April 10 to April 16

Lovely readers! Buckle up for an exhilarating week of China AI adventures! We’ll delve into Alibaba’s ChatGPT-like AI and its game-changing cloud strategy. As China unveils its draft regulation on generative AI, I’ll share my thoughts on the topic. And guess what? DiDi is back with a fantastic purpose-built robotaxi concept! Let’s begin! 🚀

Alibaba Refuels Cloud Computing Engine With Tongyi Qianwen

What’s new: Following Microsoft 365’s AI upgrade, Chinese e-commerce titan Alibaba has also decided to harness the power of AI by integrating its large language model (LLM), Tongyi Qianwen, across all of its business applications. DingTalk, Alibaba’s digital workplace collaboration platform, and Tmall Genie, Alibaba’s smart speaker and IoT smart home platform, are among the first to benefit from Tongyi Qianwen’s capabilities.

In a bid to solidify its dominance, Alibaba Cloud, the company’s cloud computing unit and China’s top cloud service provider, has opened applications for Tongyi Qianwen API access to enterprise customers, enabling them to rebuild their applications with generative AI. Beta testing applications for Tongyi Qianwen were previously open, but they are now closed, and access is available only through invitation codes.

Early reviews: I’ve gathered some online reviews and first-hand accounts to gauge user response.

Zhihu: “Pros: its answers to common-sense questions are quite accurate. Its ability to generate articles and classical poems is also good, and it can serve as an everyday writing assistant. Cons: the model is not multimodal, its mathematical ability is relatively weak, its understanding of questions is limited, and its coding ability is still insufficient.”

CSDN: “During the first test of 110 questions, Tongyi Qianwen scored 65 points (35/23/7), but when tested again the next day, it suddenly scored 90.”

Why it matters: The emergence of ChatGPT and the broader boom in generative AI have prompted enterprise clients to boost their cloud computing budgets, and Alibaba aims to capitalize on this lucrative opportunity. According to Chinese media, Microsoft’s Azure – which previously lacked a significant presence in the Chinese cloud market – is now in high demand among Chinese customers because it is the exclusive cloud provider for OpenAI.

“We are at a technological watershed moment driven by generative AI and cloud computing, and businesses across all sectors have started to embrace intelligence transformation to stay ahead of the game,” said Daniel Zhang, Chairman and CEO of Alibaba Group and CEO of Alibaba Cloud Intelligence.

Chinese Regulator Drafts Ground Rules on Generative AI

What’s new: Last week, the Cyberspace Administration of China (CAC), the nation’s top Internet regulator, unveiled a draft regulation on generative AI – the umbrella term for ChatGPT and other popular models that can produce text, images, music, videos, and code. The draft emerged after Baidu, Alibaba, and SenseTime introduced their ChatGPT-like AI models. The CAC is seeking public feedback until May 10, 2023.

Why it matters: Historically, China’s regulation of digital technologies such as P2P finance and ride-hailing has trailed behind rapid advancements in those fields. This early intervention in generative AI came as a surprise to many, positioning China among the first countries to lay the regulatory groundwork for the technology, ahead of the EU and the U.S.

Positive Impact: The draft regulation demonstrates the Chinese government’s overall support for generative AI. It establishes clear boundaries for enterprises, ensuring that they adhere to the rules and avoid potential trouble. The prohibition of discriminatory content and protection of data privacy are also emphasized.

Room for Debate: Despite these benefits, some believe that the draft regulation is overly strict on generative AI technologies. Let’s delve deeper into the nuances of this perspective.

Article 4, Point 4: Content generated by generative AI should be accurate and truthful, with measures taken to prevent the creation of false information.

Generative AI is still in its early stages, and hallucination is an inherent aspect of the technology. While it may be reasonable to restrict generative AI from being used to spread misinformation, preventing it from ever generating false information would be extremely challenging.

Article 5: Organizations and individuals (hereinafter referred to as “providers”) that provide chat and text, image, and sound generation services using generative AI products, including supporting others to generate text, images, and voices through programmable interfaces, shall bear the responsibility of the content producer for the content generated by such products…

From a technical standpoint, it is difficult for service providers to monitor how users create and distribute generated content, so holding providers responsible for users’ potential wrongdoing is problematic. Moreover, the rule reaches beyond content platforms to service providers that offer APIs.

Article 7, Point 4: Ensure the authenticity, accuracy, objectivity, and diversity of data.

The truth is that Chinese-language data corpora still lag behind English datasets in both quantity and quality. As a result, training generative AI on open-source datasets has become a standard industry practice. However, it is unrealistic to expect generative AI service providers to guarantee the authenticity, accuracy, objectivity, and diversity of the open-source datasets they utilize.

Article 9: Providers of generative AI services should require users to provide their real identity information in accordance with the “Cybersecurity Law of the People’s Republic of China.”

In China, users must provide their real identity information when using services that involve information dissemination or instant messaging. Generative AI, however, has users interacting with machines rather than with other people, so mandatory real-name registration may collect more personal information than the service needs. Under the “Personal Information Protection Law of the People’s Republic of China,” data processors should adhere to the principle of minimal necessity when collecting personal information.

Didi Returns With a Futuristic Driverless Robotaxi Concept

What’s new: After nearly two years of silence, Chinese ride-hailing giant DiDi is making a major business move. DiDi Autonomous Driving, the self-driving arm of DiDi Global, announced plans to introduce its first mass-produced robotaxi to its ride-hailing platform by 2025. Below are the main highlights:

DiDi also unveiled its new autonomous truck business, Kargobot, and its first concept robotaxi, DiDi Neuron, at its Shanghai Autonomous Driving Day event.

DiDi launched its autonomous driving project in 2016 and set up a wholly owned subsidiary for it in 2019; it now operates a fleet of over 200 robotaxis. The Kargobot business currently operates over 100 autonomous trucks, with trial operations running between Tianjin and Inner Mongolia.

The concept robotaxi, DiDi Neuron, is a four-passenger robot shuttle featuring an in-vehicle robotic arm that picks up luggage. The design eliminates the driver’s seat to free up space and improve comfort.

New autonomous driving hardware includes the DiDi Beiyao Beta LiDAR and the three-domain fusion computing platform, Orca.

Weekly News Roundup

✍🏻 At the Tech Day event, SenseTime unveiled its AGI development strategy, introducing the SenseNova foundation model set, which includes the ChatGPT-like LLM SenseChat. SenseTime also introduced its text-to-image platform SenseMirage.

💪🏻 Tencent launched a new High-Performance Computing Cluster (HCC) built on its StarLake servers and Nvidia’s H800 GPUs, with 3.2 Tbps of inter-server bandwidth.

✈️ ByteDance's enterprise collaboration platform Feishu, known as Lark outside China, has introduced an AI-powered assistant called My AI, designed to help users summarize meeting notes, generate reports, and rewrite content.

🙋🏻♂️ Zhihu, a Chinese Q&A community, introduced its ChatGPT-like LLM “Zhihaitu AI”, co-developed with ModelBest. Zhihaitu AI is now open for testing.

🚙 Apollo, Baidu’s autonomous driving arm, unveiled an array of smart driving products, including Apollo City Driving Max, an advanced driver assistance system (ADAS) for urban roads that can rely solely on cameras. Meanwhile, Huawei launched ADS 2.0, an ADAS that does not require HD maps and is said to cover 90% of urban roads.

🚗 XPeng introduced its Smart Electric Platform Architecture (SEPA) 2.0 on Sunday, which it says cuts R&D cycles by 20% and reduces costs for ADAS and infotainment systems by 70% and 85%, respectively. XPeng also debuted its G6 coupe SUV.

Trending Research

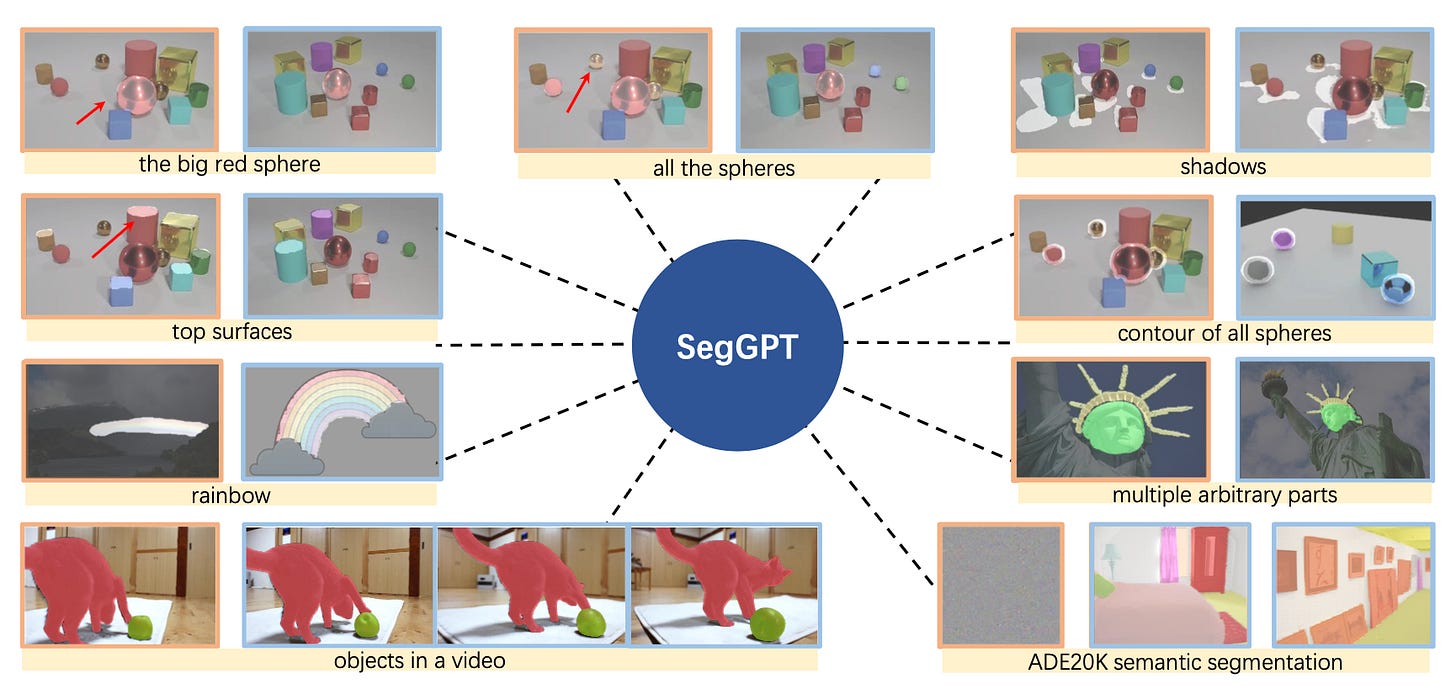

SegGPT: Segmenting Everything In Context

Affiliations: Beijing Academy of Artificial Intelligence, Zhejiang University, Peking University

SegGPT is a generalist model that unifies various segmentation tasks into a single in-context learning framework by transforming different kinds of segmentation data into images of the same format. It is trained as an in-context coloring problem with a random color mapping for each data sample, so the model must rely on the context, rather than on specific colors, to work out the task. SegGPT can perform a range of segmentation tasks in images or videos, including object instance, stuff, part, contour, and text segmentation. It demonstrates strong capabilities in segmenting both in-domain and out-of-domain targets, qualitatively and quantitatively.
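To make the random color mapping idea concrete, here is a minimal Python sketch of the data-side step only, assuming segmentation masks are small 2D lists of integer class IDs. Within one in-context sample, every class gets a freshly drawn random color that is shared by the prompt mask and the target mask, so the same class can look different from one sample to the next. The function name `random_color_mapping` is hypothetical; this is not BAAI's implementation, and the real model works on full images with a vision transformer.

```python
import random


def random_color_mapping(label_maps, seed=None):
    """Color a group of label maps (e.g. prompt and target masks of one sample)
    with a single shared, randomly drawn palette.

    label_maps: list of 2D lists of integer class IDs.
    Returns (colored_maps, palette), where every pixel becomes an (R, G, B) tuple.
    """
    rng = random.Random(seed)
    class_ids = sorted({c for m in label_maps for row in m for c in row})
    palette = {}
    used = set()
    for class_id in class_ids:
        # Redraw until the color is unused, so different classes stay distinguishable.
        while True:
            color = tuple(rng.randrange(256) for _ in range(3))
            if color not in used:
                break
        used.add(color)
        palette[class_id] = color
    # Apply the same palette to every map in the sample.
    colored = [[[palette[c] for c in row] for row in m] for m in label_maps]
    return colored, palette


if __name__ == "__main__":
    prompt_mask = [[0, 1], [1, 0]]
    target_mask = [[1, 1], [0, 0]]
    (prompt_rgb, target_rgb), palette = random_color_mapping([prompt_mask, target_mask])
    print(palette)
    print(target_rgb)
```

Because the palette is redrawn for every sample, memorizing a fixed class-to-color lookup does not help, which is the pressure that pushes the model to read the task from the context pair.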

RRHF: Rank Responses to Align Language Models with Human Feedback without tears

Affiliations: Alibaba DAMO Academy, Tsinghua University

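RRHF is a new learning paradigm that scores responses sampled from different sources (the model itself, other LLMs, or human experts) and learns to align the model’s output probabilities with human preferences through a ranking loss. It aligns language models with human preferences more efficiently and robustly than existing methods, needing only one or two models during tuning, whereas Proximal Policy Optimization (PPO) typically requires four. It is also simpler in terms of coding, model count, and hyperparameters. RRHF achieves performance comparable to PPO in evaluations, and the authors used it to develop a new language model, Wombat.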
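The core objective can be sketched in a few lines of Python. The sketch assumes the per-token log-probabilities of each candidate response (under the model being tuned) and a reward score for each response are already available as plain lists. Following the RRHF formulation as we understand it, each response's score p_i is its length-normalized log-probability; a hinge-style ranking loss penalizes any pair where a lower-reward response receives a higher p_i, and a cross-entropy term fine-tunes on the highest-reward response. The function names are hypothetical, and a real implementation would compute these quantities on tensors and backpropagate through them.

```python
def length_normalized_logprob(token_logprobs):
    """p_i: mean per-token log-probability of one response."""
    return sum(token_logprobs) / len(token_logprobs)


def rrhf_loss(responses_token_logprobs, rewards):
    """Return (total, ranking_loss, finetune_loss) for one query's candidates.

    responses_token_logprobs: list of lists, per-token log-probs of each response.
    rewards: list of reward scores, one per response (higher is better).
    """
    scores = [length_normalized_logprob(lp) for lp in responses_token_logprobs]
    n = len(scores)

    # Ranking loss: for every pair with r_i < r_j, penalize the model
    # whenever it scores the worse response higher (p_i > p_j).
    ranking_loss = 0.0
    for i in range(n):
        for j in range(n):
            if rewards[i] < rewards[j]:
                ranking_loss += max(0.0, scores[i] - scores[j])

    # Fine-tuning loss: negative log-likelihood of the highest-reward response.
    best = max(range(n), key=lambda k: rewards[k])
    finetune_loss = -sum(responses_token_logprobs[best])

    return ranking_loss + finetune_loss, ranking_loss, finetune_loss


if __name__ == "__main__":
    logprobs = [[-1.0, -1.0], [-0.5, -0.5, -0.5], [-2.0]]
    rewards = [0.2, 0.9, 0.5]
    print(rrhf_loss(logprobs, rewards))
```

In this toy example, the only mis-ordered pair is the 0.2-reward response (p = -1.0) outscoring the 0.5-reward response (p = -2.0), so the ranking loss is 1.0, and the fine-tuning term is the negative log-likelihood of the 0.9-reward response, 1.5.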

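DiffFit: Unlocking Transferability of Large Diffusion Models via Simple Parameter-Efficient Fine-Tuning

Affiliations: Huawei Noah’s Ark Lab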

DiffFit is a simple, parameter-efficient strategy for fine-tuning large pre-trained diffusion models so they adapt quickly to new domains. Compared with full fine-tuning, it trains about 2x faster and only needs to store roughly 0.12% of the total model parameters. DiffFit achieves superior or competitive performance on 8 downstream datasets while being more efficient. Notably, it can adapt a low-resolution generative model to a high-resolution one at minimal cost, setting a new state-of-the-art FID on the ImageNet 512x512 benchmark.
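The DiffFit paper describes fine-tuning only the bias terms and newly added scaling factors in specific layers while freezing everything else. The Python sketch below illustrates just that parameter-selection step on a made-up parameter inventory. The parameter names, the `gamma` naming for the scale factors, the sizes, and the `select_trainable` helper are all hypothetical, so the resulting 1% trainable fraction says nothing about the 0.12% reported for the real model.

```python
# Hypothetical parameter inventory: name -> number of scalar parameters.
TOY_PARAMS = {
    "blocks.0.attn.weight": 6000,
    "blocks.0.attn.bias": 30,
    "blocks.0.mlp.weight": 3900,
    "blocks.0.mlp.bias": 30,
    "blocks.0.gamma": 40,  # hypothetical newly added scale factors
}


def select_trainable(param_sizes, trainable_keywords=("bias", "gamma")):
    """Return the names of parameters left trainable and their share of all parameters.

    Any parameter whose name contains one of ``trainable_keywords`` stays
    trainable; every other parameter is treated as frozen.
    """
    trainable = {
        name: size
        for name, size in param_sizes.items()
        if any(key in name for key in trainable_keywords)
    }
    fraction = sum(trainable.values()) / sum(param_sizes.values())
    return sorted(trainable), fraction


if __name__ == "__main__":
    names, fraction = select_trainable(TOY_PARAMS)
    print(names)
    print(f"{fraction:.2%} of parameters are trainable")
```

In a real framework the same rule would be applied by toggling each parameter's gradient flag, and only the small trainable subset would need to be stored per downstream domain, which is where the storage savings come from.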